Abstract

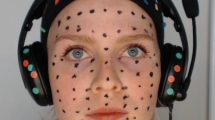

Current marker-based facial motion capture methods can lose target markers under considerable occlusion or blur. Correcting these tracking failures by hand is labor-intensive. A robust marker tracking method with long-term stability is therefore needed to reduce manual work. In this paper, we present a new facial marker tracking system that focuses on the accuracy and stability of performance capture. The system includes a synthetic analysis step that combines a robust optical flow tracking method with the proposed Marker-YOLO detector. To evaluate the system, we captured a real dataset of performances by volunteer actors, with ground truth labels provided by artists for the subsequent experiments. The results show that our approach outperforms state-of-the-art trackers such as SiamDW and ECO in specific tasks while running in real time at 38 fps. Root-mean-squared error and area-under-the-curve results confirm the improvements in the accuracy and stability of our approach.
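As a rough illustration of the two evaluation measures named above, the following sketch computes a root-mean-squared error and a normalized area under a precision curve for tracked marker positions. It is not the authors' evaluation code. The abstract does not say which curve the area is taken under, so the precision-curve definition, the function names `rmse` and `precision_auc`, and the parameters `max_threshold` and `steps` are all assumptions made for this example.

```python
import math


def rmse(pred, truth):
    """Root-mean-squared Euclidean error between two lists of (x, y) points."""
    if len(pred) != len(truth) or not pred:
        raise ValueError("pred and truth must be non-empty and of equal length")
    squared = [(px - tx) ** 2 + (py - ty) ** 2
               for (px, py), (tx, ty) in zip(pred, truth)]
    return math.sqrt(sum(squared) / len(squared))


def precision_auc(pred, truth, max_threshold=20.0, steps=21):
    """Normalized area under a precision curve (an assumed definition).

    Precision at threshold t is the fraction of points whose error is <= t.
    The curve is sampled at `steps` evenly spaced thresholds in
    [0, max_threshold] and integrated with the trapezoidal rule; dividing by
    max_threshold keeps the result in [0, 1].
    """
    if len(pred) != len(truth) or not pred:
        raise ValueError("pred and truth must be non-empty and of equal length")
    if steps < 2 or max_threshold <= 0:
        raise ValueError("need steps >= 2 and max_threshold > 0")
    errors = [math.hypot(px - tx, py - ty)
              for (px, py), (tx, ty) in zip(pred, truth)]
    thresholds = [max_threshold * i / (steps - 1) for i in range(steps)]
    precision = [sum(e <= t for e in errors) / len(errors) for t in thresholds]
    area = sum((precision[i] + precision[i + 1]) / 2.0
               * (thresholds[i + 1] - thresholds[i])
               for i in range(steps - 1))
    return area / max_threshold
```

Under these assumptions, a lower `rmse` indicates more accurate marker positions, while a `precision_auc` closer to 1 indicates that the tracker stays within small error thresholds across more frames.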

Data availability

The datasets generated and analyzed during the current study are not publicly available because the likeness rights granted for the volunteers’ faces are limited in scope.

Funding

This work was supported by the National Key Research and Development Program of China (No. 2022YFF0902303), the Beijing Municipal Science & Technology Commission and Administrative Commission of Zhongguancun Science Park (Z221100007722002), the National Natural Science Foundation of China (No. 62072036), and the 111 Project (B18005).

Ethics declarations

Conflict of interest

There are no conflicts of interest.

Supplementary Information

Below is the link to the electronic supplementary material.

Supplementary file 1 (mp4 45969 KB)

About this article

Cite this article

Tian, Z., Weng, D., Fang, H. et al. Robust facial marker tracking based on a synthetic analysis of optical flows and the YOLO network. Vis Comput 40, 2471–2489 (2024). https://doi.org/10.1007/s00371-023-02931-w

DOI: https://doi.org/10.1007/s00371-023-02931-w