Abstract

Sign language recognition (SLR) is a challenging task that requires a thorough understanding of spatial-temporal visual features in order to translate sign language into comprehensible written or spoken language. However, existing SLR methods overlook key spatial-temporal representations because they are sparse and inconsistent in space and time. To solve this problem, we present a difference-guided multi-scale spatial-temporal representation (DMST) learning model for SLR. In DMST, we devise two modules: (1) key spatial-temporal representation, which extracts and enhances key spatial-temporal information through a spatial-temporal difference strategy, and (2) multi-scale sequence alignment, which perceives and fuses multi-scale spatial-temporal features and performs sequence mapping. The DMST model outperforms state-of-the-art methods on four public sign language datasets, which demonstrates the superiority of the DMST model and the significance of key spatial-temporal representation for SLR.
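The abstract does not spell out how the difference strategy or the multi-scale fusion is computed, so the following is only a minimal sketch of the general idea, not the DMST implementation. It assumes frames are flattened grayscale vectors, scores each frame by its mean absolute difference from the previous frame, and average-pools those scores over non-overlapping windows of several sizes. All names (`temporal_difference_scores`, `normalize`, `multi_scale_pool`) and the toy data are hypothetical.

```python
from typing import Dict, List, Sequence

Frame = Sequence[float]  # one flattened grayscale frame (assumed representation)


def temporal_difference_scores(frames: Sequence[Frame]) -> List[float]:
    """Mean absolute difference between each frame and its predecessor.

    The first frame has no predecessor, so its score is 0.0.
    """
    scores = [0.0]
    for prev, curr in zip(frames, frames[1:]):
        diff = sum(abs(c - p) for p, c in zip(prev, curr)) / len(curr)
        scores.append(diff)
    return scores


def normalize(scores: Sequence[float]) -> List[float]:
    """Scale scores to sum to 1; use uniform weights if every score is zero."""
    total = sum(scores)
    if total == 0:
        return [1.0 / len(scores)] * len(scores)
    return [s / total for s in scores]


def multi_scale_pool(
    values: Sequence[float], window_sizes: Sequence[int]
) -> Dict[int, List[float]]:
    """Average-pool a 1-D sequence with non-overlapping windows of each size."""
    pooled = {}
    for w in window_sizes:
        pooled[w] = [
            sum(values[i:i + w]) / len(values[i:i + w])
            for i in range(0, len(values), w)
        ]
    return pooled


# Toy clip: four frames of four pixels; motion occurs only at the third frame.
frames = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
]
scores = temporal_difference_scores(frames)
weights = normalize(scores)
pooled = multi_scale_pool(scores, window_sizes=[1, 2])
```

In this toy clip only the third frame differs from its predecessor, so it receives all of the normalized weight. A learned model would apply such differences to feature maps rather than raw pixels, and would fuse the scales with trainable layers rather than plain averaging.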

Notes

The gloss represents the sign with its closest meaning in natural languages.

References

Bahdanau, D., Cho, K., Bengio, Y.: Neural machine translation by jointly learning to align and translate. arXiv (2014)

Camgoz, N.C., Hadfield, S., Koller, O., Ney, H., Bowden, R.: Neural sign language translation. In: CVPR, pp. 7784–7793 (2018)

Camgoz, N.C., Koller, O., Hadfield, S., Bowden, R.: Sign language transformers: joint end-to-end sign language recognition and translation. In: CVPR, pp. 10023–10033 (2020)

Cheng, K.L., Yang, Z., Chen, Q., Tai, Y.W.: Fully convolutional networks for continuous sign language recognition. In: ECCV, pp. 697–714 (2020)

Chung, J., Gulcehre, C., Cho, K., Bengio, Y.: Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv (2014)

Cihan Camgoz, N., Hadfield, S., Koller, O., Bowden, R.: Subunets: end-to-end hand shape and continuous sign language recognition. In: ICCV, pp. 3056–3065 (2017)

Cui, R., Liu, H., Zhang, C.: Recurrent convolutional neural networks for continuous sign language recognition by staged optimization. In: CVPR, pp. 7361–7369 (2017)

Cui, R., Liu, H., Zhang, C.: A deep neural framework for continuous sign language recognition by iterative training. IEEE TMM 21(7), 1880–1891 (2019)

Dalal, N., Triggs, B.: Histograms of oriented gradients for human detection. In: CVPR, vol. 1, pp. 886–893 (2005)

Evangelidis, G., Singh, G., Horaud, R.: Continuous gesture recognition from articulated poses. In: ECCVW, pp. 595–607 (2014)

Gharbi, H., Bahroun, S., Massaoudi, M., Zagrouba, E.: Key frames extraction using graph modularity clustering for efficient video summarization. In: ICASSP, pp. 1502–1506 (2017)

Graves, A., Fernández, S., Gomez, F., Schmidhuber, J.: Connectionist temporal classification: labelling unsegmented sequence data with recurrent neural networks. In: ICML, pp. 369–376 (2006)

Guo, D., Zhou, W., Li, H., Wang, M.: Hierarchical LSTM for sign language translation. In: AAAI, vol. 32 (2018)

Guo, D., Wang, S., Tian, Q., Wang, M.: Dense temporal convolution network for sign language translation. In: IJCAI, pp. 744–750 (2019)

Hao, A., Min, Y., Chen, X.: Self-mutual distillation learning for continuous sign language recognition. In: ICCV, pp. 11303–11312 (2021)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: CVPR, pp. 770–778 (2016)

Hochreiter, S., Schmidhuber, J.: Long short-term memory. Neural Comput. 9(8), 1735–1780 (1997)

Huang, J., Zhou, W., Zhang, Q., Li, H., Li, W.: Video-based sign language recognition without temporal segmentation. In: AAAI, vol. 32 (2018)

Iandola, F.N., Han, S., Moskewicz, M.W., Ashraf, K., Dally, W.J., Keutzer, K.: Squeezenet: Alexnet-level accuracy with 50x fewer parameters and \(<\) 0.5 mb model size. arXiv (2016)

Kar, A., Rai, N., Sikka, K., Sharma, G.: Adascan: adaptive scan pooling in deep convolutional neural networks for human action recognition in videos. In: CVPR, pp. 3376–3385 (2017)

Koller, O., Forster, J., Ney, H.: Continuous sign language recognition: towards large vocabulary statistical recognition systems handling multiple signers. CVIU 141, 108–125 (2015)

Koller, O., Zargaran, O., Ney, H., Bowden, R.: Deep sign: hybrid CNN-HMM for continuous sign language recognition. In: BMVC (2016)

Koller, O., Zargaran, O., Ney, H., Bowden, R.: Deep sign: hybrid CNN-HMM for continuous sign language recognition. In: BMVC (2016)

Koller, O., Zargaran, S., Ney, H.: Re-sign: re-aligned end-to-end sequence modelling with deep recurrent CNN-HMMs. In: CVPR, pp. 4297–4305 (2017)

Koller, O., Camgoz, N.C., Ney, H., Bowden, R.: Weakly supervised learning with multi-stream CNN-LSTM-HMMs to discover sequential parallelism in sign language videos. IEEE TPAMI 42(9), 2306–2320 (2019)

Kuncheva, L.I., Yousefi, P., Almeida, J.: Edited nearest neighbour for selecting keyframe summaries of egocentric videos. J. Vis. Commun. Image Represent. 52, 118–130 (2018)

Li, H., Gao, L., Han, R., Wan, L., Feng, W.: Key action and joint CTC-attention based sign language recognition. In: ICASSP, pp. 2348–2352 (2020)

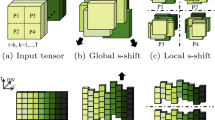

Lin, J., Gan, C., Han, S.: TSM: temporal shift module for efficient video understanding. In: ICCV, pp. 7083–7093 (2019)

Ma, N., Zhang, X., Zheng, H.T., Sun, J.: Shufflenet v2: practical guidelines for efficient CNN architecture design. In: ECCV, pp. 116–131 (2018)

Min, Y., Hao, A., Chai, X., Chen, X.: Visual alignment constraint for continuous sign language recognition. In: ICCV, pp. 11542–11551 (2021)

Niu, Z., Mak, B.: Stochastic fine-grained labeling of multi-state sign glosses for continuous sign language recognition. In: ECCV, pp. 172–186 (2020)

Pan, Y., Mei, T., Yao, T., Li, H., Rui, Y.: Jointly modeling embedding and translation to bridge video and language. In: CVPR, pp. 4594–4602 (2016)

Pfister, T., Charles, J., Zisserman, A.: Large-scale learning of sign language by watching tv (using co-occurrences). In: BMVC (2013)

Pu, J., Zhou, W., Li, H.: Dilated convolutional network with iterative optimization for continuous sign language recognition. In: IJCAI, vol. 3, p. 7 (2018)

Pu, J., Zhou, W., Li, H.: Iterative alignment network for continuous sign language recognition. In: CVPR, pp. 4165–4174 (2019)

Pu, J., Zhou, W., Hu, H., Li, H.: Boosting continuous sign language recognition via cross modality augmentation. In: ACM MM, pp. 1497–1505 (2020)

Qiu, Z., Yao, T., Mei, T.: Learning spatio-temporal representation with pseudo-3d residual networks. In: ICCV, pp. 5533–5541 (2017)

Radosavovic, I., Kosaraju, R.P., Girshick, R., He, K., Dollár, P.: Designing network design spaces. In: CVPR, pp. 10428–10436 (2020)

Simonyan, K., Zisserman, A.: Two-stream convolutional networks for action recognition in videos. In: NIPS, vol. 27 (2014)

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, Ł., Polosukhin, I.: Attention is all you need. In: NIPS, vol. 30 (2017)

Vazquez-Enriquez, M., Alba-Castro, J.L., Docío-Fernández, L., Rodriguez-Banga, E.: Isolated sign language recognition with multi-scale spatial-temporal graph convolutional networks. In: CVPR, pp. 3462–3471 (2021)

Venugopalan, S., Rohrbach, M., Donahue, J., Mooney, R., Darrell, T., Saenko, K.: Sequence to sequence-video to text. In: ICCV, pp. 4534–4542 (2015)

Wang, S., Guo, D., Zhou, W.G., Zha, Z.J., Wang, M.: Connectionist temporal fusion for sign language translation. In: ACM MM, pp. 1483–1491 (2018)

Wei, C., Zhao, J., Zhou, W., Li, H.: Semantic boundary detection with reinforcement learning for continuous sign language recognition. IEEE TCSVT 31(3), 1138–1149 (2020)

Xie, P., Zhao, M., Hu, X.: PiSLTRc: position-informed sign language transformer with content-aware convolution. IEEE TMM 24, 3908–3919 (2021)

Xie, P., Cui, Z., Du, Y., Zhao, M., Cui, J., Wang, B., Hu, X.: Multi-scale local-temporal similarity fusion for continuous sign language recognition. Pattern Recognit. 136, 109–233 (2023)

Yang, W., Tao, J., Ye, Z.: Continuous sign language recognition using level building based on fast hidden Markov model. Pattern Recognit. Lett. 78, 28–35 (2016)

Yang, Z., Shi, Z., Shen, X., Tai, Y.W.: Sf-net: Structured feature network for continuous sign language recognition. arXiv (2019)

Yao, L., Torabi, A., Cho, K., Ballas, N., Pal, C., Larochelle, H., Courville, A.: Describing videos by exploiting temporal structure. In: ICCV, pp. 4507–4515 (2015)

Zhou, H., Zhou, W., Li, H.: Dynamic pseudo label decoding for continuous sign language recognition. In: ICME, pp. 1282–1287 (2019)

Zhou, H., Zhou, W., Zhou, Y., Li, H.: Spatial-temporal multi-cue network for continuous sign language recognition. In: AAAI, vol. 34, pp. 13009–13016 (2020)

Zhou, H., Zhou, W., Qi, W., Pu, J., Li, H.: Improving sign language translation with monolingual data by sign back-translation. In: CVPR, pp. 1316–1325 (2021)

Zhou, H., Zhou, W., Zhou, Y., Li, H.: Spatial-temporal multi-cue network for sign language recognition and translation. IEEE TMM 24, 768–779 (2021)

Zhu, W., Hu, J., Sun, G., Cao, X., Qiao, Y.: A key volume mining deep framework for action recognition. In: CVPR, pp. 1991–1999 (2016)

Zhu, Q., Li, J., Yuan, F., Gan, Q.: Multi-scale temporal network for continuous sign language recognition. arXiv preprint arXiv:2204.03864 (2022)

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 62072334).

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Data Availability Statement

All datasets analysed during this study are available in links.

About this article

Cite this article

Gao, L., Hu, L., Lyu, F. et al. Difference-guided multi-scale spatial-temporal representation for sign language recognition. Vis Comput 39, 3417–3428 (2023). https://doi.org/10.1007/s00371-023-02979-8

DOI: https://doi.org/10.1007/s00371-023-02979-8