Abstract

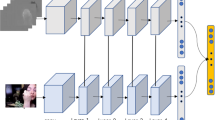

This paper proposes a Siamese motion-aware Spatio-temporal network (SiamMAST) for video action recognition. The SiamMAST is designed based on the fusion of four features via processing video frames: spatial features, temporal features, spatial dynamic features, and temporal dynamic features of a moving target. The SiamMAST comprises AlexNets as the backbone, LSTMs, and the spatial motion-awareness and temporal motion-awareness sub-modules. RGB images are fed into the network, where AlexNets extract spatial features. Further, they are fed into LSTMs to generate temporal features. Additionally, spatial motion-awareness and temporal motion-awareness sub-modules are proposed to capture spatial and temporal dynamic features. Finally, all features are fused and fed into the classification layer. The final recognition result is produced by averaging the test label probabilities across a fixed number of RGB frames and selecting the label of the highest probability. The whole network is trained offline using an end-to-end approach with large-scale image datasets using the standard SGD algorithm with back-propagation. The proposed network is evaluated on two challenging datasets UCF101 (93.53%) and HMDB51 (69.36%). The experiments have demonstrated the effectiveness and efficiency of our proposed SiamMAST.

Similar content being viewed by others

References

Krizhevsky, A., Sutskever, I, Hinton, G: ImageNet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems (NIPs), pp. 1097–1105 (2012)

Yu, F., Koltun, V.: Multi-scale context aggregation by dilated convolutions. arXiv preprint arXiv: 1511.07122 (2015)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 770–778 (2016)

Feichtenhofer, C., Pinz, A., Zisserman, A.: Convolutional two-stream network fusion for video action recognition. In: Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1933–1941 (2016)

Simonyan, K., Zisserman, A.: Two-stream convolutional networks for action recognition in videos In Proceedings of the Advance Neural Information Processing System, pp. 568–576 (2014)

Karpathy, A., Toderici, G., Shetty, S., Leung, T., Sukthankar, R., Li, F.: Large-scale video classification with convolutional neural networks. In: 2014 IEEE Conference on Computer Vision and Pattern Recognition, pp. 1725–1732 (2014)

Ng, J., Hausknecht, M., Vijayanarasimhan, S., Vinyals, O., Monga, R., Toderici, G.: Beyond short snippets: deep networks for video classification. In: 2015 IEEE Conference on Computer Vision and Pattern Recognition, pp. 4694–4702 (2015)

Wang, L., Xiong, Y., Wang, Z., Qiao, Y., Lin, D., Tang, X., Gool, L.: Temporal segment networks: towards good practices for deep action recognition. In: European Conference on Computer Vision (ECCV), pp. 20–36 (2016)

Tran, D., Wang, H., Torresani, L., Ray, J., LeCun, Y., Paluri, M.: A closer look at spatiotemporal convolutions for action recognition. In: Proceedings of the IEEE Confernce in Computing Visual Pattern Recognition, pp. 6450–6459 (2018)

Carreira, J., Zisserman, A.: Quo vadis, action recognition? A new model and the kinetics dataset. In: Proceedings of the IEEE Confernce in Computing Visual Pattern Recognition, pp. 6299–6308 (2017)

Laptev, I.: On space-time interest points. Int. J. Comput. Vis. 64(2), 107–123 (2005)

Li, Y., Ye, J., Wang, T., Huang, S.: Augmenting bag-of-words: a robust contextual representation of spatiotemporal interest points for action recognition. Vis. Comput. 31(10), 1383–1394 (2015)

Dawn, D.D., Shaikh, S.H.: A comprehensive survey of human action recognition with spatio-temporal interest point (STIP) detector. Vis. Comput. 32(3), 289–306 (2016)

Sanchez, J., Perronnin, F., Mensink, T., Verbeek, J.: Image classification with the fisher vector: theory and practice. Int. J. Com. Vis. 105(3), 222–245 (2013)

Jegou, H., Perronnin, F., Douze, M., Sánchez, J., Pérez, P., Schmid, C.: Aggregating local image descriptors into compact codes. IEEE Trans. Pattern Anal. Mach. Intell. 34(9), 1704–1716 (2012)

Thanikachalam, V., Thyagharajan, K.: Human action recognition using motion history image and correlation filter. Int. J. Appl. Eng. Res. 10(34), 361–363 (2015)

Jiang, Y., Dai, Q., Xue, X., Liu, W., Ngo, CW.: Trajectory‐based modeling of human actions with motion reference points. In: European Conference on Computer Vision (ECCV), pp. 425–438. Springer (2012)

Sadanand, S., Corso, J.: A high‐level representation of activity in video. In: 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1234–1241 (2012)

Dalal, N., Triggs, B., Schmid, C.: Human detection using oriented histograms of flow and appearance. In: European Conference on Computer Vision (ECCV), pp. 428–441. Springer (2006)

Wang, H., Schmid, C.: Action recognition with improved trajectories. In: 2013 IEEE conference on computer vision (ICCV), pp. 3551–3558 (2013)

Laptev, I., Marszalek, M., Schmid, C., Rozenfeld, B.: Learning realistic human actions from movies. In: 2008 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1–8 (2008)

Raman, N., Maybank, S.: Activity recognition using a supervised non-parametric hierarchical HMM. Neurocomputing 199, 163–177 (2016)

Abidine, M., Fergani, B.: Evaluating C‐SVM, CRF and LDA classification for daily activity recognition. In: 2012 International Conference on Multimedia Computing and Systems, pp. 272–277 (2012)

Klaser, A., Marszalek, M., Schmid, C.: A spatio-temporal descriptor based on 3D-gradients, In: 2008 19th British Machine Vision Conference (BMVC), pp. 275–1 (2008)

Willems, G., Tuytelaars, T., Gool, L.: An efficient dense and scale-invariant spatio-temporal interest point detector. In: European Conference on Computer Vision (ECCV), pp. 650–663. Springer (2008)

Dollar, P., Rabaud, V., Cottrell, G., Belongie, S.: Behavior recognition via sparse spatio-temporal features. In: 2005 IEEE International Workshop on Visual Surveillance and Performance Evaluation of Tracking and Surveillance, pp. 65–72 (2005)

Csurka, G., Dance, C., Fan, L., Willamowski, J., Bray, C.: Visual categorization with bags of keypoints. In: ECCV Workshop on statistical learning in computer vision, pp. 1–22 (2004)

Cai, Z., Wang, L., Peng, X., Qiao, Y.: Multi-view super vector for action recognition. In: 2014 IEEE Conference on Computer Vision and Pattern Recognition, pp. 596–603 (2014)

Wang, H., Klaser, A., Schmid, C., Liu, C.: Action recognition by dense trajectories. In: 2011 IEEE Conference on Computer Vision and Pattern Recognition, pp. 3169–317 (2016)

Jain, M., Jegou, H., Bouthemy, P.: Better exploiting motion for better action recognition. In 2013 IEEE Conference on Computer Vision and Pattern Recognition, pp. 2555–2562 (2013)

Liang, D., Liang, H., Yu, Z., Zhang, Y.: Deep convolutional BiLSTM fusion network for facial expression recognition. Vis. Comput. 37, 1327–1341 (2021)

Donahue, J., Hendricks, L., Guadarrama, S., Rohrbach M., Venugopalan S., Saenko K., Darrell T.: Long-term recurrent convolutional networks for visual recognition and description. In: 2015 IEEE Conference on Computer Vision and Pattern Recognition, pp. 2625–2634 (2015)

Wang, L., Qiao, Y., Tang, X.: Action recognition with trajectory-pooled deep-convolutional descriptors. In: 2015 IEEE Conference on Computer Vision and Pattern Recognition, pp. 4305–4314 (2015)

Gogi´c, I., Manhart, M., Pandži´c, l., Ahlberg, J.: Fast facial expression recognition using local binary features and shallow neural networks. Vis. Comput. 36, 97–112 (2020)

Abdelbaky, A., Aly, S.: Two-stream spatiotemporal feature fusion for human action recognition. Vis. Comput. 37, 1821–1835 (2021)

Chan, T., Jia, K., Gao, S., Lu, J., Zeng, Z., Ma, Y.: PCANet: A Simple Deep Learning Baseline for Image Classification? arXiv preprint arXiv: 1404.3606v2 (2014)

Tao, R., Gavves, E., Smeulders, A.: Siamese Instance Search for Tracking. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1420–1429 (2016)

Soomro, K., Zamir, A., Shah, M.: UCF101: A dataset of 101 human actions classes from videos in the wild. arXiv preprint: arXiv:1212.0402 (2012)

Kuehne, H., Jhuang, H., Garrote, E., Poggio, T., Serre, T.: HMDB: A large video database for human motion recognition. In: 2011 IEEE Conference on Computer Vision (ICCV), pp. 2556–2563 (2011)

Liu, H., Jie, Z., Jayashree, K., Qi, M., Jiang, J., Yan, S., Feng, J.: Video-based Person Re-identification with accumulative motion context. IEEE Trans Circuits Syst Video Technol 28(10):2788–2802 (2018)

Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., Hua, Z., Karpathy, A., Khosla, A., Bernstein, M., Berg, A., Li, F.: ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 115(3), 211–252 (2015)

Peng, X., Wang, L., Wang, X., Qiao, Y.: Bag of visual words and fusion methods for action recognition: comprehensive study and good practice. Comput. Vis. Image Underst. 150, 109–125 (2016)

Varol, G., Laptev, I., Schmid, C.: Long-term temporal convolutions for action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 40(6), 1510–1517 (2017)

Tran, D., Bourdev, L., Fergus, R., Torresani, L., Paluri, M.: Learning spatiotemporal features with 3D convolutional networks. In: 2011 IEEE Conference on Computer Vision (ICCV), pp. 4489–4497 (2015)

Bilen, H., Fernando, B., Gavves, E., Vedaldi, A., Gould, S.: Dynamic image networks for action recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, pp. 3034–3042 (2016)

Zhu, W., Hu, J., Sun, G., Cao, X., Qiao, Y.: A key volume mining deep framework for action recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, pp. 1991–1999 (2016)

Tran, D., Ray, J., Shou, Z., Chang, S., Paluri, M.: Convnet architecture search for spatiotemporal feature learning. arXiv preprint: arXiv:1708.05038 (2017)

Diba, A., Fayyaz, M., Sharma, V., Karami, A., Arzani, M., Yousefzadeh, R., Gool, L.: Temporal 3d convnets: New architecture and transfer learning for video classification. arXiv preprint: arXiv:1711.08200 (2017)

Maaten, L., Hinton, G.: Visualizing data using t-SNE. J. Mach. Learn. Res. 9(11), 2579–2605 (2008)

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 52277127), Science and Technology Innovation Talent Project of Sichuan Province (Grant No. 2021JDRC0012), Independent Research Project of National Key Laboratory of Traction Power of China (Grant No. 2019TPL-T19), Key Interdisciplinary Basic Research Project of Southwest Jiaotong University (Grant No. 2682021ZTPY089), Open Research Project of National Rail Transit Electrification and Automation Engineering Technology Research Center and Chengdu Guojia Electrical Engineering Co., Ltd (Grant No. NEEC-2019-B06), and State Scholarship Fund of China Scholarship Council. (Grant No. 202007000101).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Lu, X., Quan, W., Marek, R. et al. SiamMAST: Siamese motion-aware spatio-temporal network for video action recognition. Vis Comput 40, 3163–3181 (2024). https://doi.org/10.1007/s00371-023-03018-2

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00371-023-03018-2