Abstract

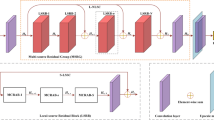

Models based on convolutional neural networks (CNNs) have achieved excellent results in image super-resolution by learning prior knowledge from large numbers of images. However, such models still suffer from several problems: features from different layers of a deep network cannot be fused effectively, the number of parameters is too large, and features cannot be learned across channels. To address these problems, this paper proposes a deep recursive residual channel attention network (DRRCAN). First, to fuse the information from different layers of the deep network effectively, a channel feature fusion module is constructed. Second, to prevent the number of parameters from growing sharply as the network gets deeper, recursive blocks are adopted; because the recursions share the same weights, the number of parameters in the deep network is greatly reduced. Third, channel attention is integrated so that the model can learn features across channels. In addition, residual modules and long skip connections are introduced to avoid exploding or vanishing gradients and to improve the stability and generalization ability of the model. Extensive evaluations on benchmark datasets demonstrate the superiority of DRRCAN over existing algorithms.
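The mechanisms summarized above can be illustrated with a small NumPy sketch. It is not the authors' DRRCAN implementation: the structure of the residual unit (3x3 conv, ReLU, 3x3 conv, then channel attention, in the style of RCAN's residual channel attention block), the reduction ratio `r`, the number of recursions, and all function names (`conv3x3`, `channel_attention`, `residual_unit`, `forward`, `init_params`, `count_params`) are hypothetical choices for illustration. The channel feature fusion module and the upsampling stage are omitted because the abstract does not describe them. The sketch shows three things: channel attention rescales each channel by a weight in (0, 1); the recursive block reuses one set of weights, so depth grows without adding parameters; and a long skip connection adds the input back to the output.

```python
import numpy as np

# Hypothetical sketch, not the authors' DRRCAN code. Feature maps are (C, H, W).


def conv3x3(x, w, b):
    """Zero-padded 3x3 convolution. x: (Cin, H, W), w: (Cout, Cin, 3, 3), b: (Cout,)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(3):
        for dx in range(3):
            # Mix input channels for this kernel offset and accumulate.
            out += np.einsum("oc,chw->ohw", w[:, :, dy, dx], xp[:, dy:dy + h, dx:dx + wd])
    return out + b[:, None, None]


def channel_attention(x, w_down, w_up):
    """RCAN-style channel attention (biases omitted for brevity)."""
    z = x.mean(axis=(1, 2))                  # squeeze: one descriptor per channel
    s = np.maximum(w_down @ z, 0.0)          # reduce C -> C // r, then ReLU
    a = 1.0 / (1.0 + np.exp(-(w_up @ s)))    # expand back to C, sigmoid -> (0, 1)
    return x * a[:, None, None]              # rescale each channel


def residual_unit(x, p):
    """Assumed unit: conv -> ReLU -> conv -> channel attention, plus identity shortcut."""
    y = np.maximum(conv3x3(x, p["w1"], p["b1"]), 0.0)
    y = conv3x3(y, p["w2"], p["b2"])
    return x + channel_attention(y, p["w_down"], p["w_up"])


def forward(x, p, num_recursions):
    """Recursive block with shared weights, followed by a long skip connection."""
    h = x
    for _ in range(num_recursions):
        h = residual_unit(h, p)  # the same p at every step: parameters are shared
    return x + h                 # long skip connection from input to output


def init_params(c, r, rng):
    """Small random weights for one residual unit with C channels and reduction ratio r."""
    return {
        "w1": rng.normal(0.0, 0.05, (c, c, 3, 3)), "b1": np.zeros(c),
        "w2": rng.normal(0.0, 0.05, (c, c, 3, 3)), "b2": np.zeros(c),
        "w_down": rng.normal(0.0, 0.05, (c // r, c)),
        "w_up": rng.normal(0.0, 0.05, (c, c // r)),
    }


def count_params(p):
    return sum(v.size for v in p.values())


rng = np.random.default_rng(0)
params = init_params(c=16, r=4, rng=rng)
x = rng.normal(size=(16, 8, 8))
y4 = forward(x, params, num_recursions=4)
y16 = forward(x, params, num_recursions=16)
print(y4.shape, y16.shape, count_params(params))  # (16, 8, 8) (16, 8, 8) 4768
```

Because `forward` reuses the same `params` at every recursion, running 16 recursions instead of 4 quadruples the effective depth while the parameter count stays at 4,768. In a trained network the weights would be learned, and a reconstruction (upsampling) stage would follow this body.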

Data Availability

All datasets used in this paper are standard benchmark datasets that have been widely used and validated in many previous studies.

Acknowledgements

This research was supported by the National Natural Science Foundation of China (62002200, 62202268, 62272281) and Shandong Provincial Natural Science Foundation (ZR2020QF012, ZR2021QF134, ZR2021MF068, ZR2021MF015, ZR2021MF107).

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

About this article

Cite this article

Liu, Y., Yang, D., Zhang, F. et al. Deep recurrent residual channel attention network for single image super-resolution. Vis Comput 40, 3441–3456 (2024). https://doi.org/10.1007/s00371-023-03044-0
