Abstract

Modeling spatiotemporal relationships is an important and challenging problem in video action recognition. To address it, current methods typically rely on 2D or 3D CNN operations that model local spatiotemporal dependencies at fixed scales. However, most of these models fail to emphasize the keyframes and action-sensitive regions of the input video, which limits their performance. In this paper, we propose an action recognition network with local motion feature extraction and a spatiotemporal attention mechanism. The network consists of a motion capture (MC) module, which captures detailed motion features, and temporal attention (TA) and spatiotemporal attention (STA) modules, which learn the contribution of each frame and each region to the action at the feature level. To evaluate the network, we construct a concrete water addition violation dataset (CWAVD), which can be used to identify water addition violations by construction site workers and thereby improve the efficiency and quality of construction management. The proposed network achieves state-of-the-art performance on three challenging benchmark datasets: UCF101 (97.6%), HMDB51 (77.3%) and SSV2 (67.8%).
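The abstract states what the TA and STA modules learn but not how they compute it. As a rough, hypothetical illustration of frame-level and region-level reweighting (not the authors' implementation), the NumPy sketch below scores each frame of a `(T, C, H, W)` feature map with a softmax over frames, then gates every (frame, position) location with a sigmoid. The projection vectors `w` and `v`, the global-average-pooling step and the function names are all assumptions; in a trained network these projections would be learned parameters.

```python
import numpy as np


def softmax(x, axis):
    # Subtract the max for numerical stability before exponentiating
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def temporal_attention(feats, w):
    """Reweight the frames of a (T, C, H, W) feature map.

    Hypothetical sketch: each frame is global-average-pooled to a C-dim
    vector and scored with the projection vector w of shape (C,).
    """
    pooled = feats.mean(axis=(2, 3))      # (T, C) per-frame descriptor
    scores = pooled @ w                   # (T,) one score per frame
    weights = softmax(scores, axis=0)     # (T,) frame weights summing to 1
    return feats * weights[:, None, None, None], weights


def spatiotemporal_attention(feats, v):
    """Gate every (frame, position) location of a (T, C, H, W) feature map.

    Hypothetical sketch: a channel projection v of shape (C,) gives one
    score per location, squashed into (0, 1) with a sigmoid.
    """
    scores = np.einsum("tchw,c->thw", feats, v)   # (T, H, W) location scores
    gate = 1.0 / (1.0 + np.exp(-scores))          # sigmoid gate
    return feats * gate[:, None, :, :], gate


# Toy usage: 8 frames, 16 channels, 7x7 spatial grid of random features
rng = np.random.default_rng(0)
x = rng.standard_normal((8, 16, 7, 7))
w = rng.standard_normal(16)   # stand-in for a learned frame-scoring projection
v = rng.standard_normal(16)   # stand-in for a learned region-scoring projection

y, frame_w = temporal_attention(x, w)
z, region_g = spatiotemporal_attention(y, v)
print("frame weights:", frame_w.round(3))
print("output shape:", z.shape)
```

In this sketch the softmax makes the frame weights compete (they sum to 1), which matches the goal of emphasizing keyframes, while the independent sigmoid gate allows several regions to be highlighted at once.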

Data availability

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Imen, J., Anouar, B.K., Ihsen, A., Mohamed, A.M.: Vision-based human action recognition: an overview and real world challenges. Forens. Sci. Int. Digit. Investig. 32, 200901 (2020)

Piergiovanni, A., Ryoo, M.S.: Representation flow for action recognition. In: 2019 IEEE Conference on Computer Vision and Pattern Recognition, pp. 9937–9945. IEEE (2019)

Bobick, A., Davis, J.: The recognition of human movement using temporal templates. IEEE Trans. Pattern Anal. Mach. Intell. 23(3), 257–267 (2001)

Laptev, I.: On space-time interest points. Int. J. Comput. Vision 64, 107–123 (2005)

Fujiyoshi, H., Lipton, A.: Real-time human motion analysis by image skeletonization. In: Fourth IEEE Workshop on Applications of Computer Vision, pp. 15–21. IEEE (1998)

Qiu, Z.X., Zhang, H.B., Deng, W.M., Du, J.X., Lei, Q., Zhang, G.L.: Effective skeleton topology and semantics-guided adaptive graph convolution network for action recognition. Vis. Comput. 39(5), 2191–2203 (2022)

Simonyan, K., Zisserman, A.: Two-stream convolutional networks for action recognition in videos. In: The 27th International Conference on Neural Information Processing Systems, pp. 568–576. MIT Press (2014)

Tran, D., Bourdev, L., Fergus, R., Torresani, L., Paluri, M.: Learning spatiotemporal features with 3D convolutional networks. In: 2015 IEEE International Conference on Computer Vision, pp. 4489–4497. IEEE (2015)

Wang, L., Xiong, Y., Wang, Z., Qiao, Y., Lin, D., Tang, X., Van Gool, L.: Temporal segment networks: towards good practices for deep action recognition. In: 2016 European Conference on Computer Vision, pp. 20–36. Springer (2016)

Carreira, J., Zisserman, A.: Quo vadis, action recognition? A new model and the kinetics dataset. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition, pp. 4724–4733. IEEE (2017)

Abdelbaky, A., Aly, S.: Two-stream spatiotemporal feature fusion for human action recognition. Vis. Comput. 37(7), 1821–1835 (2021)

Fei, K., Wang, C., Zhang, J., Liu, Y., Xie, X., Tu, Z.: Flow-pose Net: an effective two-stream network for fall detection. Vis. Comput. 2022, 1–16 (2022)

Horn, B.K., Schunck, B.G.: Determining optical flow. Artif. Intell. 17(1), 185–203 (1981)

Sun, D., Roth, S., Black, M.J.: Secrets of optical flow estimation and their principles. In: 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 2432–2439. IEEE (2010)

Dosovitskiy, A., Fischer, P., Ilg, E., Häusser, P., Hazirbas, C., Golkov, V., Smagt, P.v.d., Cremers, D., Brox, T.: Flownet: Learning optical flow with convolutional networks. In: 2015 IEEE International Conference on Computer Vision, pp. 2758–2766. IEEE (2015)

Ilg, E., Mayer, N., Saikia, T., Keuper, M., Dosovitskiy, A., Brox, T.: Flownet 2.0: Evolution of optical flow estimation with deep networks. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition, pp. 1647–1655. IEEE (2017)

Zhu, Y., Lan, Z., Newsam, S., Hauptmann, A.: Hidden two-stream convolutional networks for action recognition. In: 2018 Asian Conference on Computer Vision, pp. 363–378. Springer (2019)

Crasto, N., Weinzaepfel, P., Alahari, K., Schmid, C.: Mars: Motion-augmented rgb stream for action recognition. In: 2019 IEEE Conference on Computer Vision and Pattern Recognition, pp. 7874–7883. IEEE (2019)

Lu, Y., Wang, Q., Ma, S., Geng, T., Chen, Y.V., Chen, H., Liu, D.: Transflow: Transformer as flow learner. In: 2023 IEEE Conference on Computer Vision and Pattern Recognition. pp. 18063–18073. IEEE (2023)

Wang, X., Girshick, R., Gupta, A., He, K.: Non-local neural networks. In: 2018 IEEE Conference on Computer Vision and Pattern Recognition, pp. 7794–7803. IEEE (2018)

Liu, Z., Luo, D., Wang, Y., Wang, L., Tai, Y., Wang, C., Li, J., Huang, F., Lu, T.: TEINet: Towards an efficient architecture for video recognition. In: The AAAI Conference on Artificial Intelligence, vol. 34(07), pp. 11669–11676 (2020)

Li, Y., Ji, B., Shi, X., Zhang, J., Kang, B., Wang, L.: Tea: Temporal excitation and aggregation for action recognition. In: 2020 IEEE Conference on Computer Vision and Pattern Recognition, pp. 906–915. IEEE (2020)

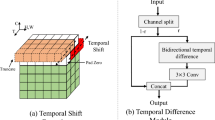

Wang, L., Tong, Z., Ji, B., Wu, G.: Tdn: Temporal difference networks for efficient action recognition. In: 2021 IEEE Conference on Computer Vision and Pattern Recognition, pp. 1895–1904. IEEE (2021)

Geng, T., Zheng, F., Hou, X., Lu, K., Qi, G.-J., Shao, L.: Spatial-temporal pyramid graph reasoning for action recognition. IEEE Trans. Image Process. 31, 5484–5497 (2022)

Sang, H., Zhao, Z., He, D.: Two-level attention model based video action recognition network. IEEE Access. 7, 118388–118401 (2019)

Zhu, Y., Liu, G.: Fine-grained action recognition using multi-view attentions. Vis. Comput. 36(9), 1771–1781 (2020)

Dong, W., Zhang, Z., Song, C., Tan, T.: Identifying the key frames: an attention-aware sampling method for action recognition. Pattern Recognit. 130, 108797 (2022)

Li, J., Wei, P., Zheng, N.: Nesting spatiotemporal attention networks for action recognition. Neurocomputing 459, 338–348 (2021)

Kim, J., Li, G., Yun, I., Jung, C., Kim, J.: Weakly-supervised temporal attention 3d network for human action recognition. Pattern Recognit. 119, 108068 (2021)

Yan, L., Wang, Q., Cui, Y., Feng, F., Quan, X., Zhang, X., Liu, D.: GL-RG: global-local representation granularity for video captioning. In: 2022 International Joint Conference on Artificial Intelligence. (2022).

Cui, Y., Yan, L., Cao, Z., Liu, D.: Tf-blender: Temporal feature blender for video object detection. In: 2021 IEEE International Conference on Computer Vision. pp. 8138–8147. IEEE (2021)

Lin, J., Gan, C., Han, S.: Tsm: Temporal shift module for efficient video understanding. In: 2019 IEEE International Conference on Computer Vision, pp. 7082–7092. IEEE (2019)

Dai, J., Qi, H., Xiong, Y., Li, Y., Zhang, G., Hu, H., Wei, Y.: Deformable convolutional networks. In: 2017 IEEE International Conference on Computer Vision, pp. 764–773. IEEE (2017)

Geng, Z., Guo, M.-H., Chen, H., Li, X., Wei, K., Lin, Z.: Is attention better than matrix decomposition? In: 2021 International Conference on Learning Representations (2021)

Hu, J., Shen, L., Sun, G.: Squeeze-and-excitation networks. In: 2018 IEEE Conference on Computer Vision and Pattern Recognition, pp. 7132–7141. IEEE (2018)

Soomro, K., Zamir, A.R., Shah, M.: UCF101: A dataset of 101 human actions classes from videos in the wild. CoRR arXiv:1212.0402 (2012)

Kuehne, H., Jhuang, H., Garrote, E., Poggio, T., Serre, T.: Hmdb: A large video database for human motion recognition. In: 2011 IEEE International Conference on Computer Vision, pp. 2556–2563. IEEE (2011)

Goyal, R., Kahou, S. E., Michalski, V., Materzynska, J., Westphal, S., Kim, H., Haenel, V., Fruend, I., Yianilos, P., Mueller-Freitag, M., Hoppe, F., Thurau, C., Bax, I., Memisevic, R.: The “something something” video database for learning and evaluating visual common sense. In: 2017 IEEE International Conference on Computer Vision, pp. 5843–5851. IEEE (2017)

Xie, Z., Sato, I., Sugiyama, M.: A diffusion theory for deep learning dynamics: stochastic gradient descent exponentially favors flat minima. In: International Conference on Learning Representations (2021)

Diba, A., Fayyaz, M., Sharma, V., Arzani, M. M., Yousefzadeh, R., Gall, J., Van Gool, L.: Spatio-temporal channel correlation networks for action classification. In: 2018 European Conference on Computer Vision, pp. 299–315. Springer (2018)

Zolfaghari, M., Singh, K., Brox, T.: Eco: Efficient convolutional network for online video understanding. In: 2018 European Conference on Computer Vision, pp. 713–730. Springer (2018)

Wang, L., Li, W., Li, W., Van Gool, L.: Appearance-and-relation networks for video classification. In: 2018 IEEE Conference on Computer Vision and Pattern Recognition, pp. 1430–1439. IEEE (2018)

Zhang, G., Huang, G., Chen, H., Pun, C.-M., Yu, Z., Ling, W.-K.: Video action recognition with key-detail motion capturing based on motion spectrum analysis and multiscale feature fusion. Vis. Comput. 39(2), 539–556 (2023)

Zhou, B., Khosla, A., Lapedriza, A., Oliva, A., Torralba, A.: Learning deep features for discriminative localization. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, pp. 2921–2929. IEEE (2016)

Acknowledgements

This work was supported by the National Key R&D Program of China (No. 2022YFB2602203).

Author information

Contributions

XS contributed to writing (review and editing) and methodology. DZ contributed to writing (draft) and software. LL carried out data management and analysis. MH and XH contributed to writing (draft) and writing (review and editing).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Song, X., Zhang, D., Liang, L. et al. Local motion feature extraction and spatiotemporal attention mechanism for action recognition. Vis Comput 40, 7747–7759 (2024). https://doi.org/10.1007/s00371-023-03205-1

DOI: https://doi.org/10.1007/s00371-023-03205-1