Abstract

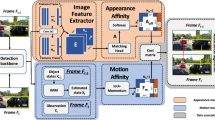

UAV tracking plays a crucial role in computer vision by enabling real-time monitoring of UAVs, enhancing safety and operational capabilities while expanding the potential applications of drone technology. Off-the-shelf deep-learning-based trackers have not been able to effectively address challenges such as occlusion, complex motion, and background clutter for UAV objects in the infrared modality. To overcome these limitations, we propose a novel tracker for UAV object tracking, named MAMC. Specifically, the proposed method first employs a data augmentation strategy to enhance the training dataset. It then introduces a candidate target association matching method to handle the interference caused by the large number of similar targets in the infrared modality. Next, it leverages a motion estimation algorithm with window jitter compensation to address the tracking instability caused by background clutter and occlusion. In addition, a simple yet effective object re-search and update strategy is used to address the complex motion and localization problems of UAV objects. Experimental results demonstrate that the proposed tracker achieves state-of-the-art performance on the Anti-UAV and LSOTB-TIR datasets.


Funding

This work was supported by the National Natural Science Foundation of China (62072232), the Key R&D Project of Jiangsu Province (BE2022138), the Fundamental Research Funds for the Central Universities (021714380026), the Program B for Outstanding Ph.D. Candidates of Nanjing University, and the Collaborative Innovation Center of Novel Software Technology and Industrialization.

Author information

Contributions

BX: Conceptualization, Methodology, Software, Writing—original draft. RH: Investigation, Methodology, Validation. JB: Project administration, Writing—original draft. TR: Polishing, Funding acquisition, Resources. GW: Supervision, Funding acquisition.

About this article

Cite this article

Xu, B., Hou, R., Bei, J. et al. Jointly modeling association and motion cues for robust infrared UAV tracking. Vis Comput 40, 8413–8424 (2024). https://doi.org/10.1007/s00371-023-03245-7

DOI: https://doi.org/10.1007/s00371-023-03245-7