Abstract

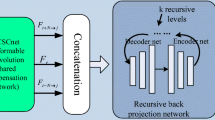

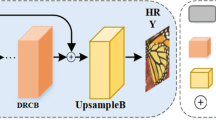

Currently, mainstream deep video super-resolution (VSR) models typically rely on deeper networks or larger receptive fields. This increases computational cost and makes network training difficult and inefficient. This paper therefore proposes a VSR model based on the fusion of deformable 3D convolution and cheap convolution (FDDCC-VSR). In FDDCC-VSR, we first divide the detailed features of each frame into dynamic features of visually moving objects and details of the static background. This division allows fewer specialized convolutions to be used for feature extraction, yielding a lightweight network that is easier to train. Furthermore, FDDCC-VSR incorporates multiple D-C CRBs (Convolutional Residual Blocks), which form a lightweight spatial attention mechanism that assists the deformable 3D convolution and lets the model focus on learning the corresponding feature details. Finally, we combine an improved bicubic interpolation with sub-pixel upsampling to raise the PSNR (Peak Signal-to-Noise Ratio) of the super-resolved output. Detailed experiments demonstrate that FDDCC-VSR outperforms state-of-the-art algorithms in both subjective visual quality and objective evaluation metrics, while requiring few parameters and little computation.
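The final reconstruction step described above pairs an interpolated low-resolution frame with sub-pixel upsampling and is evaluated by PSNR. The NumPy sketch below illustrates only those two generic pieces under stated assumptions; it is not the FDDCC-VSR implementation. `pixel_shuffle` follows the index convention of PyTorch's `nn.PixelShuffle`. `reconstruct` is a hypothetical global-residual head that adds an already-interpolated LR frame to the shuffled residual. The abstract does not specify the "improved bicubic" step, so that step is passed in precomputed as `upsampled_lr`.

```python
import numpy as np


def pixel_shuffle(x: np.ndarray, r: int) -> np.ndarray:
    """Sub-pixel rearrangement: (C*r*r, H, W) -> (C, H*r, W*r).

    Same index convention as PyTorch's nn.PixelShuffle:
    out[c, h*r + i, w*r + j] = x[c*r*r + i*r + j, h, w].
    """
    c_rr, h, w = x.shape
    if c_rr % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    c = c_rr // (r * r)
    # Split channels into (c, i, j), then move the sub-pixel offsets i, j
    # next to the spatial axes h and w before merging them.
    y = x.reshape(c, r, r, h, w).transpose(0, 3, 1, 4, 2)
    return y.reshape(c, h * r, w * r)


def reconstruct(residual_feats: np.ndarray, upsampled_lr: np.ndarray, r: int) -> np.ndarray:
    """Hypothetical global-residual head: sub-pixel residual + interpolated LR frame.

    `upsampled_lr` is an assumption standing in for the (improved) bicubic
    upsampling of the reference LR frame, which the abstract does not detail.
    """
    return pixel_shuffle(residual_feats, r) + upsampled_lr


def psnr(ref: np.ndarray, est: np.ndarray, max_val: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(max_val**2 / MSE)."""
    mse = np.mean((ref.astype(np.float64) - est.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))
```

For example, `pixel_shuffle(np.arange(4.0).reshape(4, 1, 1), 2)` turns four 1x1 channels into one 2x2 map. Doing the upsampling as a channel-to-space rearrangement keeps every convolution at low resolution, which is why sub-pixel layers are a common choice in lightweight SR networks.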

Data availability

No datasets were generated or analyzed during the current study.

Acknowledgements

This research was supported by the National Natural Science Foundation of China (61573182, 62073164), and by the Fundamental Research Funds for the Central Universities (NS2020025).

Author information

Contributions

Xiaohu Wang contributed significantly to analysis and manuscript preparation, and performed the data analyses; Xin Yang contributed to the conception of the study, and wrote the manuscript; Hengrui Li performed the experiment; Tao Li helped perform the analysis with constructive discussions.

Ethics declarations

Conflict of interest

Xiaohu Wang declares that he has no conflict of interest. Xin Yang declares that he has no conflict of interest. Hengrui Li declares that she has no conflict of interest. Tao Li declares that he has no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, X., Yang, X., Li, H. et al. FDDCC-VSR: a lightweight video super-resolution network based on deformable 3D convolution and cheap convolution. Vis Comput 41, 3581–3593 (2025). https://doi.org/10.1007/s00371-024-03621-x

DOI: https://doi.org/10.1007/s00371-024-03621-x