Abstract

Noise is ubiquitous in sensorimotor interactions and contaminates the information provided to the central nervous system (CNS) for motor learning. An interesting question is how the CNS manages motor learning with imprecise information. Integrating ideas from reinforcement learning and adaptive optimal control, this paper develops a novel computational mechanism to explain the robustness of human motor learning to imprecise information caused by the control-dependent noise inherent in sensorimotor systems. Starting from an initial admissible control policy, in each learning trial the mechanism collects noisy sensory data (corrupted by the control-dependent noise), uses them to form an imprecise evaluation of the performance of the current policy, and then constructs an updated policy based on that imprecise evaluation. As the number of learning trials increases, the generated policies provably converge to a (potentially small) neighborhood of the optimal policy under mild conditions, despite the imprecise information in the learning process. The mechanism synthesizes the policies directly from the sensory data, without identifying an internal forward model. Our preliminary computational results on two classic arm reaching tasks are in line with experimental observations reported in the literature. The model-free control principle proposed in the paper sheds more light on the inherent robustness of human sensorimotor systems to imprecise information, especially control-dependent noise, in the CNS.

Notes

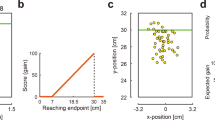

To construct sufficiently accurate data matrices \(\hat{\varPhi }_{i,M,N}\) and \(\hat{\varPsi }_{i,M,N}\) in Step (2) of Algorithm 3, the stochastic differential equation (23) needs to be solved over a long time interval, which is time-consuming on an ordinary laptop. We avoid this difficulty by directly computing \(\varPhi _{i,M}\) and \(\varPsi _{i,M}\) based on (23) and setting \(\hat{\varPhi }_{i,M,N} = \varPhi _{i,M} + \omega _1\) and \(\hat{\varPsi }_{i,M,N}=\varPsi _{i,M} + \omega _2\), where each element of \(\omega _1\) and \(\omega _2\) is drawn from a uniform distribution: over \([-10,10]\) in NF and VF, and over \([-1,1]\) in DF. All the simulations in this section are conducted in this way.
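The perturbation step described in this note can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `perturb_data_matrices`, the placeholder matrices, and the random seed are hypothetical, and the exact matrices \(\varPhi _{i,M}\) and \(\varPsi _{i,M}\) would in practice be computed from (23), which is not reproduced here.

```python
import numpy as np

def perturb_data_matrices(phi, psi, half_width, rng):
    """Add i.i.d. Uniform[-half_width, half_width] noise to every entry.

    phi, psi   : exact data matrices (placeholders for Phi_{i,M}, Psi_{i,M})
    half_width : 10.0 for the NF and VF settings, 1.0 for DF, per the note
    rng        : a numpy random Generator
    """
    omega_1 = rng.uniform(-half_width, half_width, size=phi.shape)
    omega_2 = rng.uniform(-half_width, half_width, size=psi.shape)
    return phi + omega_1, psi + omega_2

# Hypothetical placeholder matrices, only to make the sketch runnable.
rng = np.random.default_rng(0)
phi = np.eye(3)
psi = np.ones((3, 2))
phi_hat, psi_hat = perturb_data_matrices(phi, psi, 10.0, rng)
```

Drawing the perturbation entrywise keeps the noise bounded, so every entry of the perturbed matrices stays within `half_width` of its exact value.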

References

Acerbi L, Vijayakumar S, Wolpert DM (2017) Target uncertainty mediates sensorimotor error correction. PLoS ONE 12(1):1–21

Åström KJ, Wittenmark B (1995) Adaptive control, 2nd edn. Addison-Wesley, Reading

Bach DR, Dolan RJ (2012) Knowing how much you don’t know: a neural organization of uncertainty estimates. Nat Rev Neurosci 13(8):572–586

Balas G, Chiang R, Packard A, Safonov M (2007) Robust control toolbox user’s guide. The MathWorks Inc, Tech Rep

Bertsekas DP (2011) Approximate policy iteration: a survey and some new methods. J Control Theory Appl 9(3):310–335

Bertsekas DP (2019) Reinforcement learning and optimal control. Athena Scientific, Belmont

Bian T, Jiang ZP (2019) Continuous-time robust dynamic programming. SIAM J Control Optim 57(6):4150–4174

Bian T, Jiang Y, Jiang ZP (2016) Adaptive dynamic programming for stochastic systems with state and control dependent noise. IEEE Trans Autom Control 61(12):4170–4175

Bian T, Wolpert DM, Jiang ZP (2020) Model-free robust optimal feedback mechanisms of biological motor control. Neural Comput 32(3):562–595

Braun DA, Aertsen A, Wolpert DM, Mehring C (2009) Learning optimal adaptation strategies in unpredictable motor tasks. J Neurosci 29(20):6472–6478

Burdet E, Osu R, Franklin DW, Milner TE, Kawato M (2001) The central nervous system stabilizes unstable dynamics by learning optimal impedance. Nature 414(6862):446–449

Burdet E, Tee KP, Mareels I, Milner TE, Chew CM, Franklin DW, Osu R, Kawato M (2006) Stability and motor adaptation in human arm movements. Biol Cybern 94(1):20–32

Česonis J, Franklin DW (2020) Time-to-target simplifies optimal control of visuomotor feedback responses. eNeuro 7(2):ENEURO.0514–19.2020

Česonis J, Franklin DW (2021) Mixed-horizon optimal feedback control as a model of human movement. arXiv preprint arXiv:2104.06275

Cluff T, Scott SH (2015) Apparent and actual trajectory control depend on the behavioral context in upper limb motor tasks. J Neurosci 35(36):12465–12476

Crevecoeur F, Scott SH, Cluff T (2019) Robust control in human reaching movements: a model-free strategy to compensate for unpredictable disturbances. J Neurosci 39(41):8135–8148

Crevecoeur F, Thonnard JL, Lefèvre P (2020) A very fast time scale of human motor adaptation: within movement adjustments of internal representations during reaching. eNeuro 7(1):1–16

d’Acremont M, Lu ZL, Li X, Van der Linden M, Bechara A (2009) Neural correlates of risk prediction error during reinforcement learning in humans. NeuroImage 47(4):1929–1939

Fiete IR, Fee MS, Seung HS (2007) Model of birdsong learning based on gradient estimation by dynamic perturbation of neural conductances. J Neurophysiol 98(4):2038–2057

Fitts PM (1954) The information capacity of the human motor system in controlling the amplitude of movement. J Exp Psychol 47(6):381

Flash T, Hogan N (1985) The coordination of arm movements: an experimentally confirmed mathematical model. J Neurosci 5(7):1688–1703

Franklin DW, Wolpert DM (2011) Computational mechanisms of sensorimotor control. Neuron 72(3):425–442

Franklin DW, Burdet E, Osu R, Kawato M, Milner TE (2003) Functional significance of stiffness in adaptation of multijoint arm movements to stable and unstable dynamics. Exp Brain Res 151(2):145–157

Franklin DW, Burdet E, Peng Tee K, Osu R, Chew CM, Milner TE, Kawato M (2008) CNS learns stable, accurate, and efficient movements using a simple algorithm. J Neurosci 28(44):11165–11173

Gaveau J, Berret B, Demougeot L, Fadiga L, Pozzo T, Papaxanthis C (2014) Energy-related optimal control accounts for gravitational load: comparing shoulder, elbow, and wrist rotations. J Neurophysiol 111(1):4–16

Gomi H, Kawato M (1996) Equilibrium-point control hypothesis examined by measured arm stiffness during multijoint movement. Science 272(5258):117–120

Gravell BJ, Esfahani PM, Summers TH (2020) Robust control design for linear systems via multiplicative noise. IFAC-PapersOnLine 53(2):7392–7399

Hadjiosif AM, Krakauer JW, Haith AM (2021) Did we get sensorimotor adaptation wrong? Implicit adaptation as direct policy updating rather than forward-model-based learning. J Neurosci 41(12):2747–2761

Hahnloser RHR, Kozhevnikov AA, Fee MS (2002) An ultra-sparse code underlies the generation of neural sequences in a songbird. Nature 419(6902):65–70

Haith AM, Krakauer JW (2013) Model-based and model-free mechanisms of human motor learning. In: Richardson MJ, Riley MA, Shockley K (eds) Progress in motor control. Springer, New York, pp 1–21

Harris CM, Wolpert DM (1998) Signal-dependent noise determines motor planning. Nature 394(6695):780–784

Huang VS, Haith A, Mazzoni P, Krakauer JW (2011) Rethinking motor learning and savings in adaptation paradigms: model-free memory for successful actions combines with internal models. Neuron 70(4):787–801

Huh D (2012) Rethinking optimal control of human movements. PhD thesis, UC San Diego

Huh D, Todorov E, Sejnowski T et al (2010) Infinite horizon optimal control framework for goal directed movements. In: Proceedings of the 9th annual symposium on advances in computational motor control, vol 12

Izawa J, Shadmehr R (2011) Learning from sensory and reward prediction errors during motor adaptation. PLoS Comput Biol 7(3):e1002012

Jiang Y, Jiang ZP (2014) Adaptive dynamic programming as a theory of sensorimotor control. Biol Cybern 108(4):459–473

Jiang Y, Jiang ZP (2015) A robust adaptive dynamic programming principle for sensorimotor control with signal-dependent noise. J Syst Sci Complex 28(2):261–288

Jiang Y, Jiang ZP (2017) Robust adaptive dynamic programming. Wiley-IEEE Press, Hoboken

Jiang Z, Bian T, Gao W (2020) Learning-based control: a tutorial and some recent results. Found Trends Syst Control 8:176–284

Kadiallah A, Liaw G, Kawato M, Franklin DW, Burdet E (2011) Impedance control is selectively tuned to multiple directions of movement. J Neurophysiol 106(5):2737–2748

Kamalapurkar R, Walters P, Rosenfeld J, Dixon W (2018) Reinforcement learning for optimal feedback control: a Lyapunov-based approach. Springer, Berlin

Khalil HK (2002) Nonlinear systems, 3rd edn. Prentice-Hall, Upper Saddle River

Kiumarsi B, Vamvoudakis KG, Modares H, Lewis FL (2018) Optimal and autonomous control using reinforcement learning: a survey. IEEE Trans Neural Netw Learn Syst 29(6):2042–2062

Kleinman D (1968) On an iterative technique for Riccati equation computations. IEEE Trans Autom Control 13(1):114–115

Kleinman D (1969) On the stability of linear stochastic systems. IEEE Trans Autom Control 14(4):429–430

Körding KP, Wolpert DM (2004) Bayesian integration in sensorimotor learning. Nature 427(6971):244–247

Körding KP, Wolpert DM (2006) Bayesian decision theory in sensorimotor control. Trends Cognit Sci 10(7):319–326

Krakauer JW, Hadjiosif AM, Xu J, Wong AL, Haith AM (2019) Motor learning. American Cancer Society, Atlanta, pp 613–663

Li L, Imamizu H, Tanaka H (2015) Is movement duration predetermined in visually guided reaching? A comparison of finite-and infinite-horizon optimal feedback control. In: The abstracts of the international conference on advanced mechatronics: toward evolutionary fusion of IT and mechatronics: ICAM 2015.6. The Japan Society of Mechanical Engineers, pp 247–248

Liberzon D (2012) Calculus of variations and optimal control theory: a concise introduction. Princeton University Press, Princeton

Liu D, Todorov E (2007) Evidence for the flexible sensorimotor strategies predicted by optimal feedback control. J Neurosci 27(35):9354–9368

Magnus JR, Neudecker H (2007) Matrix differential calculus with applications in statistics and econometrics. Wiley, New York

Mistry M, Theodorou E, Schaal S, Kawato M (2013) Optimal control of reaching includes kinematic constraints. J Neurophysiol 110(1):1–11

Morasso P (1981) Spatial control of arm movements. Exp Brain Res 42(2):223–227

Mori T, Fukuma N, Kuwahara M (1986) On the Lyapunov matrix differential equation. IEEE Trans Autom Control 31(9):868–869

Mussa-Ivaldi F, Hogan N, Bizzi E (1985) Neural, mechanical, and geometric factors subserving arm posture in humans. J Neurosci 5(10):2732–2743

Orbán G, Wolpert DM (2011) Representations of uncertainty in sensorimotor control. Curr Opin Neurobiol 21(4):629–635

Pang B, Jiang ZP (2020) Adaptive optimal control of linear periodic systems: an off-policy value iteration approach. IEEE Trans Autom Control 66(2):888–894

Pang B, Jiang ZP (2021) Robust reinforcement learning: a case study in linear quadratic regulation. In: The 35th AAAI conference on artificial intelligence (AAAI). pp 9303–9311

Pang B, Bian T, Jiang ZP (2019) Adaptive dynamic programming for finite-horizon optimal control of linear time-varying discrete-time systems. Control Theory Technol 17(1):18–29

Pang B, Jiang ZP, Mareels I (2020) Reinforcement learning for adaptive optimal control of continuous-time linear periodic systems. Automatica 118:109035

Pang B, Bian T, Jiang ZP (2021) Robust policy iteration for continuous-time linear quadratic regulation. IEEE Trans Autom Control. https://doi.org/10.1109/TAC.2021.3085510

Parker A, Derrington A, Blakemore C, van Beers RJ, Baraduc P, Wolpert DM (2002) Role of uncertainty in sensorimotor control. Philos Trans R Soc Lond Ser B Biol Sci 357(1424):1137–1145

Pavliotis GA (2014) Stochastic processes and applications. Springer, New York

Qian N, Jiang Y, Jiang ZP, Mazzoni P (2013) Movement duration, Fitts’s law, and an infinite-horizon optimal feedback control model for biological motor systems. Neural Comput 25(3):697–724

Schmidt RA, Lee TD, Winstein C, Wulf G, Zelaznik HN (2018) Motor control and learning: a behavioral emphasis. In: Human kinetics

Selen LPJ, Franklin DW, Wolpert DM (2009) Impedance control reduces instability that arises from motor noise. J Neurosci 29(40):12606–12616

Shadmehr R, Mussa-Ivaldi S (2012) Biological learning and control: how the brain builds representations, predicts events, and makes decisions. MIT Press, Cambridge

Shmuelof L, Huang VS, Haith AM, Delnicki RJ, Mazzoni P, Krakauer JW (2012) Overcoming motor forgetting through reinforcement of learned actions. J Neurosci 32(42):14617–14621a

Sternad D (2018) It’s not (only) the mean that matters: variability, noise and exploration in skill learning. Curr Opin Behav Sci 20:183–195

Sternad D, Abe MO, Hu X, Müller H (2011) Neuromotor noise, error tolerance and velocity-dependent costs in skilled performance. PLoS Comput Biol 7(9):1–15

Sutton RS, Barto AG (2018) Reinforcement learning: an introduction, 2nd edn. MIT Press, Cambridge

Thorp EB, Kording KP, Mussa-Ivaldi FA (2017) Using noise to shape motor learning. J Neurophysiol 117(2):728–737

Todorov E (2005) Stochastic optimal control and estimation methods adapted to the noise characteristics of the sensorimotor system. Neural Comput 17(5):1084–1108

Todorov E, Jordan MI (2002) Optimal feedback control as a theory of motor coordination. Nat Neurosci 5(11):1226–1235

Tsitsiklis JN (2002) On the convergence of optimistic policy iteration. J Mach Learn Res 3:59–72

Tumer EC, Brainard MS (2007) Performance variability enables adaptive plasticity of ‘crystallized’ adult birdsong. Nature 450(7173):1240–1244

Ueyama Y (2014) Mini-max feedback control as a computational theory of sensorimotor control in the presence of structural uncertainty. Front Comput Neurosci 8:1–14

Uno Y, Kawato M, Suzuki R (1989) Formation and control of optimal trajectory in human multijoint arm movement. Biol Cybern 61(2):89–101

Vaswani PA, Shmuelof L, Haith AM, Delnicki RJ, Huang VS, Mazzoni P, Shadmehr R, Krakauer JW (2015) Persistent residual errors in motor adaptation tasks: reversion to baseline and exploratory escape. J Neurosci 35(17):6969–6977

Willems JL, Willems JC (1976) Feedback stabilizability for stochastic systems with state and control dependent noise. Automatica 12(3):277–283

Wolpert DM (2007) Probabilistic models in human sensorimotor control. Hum Mov Sci 26(4):511–524

Wolpert D, Ghahramani Z, Jordan M (1995) An internal model for sensorimotor integration. Science 269(5232):1880–1882

Wu HG, Miyamoto YR, Castro LNG, Ölveczky BP, Smith MA (2014) Temporal structure of motor variability is dynamically regulated and predicts motor learning ability. Nat Neurosci 17(2):312–321

Yeo SH, Franklin DW, Wolpert DM (2016) When optimal feedback control is not enough: feedforward strategies are required for optimal control with active sensing. PLoS Comput Biol 12:1–22

Zhou K, Doyle JC (1998) Essentials of robust control, vol 104. Prentice Hall, Upper Saddle River

Zhou SH, Oetomo D, Tan Y, Burdet E, Mareels I (2011) Human motor learning through iterative model reference adaptive control. IFAC Proc Vol 44(1):2883–2888

Zhou SH, Tan Y, Oetomo D, Freeman C, Burdet E, Mareels I (2017) Modeling of endpoint feedback learning implemented through point-to-point learning control. IEEE Trans Control Syst Technol 25(5):1576–1585

Additional information

Communicated by Benjamin Lindner.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work has been supported in part by the U.S. National Science Foundation under Grants ECCS-1501044 and EPCN-1903781.

Appendix A Proof of Theorem 2

Let \(\epsilon = \epsilon _1/2\) in Theorem 1. We first show that for each stabilizing \(\hat{K}_i\in {\mathbb {R}}^{m\times n}\), there exist an integer \(N_i\) and a constant \(s_i>0\) such that, if Assumption 1 is satisfied, then for any \(N>N_i\) and \(s>s_i\), almost surely

By definition,

where \(C_0>0\) is a constant. Then, we only need to show that for any \(N>N_i\) and \(s>s_i\),

Vectorizing (15) yields

where \(\bar{p}_i={{\,\mathrm{vec}\,}}(\bar{P}_i)\), i.e., the vectorization of matrix \(\bar{P}_i\),

and for \(Y\in {\mathbb {S}}^n\), \(D_n\in {\mathbb {R}}^{n^2\times \frac{1}{2}n(n+1)}\) is the unique matrix with full column rank (Magnus and Neudecker 2007, Page 57) such that
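For reference, a matrix with these dimensions and full column rank is known in Magnus and Neudecker's text as the duplication matrix, which maps the half-vectorization of a symmetric matrix to its full vectorization, \(D_n {{\,\mathrm{vech}\,}}(Y) = {{\,\mathrm{vec}\,}}(Y)\). The sketch below builds it numerically under that standard definition. The function names `duplication_matrix` and `vech` are hypothetical, and the paper's own half-vectorization may use a different ordering or scaling of the off-diagonal entries.

```python
import numpy as np

def duplication_matrix(n):
    """Return D_n (n^2 x n(n+1)/2) with D_n @ vech(Y) == vec(Y) for symmetric Y.

    vec stacks the columns of Y (column-major order); vech stacks the
    lower-triangular part of Y column by column.
    """
    m = n * (n + 1) // 2
    D = np.zeros((n * n, m))
    col = 0
    for j in range(n):
        for i in range(j, n):
            D[j * n + i, col] = 1.0  # position of Y[i, j] in vec(Y)
            D[i * n + j, col] = 1.0  # position of Y[j, i] in vec(Y)
            col += 1
    return D

def vech(Y):
    """Stack the lower-triangular part of Y column by column."""
    n = Y.shape[0]
    return np.concatenate([Y[j:, j] for j in range(n)])

# Check the defining identity on a small symmetric matrix.
Y = np.array([[1.0, 2.0, 3.0],
              [2.0, 4.0, 5.0],
              [3.0, 5.0, 6.0]])
D = duplication_matrix(3)
identity_holds = np.allclose(D @ vech(Y), Y.flatten(order="F"))
```

Because each column of `D` has its nonzero entries in rows that no other column touches, the columns are linearly independent, which is the full-column-rank property used in the proof.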

Vectorizing (18) yields

where \(\check{p}_i = {{\,\mathrm{vec}\,}}(\check{P}_i)\). Since (8), (9), (28) and (15) are mutually equivalent under Assumption 1, by (17)

Since \(\hat{K}_i\) is stabilizing, by continuity, there exists an integer \(N_{i,1}\), such that for any \(N>N_{i,1}\), \({\mathcal {T}}(\hat{\varPhi }_{i,M,N},\hat{\varPsi }_{i,M,N},\hat{K}_i)\) is Hurwitz. Then, we have

where

By continuity of matrix inversion, (17) and (31), there exist an integer \(N_{i,2}\ge N_{i,1}\) and \(s_{i}>0\), such that for any \(N>N_{i,2}\) and \(s>s_{i}\)

and

Setting \(N_i = N_{i,2}\) completes the proof of (27). By Theorem 1, there exists an integer \(\bar{I}\), such that if

then \(\Vert \hat{K}_{\bar{I}} - K^*\Vert _F<\epsilon _1\). Condition (32) can be satisfied by setting

This completes the proof.
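The qualitative conclusion, that policy iteration with an imprecise policy evaluation still ends up in a small neighborhood of \(K^*\), can be illustrated numerically. The sketch below is a deliberately simplified stand-in and not the algorithm analyzed above: it uses a deterministic linear-quadratic problem without control-dependent noise, a model-based Lyapunov solve for policy evaluation, and a bounded perturbation added directly to the evaluated cost matrix. The double-integrator system, the weights, the noise level, and all function names are assumptions chosen for illustration.

```python
import numpy as np

def evaluate_policy(A, B, Q, R, K):
    """Solve (A-BK)^T P + P (A-BK) + Q + K^T R K = 0 for P by vectorization."""
    n = A.shape[0]
    Ac = A - B @ K
    Qk = Q + K.T @ R @ K
    I = np.eye(n)
    # Column-major vec: vec(Ac^T P) = (I kron Ac^T) vec(P),
    #                   vec(P Ac)   = (Ac^T kron I) vec(P).
    L = np.kron(I, Ac.T) + np.kron(Ac.T, I)
    p = np.linalg.solve(L, -Qk.flatten(order="F"))
    P = p.reshape((n, n), order="F")
    return 0.5 * (P + P.T)  # remove round-off asymmetry

def policy_iteration(A, B, Q, R, K0, iters, noise=0.0, rng=None):
    """Policy iteration; if noise > 0, perturb each evaluated P entrywise."""
    K = K0
    for _ in range(iters):
        P = evaluate_policy(A, B, Q, R, K)
        if noise > 0.0:
            E = rng.uniform(-noise, noise, size=P.shape)
            P = P + 0.5 * (E + E.T)  # symmetric perturbation, entries within noise
        K = np.linalg.solve(R, B.T @ P)  # policy improvement: K = R^{-1} B^T P
    return K

# Assumed example: double integrator with identity weights.
A = np.array([[0.0, 1.0], [0.0, 0.0]])
B = np.array([[0.0], [1.0]])
Q = np.eye(2)
R = np.array([[1.0]])
K0 = np.array([[1.0, 1.0]])  # stabilizing initial gain

K_star = policy_iteration(A, B, Q, R, K0, iters=50)
K_noisy = policy_iteration(A, B, Q, R, K0, iters=50, noise=0.01,
                           rng=np.random.default_rng(0))
gap = np.linalg.norm(K_noisy - K_star)  # Frobenius norm of the gain error
```

For this example the exact iteration converges to \(K^* = [1, \sqrt{3}]\), while the perturbed iteration stays close to it, with a gap on the order of the injected perturbation rather than zero.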

Cite this article

Pang, B., Cui, L. & Jiang, ZP. Human motor learning is robust to control-dependent noise. Biol Cybern 116, 307–325 (2022). https://doi.org/10.1007/s00422-022-00922-z

DOI: https://doi.org/10.1007/s00422-022-00922-z