Abstract

The installation of the LOEWE-CSC (http://csc.uni-frankfurt.de/csc/?51) supercomputer at the Goethe University in Frankfurt lead to the development of a Linpack which can fully utilize the installed AMD Cypress GPUs. At its core, a fast DGEMM for combined GPU and CPU usage was created. The DGEMM library is tuned to hide all DMA transfer times and thus maximize the GPU load. A work stealing scheduler was implemented to add the remaining CPU resources to the DGEMM. On the GPU, the DGEMM achieves 497 GFlop/s (90.9% of the theoretical peak). Combined with the 24-core Magny-Cours CPUs, 623 GFlop/s (83.6% of the peak) are achieved.

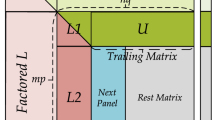

The HPL (http://www.netlib.org/benchmark/hpl/algorithm.html) benchmark was modified to perform well with one MPI-process per node. The modifications include multi-threading, vectorization, use of the GPU DGEMM, cache optimizations, and a new Lookahead algorithm. A Linpack performance of 70% theoretical peak is achieved and this performance scales linearly to hundreds of nodes.

Similar content being viewed by others

References

Advanced Micro Devices: AMD stream computing guide. URL http://developer.amd.com/gpu/ATIStreamSDK/assets/ATI_Stream_SDK_OpenCL_Programming_Guide.pdf

Amdahl G (1967) Validity of the single processor approach to achieving large-scale computing capabilities. In: AFIPS conference proceedings, vol 30, pp 483–485

Drepper U (2007) What every programmer should know about memory. URL http://www.akkadia.org/drepper/cpumemory.pdf

Goethe University of Frankfurt Center for Scientific Computing: LOEWE-CSC cluster. URL http://csc.uni-frankfurt.de/csc/?51

Intel Corporation (2009) Intel threading building blocks reference manual. URL http://software.intel.com/sites/products/documentation/hpc/tbb/reference.pdf

Nakasato N (2010) A fast GEMM implementation on a cypress GPU. URL http://www.dcs.warwick.ac.uk/~sdh/pmbs10/pmbs10/Workshop_Programme_files/fastgemm.pdf

NVIDIA Corporation: CUBLAS library. URL http://developer.download.nvidia.com/compute/cuda/1_0/CUBLAS_Library_1.0.pdf

Rohr D, Kretz M, Bach M (2010) Technical report, CALDGEMM and HPL. URL http://code.compeng.uni-frankfurt.de/attachments/10/techreport.pdf

Texas Advanced Computing Center: GotoBLAS basic linear algebra library. URL http://www.tacc.utexas.edu/tacc-projects/

University of Tennesse: High performance Linpack algorithm. URL http://www.netlib.org/benchmark/hpl/algorithm.html

Volkov V, Demmel J (2008) Benchmarking GPUs to tune dense linear algebra. In: SC 08 ACM/IEEE conference on supercomputing proceedings, pp 1–11

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Bach, M., Kretz, M., Lindenstruth, V. et al. Optimized HPL for AMD GPU and multi-core CPU usage. Comput Sci Res Dev 26, 153–164 (2011). https://doi.org/10.1007/s00450-011-0161-5

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00450-011-0161-5