Abstract

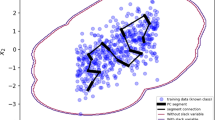

A simple yet effective learning algorithm, k locally constrained line (k-LCL), is presented for pattern classification. In k-LCL, any two prototypes of the same class are extended to a constrained line (CL), which substantially increases the representational capacity of the training set. Because the length of each CL is adjustable, k-LCL largely avoids the “intersecting” of training subspaces that occurs in most traditional feature classifiers. Moreover, to speed up computation, k-LCL classifies an unknown sample using only its local CLs in each class. Experimental results on both synthetic and real-world benchmark data sets show that the proposed method is more accurate and more efficient than most existing feature line methods.
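The idea summarized in the abstract can be illustrated with a minimal Python sketch. It is not the authors' formulation: the abstract does not define how a CL is constrained or how "local" CLs are selected, so the sketch assumes that a CL is the line through two same-class prototypes with its position parameter clamped to `[-ext, 1 + ext]`, and that the local CLs of a class are those formed by the `k` prototypes nearest to the query. The names `constrained_line_distance`, `klcl_classify`, `ext`, and `k` are hypothetical.

```python
from itertools import combinations
from math import sqrt


def euclid(p, q):
    """Euclidean distance between two points given as sequences."""
    return sqrt(sum((pi - qi) ** 2 for pi, qi in zip(p, q)))


def constrained_line_distance(q, a, b, ext=0.5):
    """Distance from q to the line through a and b, with the position
    parameter t clamped to [-ext, 1 + ext].

    ASSUMPTION: this clamping is an illustrative stand-in for the
    adjustable-length constraint; the abstract gives no formula.
    """
    ab = [bi - ai for ai, bi in zip(a, b)]
    denom = sum(c * c for c in ab)
    if denom == 0.0:
        # Degenerate pair (identical prototypes): fall back to point distance.
        return euclid(q, a)
    # Unconstrained projection parameter of q onto the line a + t * (b - a).
    t = sum((qi - ai) * c for qi, ai, c in zip(q, a, ab)) / denom
    # Constrain how far the line may extend beyond the two prototypes.
    t = max(-ext, min(1.0 + ext, t))
    projection = [ai + t * c for ai, c in zip(a, ab)]
    return euclid(q, projection)


def klcl_classify(q, prototypes, k=3, ext=0.5):
    """Return the label whose nearest local constrained line is closest to q.

    prototypes maps each class label to a list of points.
    ASSUMPTION: "local" means the k prototypes of each class nearest to q.
    """
    best_label, best_dist = None, float("inf")
    for label, points in prototypes.items():
        local = sorted(points, key=lambda p: euclid(q, p))[:k]
        if not local:
            continue
        if len(local) == 1:
            d = euclid(q, local[0])
        else:
            d = min(
                constrained_line_distance(q, a, b, ext)
                for a, b in combinations(local, 2)
            )
        if d < best_dist:
            best_label, best_dist = label, d
    return best_label


if __name__ == "__main__":
    train = {
        "A": [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)],
        "B": [(5.0, 5.0), (6.0, 5.0), (5.0, 6.0)],
    }
    print(klcl_classify((0.5, 0.1), train))
```

Under these assumptions, restricting each class to `k` prototypes limits the number of candidate lines to `k * (k - 1) / 2` per class, which is where the speed-up over examining every prototype pair would come from.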

Acknowledgments

The authors would like to thank the anonymous referees as well as the guest editors for their helpful comments and suggestions. This research has been supported by the National Key Basic Research and Development Program of China (Grant No. 2006CB701303) and the National High Technology Research and Development Program of China (Grant No. 2006AA12Z105).

Cite this article

Qing, J., Huo, H. & Fang, T. Pattern classification based on k locally constrained line. Soft Comput 15, 703–712 (2010). https://doi.org/10.1007/s00500-010-0602-2

DOI: https://doi.org/10.1007/s00500-010-0602-2