Abstract

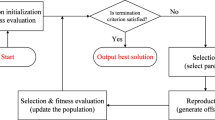

Evolutionary algorithms (EAs) are particularly well suited to solving problems for which little information is available. From this standpoint, estimation of distribution algorithms (EDAs), which guide the search by using probabilistic models of the population, have brought a new view to evolutionary computation. While solving a given problem with an EDA, the user has access to a set of models that reveal probabilistic dependencies between variables, an important source of information about the problem. However, as the complexity of the models increases, so does the chance of overfitting and, consequently, of reducing model interpretability. This paper investigates the relationship between the probabilistic models learned by the Bayesian optimization algorithm (BOA) and the underlying problem structure. The purpose of the paper is threefold. First, model building in BOA is analyzed to understand how the problem structure is learned. Second, it is shown how the selection operator can lead to model overfitting in Bayesian EDAs. Third, the scoring metric that guides the search for an adequate model structure is modified to take into account the non-uniform distribution of the mating pool generated by tournament selection. Overall, this paper contributes towards understanding and improving model accuracy in BOA, providing more interpretable models to assist efficiency enhancement techniques and human researchers.
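
The non-uniform mating pool mentioned above can be illustrated with a minimal sketch. It is not the paper's implementation: it assumes tournaments whose contestants are drawn uniformly with replacement, distinct fitness values, and hypothetical helper names (`tournament_selection`, `expected_copies`). Under those assumptions, the individual of rank r (1 = worst, n = best) wins a tournament of size s with probability (r^s - (r-1)^s) / n^s, so better individuals appear in the mating pool more often than worse ones.

```python
import random
from collections import Counter


def tournament_selection(fitness, pool_size, s, rng):
    """Fill a mating pool with the winners of `pool_size` tournaments of size `s`.

    Assumption: contestants are drawn uniformly with replacement.
    Returns the indices of the selected individuals.
    """
    n = len(fitness)
    pool = []
    for _ in range(pool_size):
        contestants = [rng.randrange(n) for _ in range(s)]
        pool.append(max(contestants, key=lambda i: fitness[i]))
    return pool


def expected_copies(n, s, pool_size):
    """Expected number of copies for ranks 1 (worst) to n (best).

    Assumes distinct fitness values and tournaments with replacement.
    """
    return [pool_size * (r**s - (r - 1) ** s) / n**s for r in range(1, n + 1)]


rng = random.Random(0)
n, s = 8, 2
fitness = list(range(n))  # individual i has rank i + 1
pool = tournament_selection(fitness, pool_size=n, s=s, rng=rng)
counts = Counter(pool)
expected = expected_copies(n, s, pool_size=n)

# Compare observed copies in one sampled pool with the expectation per rank.
for i in range(n):
    print(f"rank {i + 1}: observed {counts[i]}, expected {expected[i]:.3f}")
```

With s = 1 the expectation is one copy per individual, which is the uniform case; larger tournaments skew the pool further towards the top ranks. A model learned from such a pool sees the best solutions as repeated samples, which is the kind of non-uniformity a scoring metric may need to account for.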

Notes

Given a sufficient population size (learning data set).

Acknowledgments

This work was sponsored by the Portuguese Foundation for Science and Technology under grants SFRH-BD-16980-2004 and PTDC-EIA-67776-2006, the BBSRC under grant BB/D0196131, the Air Force Office of Scientific Research, Air Force Materiel Command, USAF, under grant FA9550-06-1-0096, and the National Science Foundation under NSF CAREER grant ECS-0547013. Fernando Lobo is also a member of the Center for Environmental and Sustainability Research (CENSE) and acknowledges its support. The work was also supported by the High Performance Computing Collaboratory sponsored by Information Technology Services, the Research Award and the Research Board at the University of Missouri in St. Louis. The US Government is authorized to reproduce and distribute reprints for government purposes notwithstanding any copyright notation thereon.

Additional information

The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of the Air Force Office of Scientific Research, the National Science Foundation, or the US Government.

Cite this article

Lima, C.F., Lobo, F.G., Pelikan, M. et al. Model accuracy in the Bayesian optimization algorithm. Soft Comput 15, 1351–1371 (2011). https://doi.org/10.1007/s00500-010-0675-y
