Abstract

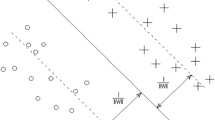

A method for the sparse solution of \(\varepsilon \)-tube support vector regression machines is presented. The proposed method achieves a high accuracy-versus-complexity ratio and allows the user to adjust the complexity of the resulting models. Sparsity is guaranteed by limiting the number of training data points used by the support vector regression method. Following the active learning principle, each training data point is selected according to its influence on the accuracy of the model. The user can adjust the training time by choosing how often the hyper-parameters of the algorithm are optimised. The advantages of the proposed method are illustrated on several examples, and its performance is compared with that of several state-of-the-art algorithms on well-known benchmark data sets. The application of the proposed algorithm to the black-box modelling of electronic circuits is also demonstrated. The experiments show that it is possible to reduce the number of support vectors and significantly improve the accuracy-versus-complexity ratio of \(\varepsilon \)-tube support vector regression.
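
The abstract only outlines the procedure. As a rough illustration of its two ingredients (an \(\varepsilon \)-tube SVR whose support vectors are restricted to a small selected subset, and an active-learning loop that adds points according to their effect on accuracy), the following Python sketch rests on several assumptions that are not taken from the paper: NumPy, an RBF kernel, a bias-free \(\varepsilon \)-SVR dual solved by coordinate descent, "influence" approximated by the largest absolute residual on the candidate pool, and hyper-parameter optimisation reduced to a grid search over the kernel width every `reopt_every` additions. All function and parameter names are hypothetical; this is not the authors' algorithm.

```python
import numpy as np


def rbf_kernel(a, b, gamma):
    """Gaussian (RBF) kernel matrix between the rows of a and the rows of b."""
    d2 = np.sum(a**2, axis=1)[:, None] + np.sum(b**2, axis=1)[None, :] - 2.0 * a @ b.T
    return np.exp(-gamma * np.maximum(d2, 0.0))


def fit_eps_svr(x, y, gamma, c=10.0, eps=0.05, sweeps=200):
    """Bias-free eps-SVR dual solved by cyclic coordinate descent (assumed solver).

    Minimises 0.5*beta'K beta - y'beta + eps*|beta|_1 subject to |beta_i| <= c,
    where beta_i = alpha_i - alpha_i^*. Zero entries are non-support vectors.
    """
    k = rbf_kernel(x, x, gamma)
    beta = np.zeros(len(y))
    for _ in range(sweeps):
        for i in range(len(y)):
            # Target for coordinate i with all other coefficients held fixed.
            g = y[i] - (k[i] @ beta - k[i, i] * beta[i])
            # Soft-threshold by eps (the tube width), then clip to the box [-c, c].
            beta[i] = np.clip(np.sign(g) * max(abs(g) - eps, 0.0) / k[i, i], -c, c)
    return beta


def active_sparse_svr(x_pool, y_pool, budget, gamma_grid=(0.5, 2.0, 8.0),
                      reopt_every=5, eps=0.05, c=10.0):
    """Hypothetical greedy active selection of at most `budget` training points."""
    chosen = [int(np.argmax(np.abs(y_pool)))]  # seed with the largest-magnitude target
    gamma = gamma_grid[0]
    while len(chosen) < budget:
        xs, ys = x_pool[chosen], y_pool[chosen]
        if (len(chosen) - 1) % reopt_every == 0:
            # Assumed hyper-parameter step: kernel width with the lowest pool RMSE.
            def pool_rmse(g):
                b = fit_eps_svr(xs, ys, g, c, eps)
                return np.sqrt(np.mean((rbf_kernel(x_pool, xs, g) @ b - y_pool) ** 2))
            gamma = min(gamma_grid, key=pool_rmse)
        beta = fit_eps_svr(xs, ys, gamma, c, eps)
        residual = np.abs(rbf_kernel(x_pool, xs, gamma) @ beta - y_pool)
        residual[chosen] = -np.inf  # never select the same point twice
        best = int(np.argmax(residual))
        if residual[best] <= eps:  # every pool point already lies inside the tube
            break
        chosen.append(best)
    beta = fit_eps_svr(x_pool[chosen], y_pool[chosen], gamma, c, eps)
    return np.array(chosen), beta, gamma


# Usage on a synthetic 1-D problem (sinc on [-3, 3]).
rng = np.random.default_rng(0)
x = np.sort(rng.uniform(-3.0, 3.0, size=(200, 1)), axis=0)
y = np.sinc(x[:, 0])
idx, beta, gamma = active_sparse_svr(x, y, budget=15)
pred = rbf_kernel(x, x[idx], gamma) @ beta
rmse = np.sqrt(np.mean((pred - y) ** 2))
print(len(idx), gamma, rmse)
```

Because only selected points enter the dual, the sketch never has more than `budget` support vectors. Increasing `reopt_every` reduces the number of grid searches and hence the training time, possibly at some cost in accuracy, which loosely mirrors the trade-off the abstract describes.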

Acknowledgments

The authors acknowledge the financial support of the IWT INVENT project and ON Semiconductor, Belgium.

Additional information

Communicated by V. Piuri.

About this article

Cite this article

Ceperic, V., Gielen, G. & Baric, A. Sparse \(\varepsilon \)-tube support vector regression by active learning. Soft Comput 18, 1113–1126 (2014). https://doi.org/10.1007/s00500-013-1131-6

DOI: https://doi.org/10.1007/s00500-013-1131-6