Abstract

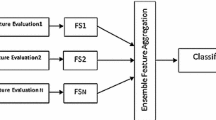

A classifier ensemble combines the predictions of a set of individual classifiers to produce more accurate results than any single classifier. However, an ensemble with too many classifiers may consume a large amount of computational time. This paper proposes a new ensemble subset evaluation method that integrates classifier diversity measures into a novel classifier ensemble reduction framework. The framework converts ensemble reduction into an optimization problem and uses the harmony search algorithm to find the optimized classifier ensemble. The subset evaluation method applies both pairwise and non-pairwise diversity measures. For the pairwise case, three conventional diversity measures and one new diversity measure are used to calculate the diversity merit. For the non-pairwise case, three classical measures are used. The proposed subset evaluation methods are demonstrated on experimental data. In comparison with other classifier ensemble methods, the method based on the interrater agreement measure achieves high prediction accuracy relative to current ensembles. In addition, the framework with the new diversity measure achieves relatively good performance with less computational time.
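To make the distinction between pairwise and non-pairwise diversity concrete, the following is a minimal sketch of one measure of each kind. The abstract gives no formulas, so this uses the standard textbook definitions of the pairwise disagreement measure and the non-pairwise interrater agreement (kappa), and it is not the authors' implementation. The names `oracle`, `mean_pairwise_disagreement`, and `interrater_agreement` are hypothetical, and the input is assumed to be a 0/1 "oracle" matrix in which entry `[i][j]` is 1 when classifier `i` labels sample `j` correctly.

```python
from itertools import combinations


def disagreement(correct_a, correct_b):
    """Pairwise measure: fraction of samples where exactly one classifier is correct."""
    n = len(correct_a)
    return sum(a != b for a, b in zip(correct_a, correct_b)) / n


def mean_pairwise_disagreement(oracle):
    """Average the pairwise disagreement over all classifier pairs in a subset."""
    pairs = list(combinations(oracle, 2))
    return sum(disagreement(a, b) for a, b in pairs) / len(pairs)


def interrater_agreement(oracle):
    """Non-pairwise measure: kappa computed over the whole subset at once.

    Lower values indicate a more diverse subset. Undefined when the mean
    individual accuracy is exactly 0 or 1 (division by zero).
    """
    num_classifiers = len(oracle)
    num_samples = len(oracle[0])
    # Mean individual accuracy across all classifiers and samples.
    p_bar = sum(sum(row) for row in oracle) / (num_classifiers * num_samples)
    # For each sample, count how many classifiers got it right.
    votes = [sum(col) for col in zip(*oracle)]
    spread = sum(v * (num_classifiers - v) for v in votes) / num_classifiers
    return 1 - spread / (num_samples * (num_classifiers - 1) * p_bar * (1 - p_bar))


# Illustrative data only (assumption): 3 classifiers evaluated on 4 samples.
oracle = [
    [1, 1, 0, 1],
    [1, 0, 1, 1],
    [0, 1, 1, 1],
]

print(mean_pairwise_disagreement(oracle))
print(interrater_agreement(oracle))
```

In a reduction framework of the kind described above, a score like either of these could serve as the merit of a candidate classifier subset that the search algorithm tries to optimize. How the paper actually combines the measures with harmony search is not specified in the abstract.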


Acknowledgments

The authors would like to thank the reviewers for their invaluable comments and suggestions, which have helped improve the presentation of this paper greatly.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by D. Neagu.

This work was supported by the National Natural Science Foundation of China (Nos. 61203336, 61273338, and 61075058) and the Major State Basic Research Development Program of China (973 Program) (No. 2013CB329502).

Cite this article

Yao, G., Zeng, H., Chao, F. et al. Integration of classifier diversity measures for feature selection-based classifier ensemble reduction. Soft Comput 20, 2995–3005 (2016). https://doi.org/10.1007/s00500-015-1927-7

DOI: https://doi.org/10.1007/s00500-015-1927-7