Abstract

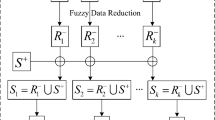

Big data are data too large to be handled and analyzed by traditional software tools, and they can be characterized by five V’s: volume, velocity, variety, value and veracity. In the real world, however, some big data have a further feature: class imbalance. E-health big data, credit card fraud detection big data and extreme weather forecast big data, for example, are all class imbalanced. To address the problem of classifying binary imbalanced big data, this paper proposes a three-stage algorithm based on MapReduce, non-iterative learning, ensemble learning and oversampling. First, for each positive instance, its enemy nearest neighbor is found with MapReduce, and p positive instances are randomly generated with uniform distribution in its enemy nearest neighbor hypersphere, i.e., p positive instances are oversampled within the hypersphere. Second, l balanced data subsets are constructed, and l classifiers are trained on them with a non-iterative learning approach. Finally, the trained classifiers are integrated by fuzzy integral to classify unseen instances. We experimentally compared the proposed algorithm with three related algorithms (SMOTE, SMOTE+RF-BigData and MR-V-ELM) and conducted a statistical analysis of the experimental results. The experimental results and the statistical analysis demonstrate that the proposed algorithm outperforms the other three methods.
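The oversampling stage described in the abstract can be illustrated with a minimal single-machine sketch. It rests on assumptions that the abstract does not confirm: the enemy nearest neighbor hypersphere is taken to be the ball centered at the positive instance whose radius is the Euclidean distance to its nearest negative instance, and a brute-force search stands in for the MapReduce neighbor search. The function names (`enemy_nearest_distance`, `sample_in_ball`, `oversample`) and the toy data are hypothetical and are not taken from the paper.

```python
import math
import random


def enemy_nearest_distance(x, negatives):
    """Distance from positive instance x to its nearest negative (enemy) instance.

    Brute force here; the paper performs this search with MapReduce.
    """
    return min(math.dist(x, n) for n in negatives)


def sample_in_ball(center, radius, rng):
    """Draw one point uniformly from the ball of the given radius around center."""
    d = len(center)
    # A normalized Gaussian vector gives a uniformly distributed direction.
    g = [rng.gauss(0.0, 1.0) for _ in range(d)]
    norm = math.sqrt(sum(v * v for v in g))
    # Scaling the radius by U^(1/d) makes the points uniform in volume.
    r = radius * rng.random() ** (1.0 / d)
    return [c + r * v / norm for c, v in zip(center, g)]


def oversample(positives, negatives, p, seed=0):
    """Generate p synthetic positives around every original positive instance."""
    rng = random.Random(seed)
    synthetic = []
    for x in positives:
        # Assumption: the hypersphere is centered at x and reaches its nearest enemy.
        radius = enemy_nearest_distance(x, negatives)
        synthetic.extend(sample_in_ball(x, radius, rng) for _ in range(p))
    return synthetic


# Toy data (hypothetical): two positive and three negative instances in 2-D.
positives = [[0.0, 0.0], [1.0, 1.0]]
negatives = [[3.0, 0.0], [0.0, 4.0], [5.0, 5.0]]
new_points = oversample(positives, negatives, p=3)
```

With this construction, no synthetic instance lies farther from its originating positive instance than that instance's nearest negative neighbor, which is presumably the motivation for restricting oversampling to the hypersphere.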

Acknowledgements

This research is supported by the National Natural Science Foundation of China (71371063) and by the Natural Science Foundation of Hebei Province (F2017201026).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by X. Wang, A. K. Sangaiah, M. Pelillo.

About this article

Cite this article

Zhai, J., Zhang, S., Zhang, M. et al. Fuzzy integral-based ELM ensemble for imbalanced big data classification. Soft Comput 22, 3519–3531 (2018). https://doi.org/10.1007/s00500-018-3085-1

DOI: https://doi.org/10.1007/s00500-018-3085-1