Abstract

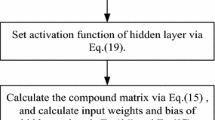

To accurately predict the combustion efficiency of a 300 MW circulating fluidized bed boiler (CFBB), this paper proposes a circular convolution parallel extreme learning machine (CCPELM), which is a double parallel feedforward neural network. In CCPELM, circular convolution is used to map the hidden-layer information into a higher-dimensional representation; in addition, the input-layer information is transmitted directly to the output layer, which gives the whole network a double parallel structure. CCPELM is applied to build a model of boiler efficiency from data samples collected from a 300 MW CFBB. Comparative simulations against other neural network models show that CCPELM achieves very high prediction accuracy with fast learning speed and very good repeatability in learning ability.

References

Antoulas AC (2005) An overview of approximation methods for large-scale dynamical systems. Annu Rev Control 29(2):181–190

Arsie I, Pianese C, Sorrentino M (2010) Development of recurrent neural networks for virtual sensing of NOx emissions in internal combustion engines. SAE Int J Fuels Lubr 2(2):354–361

Bartlett PL (1998) The sample complexity of pattern classification with neural networks: the size of the weights is more important than the size of the network. IEEE Trans Inf Theory 44(2):525–536

Deng W, Zheng Q, Chen L (2009) Regularized extreme learning machine. In: Proceedings of the IEEE symposium on computational intelligence and data mining, pp 389–395

Deng WY, Zheng QH, Chen L et al (2010) Research on extreme learning of neural networks. Chin J Comput 33(2):279–287

Gao XQ, Ding YM (2009) Digital signal processing. Xidian University Press, Xi’an

Green M, Ekelund U, Edenbrandt L et al (2009) Exploring new possibilities for case-based explanation of artificial neural network ensembles. Neural Netw 22(1):75–81

Hernandez L, Baladrón C, Aguiar JM et al (2013) Short-term load forecasting for microgrids based on artificial neural networks. Energies 6:1385–1408

Huang G-B, Chen L (2007) Convex incremental extreme learning machine. Neurocomputing 70:3056–3062

Huang G-B, Chen L (2008) Enhanced random search based incremental extreme learning machine. Neurocomputing 71:3460–3468

Huang GB, Zhu QY, Siew CK (2004) Extreme learning machine: a new learning scheme of feed forward neural networks. In: Proceedings of international joint conference on neural networks, pp 25–29

Huang G-B, Zhu Q-Y, Siew C-K (2006a) Extreme learning machine: theory and applications. Neurocomputing 70:489–501

Huang GB, Chen L, Siew CK (2006b) Universal approximation using incremental constructive feedforward networks with random hidden nodes. IEEE Trans Neural Netw 17(4):879–892

Huang GB, Zhou H, Ding X et al (2012) Extreme learning machine for regression and multiclass classification. IEEE Trans Syst Man Cybern B Cybern 42(2):513–529

Iliya S, Goodyer E, Gow J et al (2015) Application of artificial neural network and support vector regression in cognitive radio networks for RF power prediction using compact differential evolution algorithm. In: Federated conference on computer science and information systems, pp 55–66

Iosifidis A, Tefas A, Pitas I (2013) Minimum class variance extreme learning machine for human action recognition. IEEE Trans Circuits Syst Video Technol 5427–5431

Li MB, Huang GB, Saratchandran P et al (2005) Fully complex extreme learning machine. Neurocomputing 68(1):306–314

Li G, Niu P, Wang H et al (2013) Least square fast learning network for modeling the combustion efficiency of a 300 MW coal-fired boiler. Neural Netw Off J Int Neural Netw Soc 5(3):57–66

Luo Z, Wang F, Zhou H et al (2011) Principles of optimization of combustion by radiant energy signal and its application in a 660 MWe down- and coal-fired boiler. Korean J Chem Eng 28(12):2336–2343

May RJ, Maier HR, Dandy GC (2010) Data splitting for artificial neural networks using SOM-based stratified sampling. Neural Netw Off J Int Neural Netw Soc 23(2):283–294

Pangaribuan JJ, Suharjito (2014) Diagnosis of diabetes mellitus using extreme learning machine. In: International conference on information technology systems and innovation, pp 33–38

Pao YH, Park GH, Sobajic DJ (1994) Learning and generalization characteristics of the random vector functional-link net. Neurocomputing 6(2):163–180

Qiu X, Suganthan PN, Amaratunga GAJ (2017) Electricity load demand time series forecasting with empirical mode decomposition based random vector functional link network. In: IEEE international conference on systems, man, and cybernetics. IEEE, pp 1394–1399

Qu BY, Lang BF, Liang JJ et al (2016) Two-hidden-layer extreme learning machine for regression and classification. Neurocomputing 175:826–834

Schoukens J, Pintelon R (1991) Identification of linear systems: a practical guideline to accurate modeling. Pergamon Press, Inc, Oxford

Suresh S, Babu RV, Kim HJ (2009) No-reference image quality assessment using modified extreme learning machine classifier. Appl Soft Comput 9:541–552

Wang J, Wu W, Li Z et al (2012) Convergence of gradient method for double parallel feedforward neural network. Int J Numer Anal Model 8(3):484–495

Zhang H, Yin Y, Zhang S (2016) An improved ELM algorithm for the measurement of hot metal temperature in blast furnace. Neurocomputing 174(4):232–237

Zheng Y, Gao X, Sheng C (2017) Impact of co-firing lean coal on NOx emission of a large-scale pulverized coal-fired utility boiler during partial load operation. Korean J Chem Eng 34(4):1273–1280

Zhou H, Zhao JP, Zheng LG et al (2012) Modeling NOx emissions from coal-fired utility boilers using support vector regression with ant colony optimization. Eng Appl Artif Intell 25(1):147–158

Zhu Q-Y, Qin A-K, Suganthan P-N, Huang G-B (2005) Evolutionary extreme learning machine. Pattern Recognit 38(10):1759–1763

Acknowledgements

This work was funded by the National Natural Science Foundation of China (Grant Nos. 61403331, 61573306), the Program for the Top Young Talents of Higher Learning Institutions of Hebei (Grant No. BJ2017033), the Natural Science Foundation of Hebei Province (Grant No. F2016203427), the China Postdoctoral Science Foundation (Grant No. 2015M571280), the Doctoral Foundation of Yanshan University (Grant No. B847), the Natural Science Foundation for Young Scientists of Hebei Province (Grant No. F2014203099) and the Independent Research Program for Young Teachers of Yanshan University (Grant No. 13LG006).

Ethics declarations

Conflict of interest

All authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Additional information

Communicated by V. Loia.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

In this appendix, the proposed CCPELM is tested on nine regression problems; the specifications of these cases are reported in Table 5. For each data set, the input attributes are normalized into [−1, 1], while the target attribute is scaled into [0, 1]. The activation functions of the algorithms are those given in Sect. 5. For each data set, Table 6 compares the network complexity, determined by cross-validation, of the selected algorithms; the maximal number of hidden nodes for IELM is set to 500, following Huang et al. (2006b). For each case, each algorithm is run for 30 trials, and the results (mean of RMSE and \(R^{2}\)) are reported in Tables 7 and 8, respectively.
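The preprocessing and scoring protocol described above can be sketched as follows. This is only an illustrative sketch under stated assumptions, not the authors' code: the function names (`scale_to_range`, `rmse`, `r_squared`, `mean_over_trials`) are hypothetical, the scaling is assumed to be column-wise min-max scaling, \(R^{2}\) is assumed to be the usual coefficient of determination, and `run_trial` is a placeholder for training and testing any one of the compared models.

```python
import numpy as np


def scale_to_range(x, lo, hi):
    """Min-max scale each column of x linearly into [lo, hi] (assumed scheme)."""
    x = np.asarray(x, dtype=float)
    x_min = x.min(axis=0)
    x_max = x.max(axis=0)
    # Constant columns get span 1.0 so they map to `lo` instead of dividing by zero.
    span = np.where(x_max > x_min, x_max - x_min, 1.0)
    return lo + (hi - lo) * (x - x_min) / span


def rmse(y_true, y_pred):
    """Root mean squared error between targets and predictions."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def r_squared(y_true, y_pred):
    """Coefficient of determination (assumed definition of R^2)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)


def mean_over_trials(run_trial, n_trials=30, seed=0):
    """Average RMSE and R^2 over repeated trials.

    `run_trial(rng)` is a hypothetical callable that trains one model with
    the given random generator and returns (y_true, y_pred) on test data.
    """
    rng = np.random.default_rng(seed)
    scores = []
    for _ in range(n_trials):
        y_true, y_pred = run_trial(rng)
        scores.append((rmse(y_true, y_pred), r_squared(y_true, y_pred)))
    mean_rmse, mean_r2 = np.mean(scores, axis=0)
    return float(mean_rmse), float(mean_r2)
```

In such a setup, inputs would be passed through `scale_to_range(X, -1.0, 1.0)` and targets through `scale_to_range(y, 0.0, 1.0)` before training, and `mean_over_trials` would be called once per algorithm and data set with `n_trials=30`.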

As shown in Table 7, RVFL, TELM and P-ELM each outperform the other algorithms on only one case (RVFL on Servo, TELM on Delta elevators and P-ELM on abalone), and IELM performs better on two cases (auto price and machine CPU). CCPELM, however, outperforms the other algorithms on three data sets (Boston housing, auto MPG and stocks); on the delta ailerons case, RVFL, ELM, P-ELM, TELM and CCPELM all achieve the same excellent result. According to Table 8, CCPELM also achieves better \(R^{2}\) on four data sets. In addition, Fig. 4 shows the relationship between the generalization performance of CCPELM and its network size for the stocks case: the generalization performance remains very stable over a wide range of hidden-neuron counts. In short, CCPELM has a small prediction error, and its predicted values are closer to the actual values in most cases, which further supports the applicability of the proposed algorithm.

Cite this article

Li, G., Chen, B., Qi, X. et al. Circular convolution parallel extreme learning machine for modeling boiler efficiency for a 300 MW CFBB. Soft Comput 23, 6567–6577 (2019). https://doi.org/10.1007/s00500-018-3305-8
