Abstract

In this paper, a precise multimodal eye blink recognition method using feature-level fusion (MmERMFLF) is proposed. A new feature, the eye-eyebrow facet ratio (EEBFR), is computed to approximate the eye state; it is formed by fusing the eye facet ratio (EFR: the ratio of the diagonal length to the width of the eye) and the eyebrow-to-nose facet ratio (EBNFR: the distance between the eyebrow landmarks and the nose landmark). First, an improved intelligent framework, the Sagacious Information Recuperation Technique (SIRT), is presented; it senses an emergency state using information retrieved from an individual's eye blinks, pulse rate and behavioral patterns (emotions). Then the novel multimodal method (MmERMFLF) for detecting and counting eye blinks is implemented. One state-of-the-art database, ZJU, is used for training. To further improve performance, two feature-level fusion schemes, simple concatenation and fusion codes (gaborization), are applied and compared. Receiver operating characteristics, error rate, sensitivity, specificity and precision are used to demonstrate the performance of the proposed method qualitatively and quantitatively. With the proposed MmERMFLF, accuracy increases to 99.02% (using the EEBGFR method with a bagged ensemble classifier), compared with 97.60% for the unimodal eye blink recognition system. With gaborization fusion and a simple tree classifier, MmERMFLF classifies 99.80% of genuine blinks.

References

Adejoke EJ, Samuel IT (2015) Development of eye-blink and face corpora for research in human computer interaction. Int J Adv Comput Sci Appl (IJACSA) 6(5):109–111

Ahmad MI, Woo WL, Dlay S (2016) Non-stationary feature fusion of face and palmprint multimodal biometrics. Neurocomputing 177:49–61

Ahmd R, Borole JN (2015) Drowsy driver identification using eye blink detection. Int J Computer Science and Information Technologies 6(1):270–274

Alsaeedi N, Wloka D (2019) Real-time eyeblink detector and eye state classifier for virtual reality (VR) headsets (head-mounted displays, HMDs). Sensors 19(5):1121. https://doi.org/10.3390/s19051121

Arai K (2015) Relations between psychological status and eye movements. Int J Adv Res Artif Intell 4(6):16–22

Arai K (2018) Method for thermal pain level prediction with eye motion using SVM. Int J Adv Comput Sci Appl (IJACSA). https://doi.org/10.14569/IJACSA.2018.090427

Arai K, Mardiyanto R (2010) Real time blinking detection based on gabor filter. Int J Hum Comput Interact 1(3):33–45

Arora P, Bhargava S, Srivastava S, Hanmandlu M (2017) Multimodal biometric system based on information set theory and refined scores. Soft Comput 21(17):5133–5144. https://doi.org/10.1007/s00500-016-2108-z

Asthana A, Zafeiriou S, Cheng S, Pantic M (2014) Incremental face alignment in the wild. In: Conference on computer vision and pattern recognition

Bekerman I, Gottlieb P, Vaiman M (2014) Variations in eyeball diameters of the healthy adults. J Ophthalmol 2014:503645. https://doi.org/10.1155/2014/503645

Chau M, Betke M (2005) Real time eye tracking and blink detection with USB cameras. Technical Report 2005-12, Boston University Computer Science

Chaudhary G, Srivastava S (2018) Accurate human recognition by score-level and feature-level fusion using palm-phalanges print. Arab J Sci Eng 43(6):543–554. https://doi.org/10.1007/s13369-017-2644-6

Chaudhary G, Srivastava S, Bhardwaj S (2016) Multi-level fusion of palmprint and dorsal hand vein. In: Satapathy SC, Mandal JK, Udgata SK, Bhateja V (eds) Information systems design and intelligent applications. Springer, pp 321–330

Chuduc H, Nguyenphan K, Nguyen D (2013) A review of heart rate variability and its applications. APCBEE Procedia 7:80–85

Donato G, Bartlett MS, Hager JC, Ekman P, Sejnowski TJ (1999) Classifying facial actions. IEEE Trans Pattern Anal Mach Intell 21(10):974–989

Drutarovsky T, Fogelton A (2014) Eye blink detection using variance of motion vectors. In: Computer vision—ECCV workshops

Marchak FM (2013) Detecting false intent using eye blink measures. Front Psychol 4:1–9

Grover J, Hanmandlu M (2015) Hybrid fusion of score level and adaptive fuzzy decision level fusions for the finger-knuckle-print based authentication. Appl Soft Comput 31:1–13

Haghighat M, Abdel-Mottaleb M, Alhalabi W (2016) Discriminant correlation analysis for feature level fusion with application to multimodal biometrics. In: 2016 IEEE international conference on acoustics, speech and signal processing (ICASSP). IEEE, pp 1866–1870

Hong L, Jain A (1998) Integrating faces and fingerprints for personal identification. IEEE Trans Pattern Anal Mach Intell 20(12):1295–1307

Jeng R-H, Chen W-S (2017) Two feature-level fusion methods with feature scaling and hashing for multimodal biometrics. IETE Tech Rev 34(1):91–101

Khan MT, Anwar H, Ullah F et al. (2019) Smart real-time video surveillance platform for drowsiness detection based on eyelid closure. In: Wireless communications and mobile computing, 2019

Kim H, Suh KH, Lee EC (2017) Multi-modal user interface combining eye tracking and hand gesture recognition. J Multimodal User Interfaces 11:241–250

Kong WK, Zhang D, Li W (2003) Palmprint feature extraction using 2-D Gabor filters. Pattern Recognit 36(10):2339–2347

Kong A, Zhang D, Kamel M (2006) Palmprint identification using feature-level fusion. Pattern Recognit 39(3):478–487

Kong A, Zhang D, Kamel M (2009) A survey of palmprint recognition. Pattern Recognit 42(7):1408–1418

Lamba PS, Virmani D (2018a) Information retrieval from emotions and eye blinks with help of sensor nodes. Int J Electr Comput Eng (IJECE) 8(4):2433–2441

Lamba PS, Virmani D (2018b) Reckoning number of eye blinks using eye facet correlation for exigency detection. J Intell Fuzzy Syst 35(5):5279–5286

Leal S, Vrij A (2008) Blinking during and after lying. J Nonverbal Behav 32:187–194

Lee WH, Lee EC, Park KE (2010) Blink detection robust to various facial poses. J Neurosci Methods 193(2):356–372

Li Q, Qiu Z, Sun D (2005) Feature-level fusion of hand biometrics for personal verification based on kernel PCA. In: Zhang DY, Jain A (eds) Advances in biometrics. Springer, Berlin, pp 744–750

Milborrow S, Nicolls F (2008) Locating facial features with an extended active shape model. In: Computer vision—ECCV 2008, lecture notes in computer science, vol 5305. Springer, pp 504–513

Pan G, Sun L, Wu Z, Lao S (2007) Eyeblink-based anti-spoofing in face recognition from a generic webcamera. In: ICCV

Perelman BS (2014) Detecting deception via eyeblink frequency modulation. PeerJ 2(e260):1–12

Ross A, Jain A, Qian J-Z (2003) Information fusion in biometrics. Pattern Recognit Lett 24(13):2115–2125

Sánchez D, Melin P, Castillo O (2018) Fuzzy adaptation for particle swarm optimization for modular neural networks applied to iris recognition. In: Advances in intelligent systems and computing, pp 104–114. https://doi.org/10.1007/978-3-319-67137-6_11

Sánchez D, Melin P, Castillo O (2018) Comparison of type-2 fuzzy integration for optimized modular neural networks applied to human recognition. In: Berger-Vachon C, Gil Lafuente A, Kacprzyk J, Kondratenko Y, Merigó J, Morabito C (eds) Complex systems: solutions and challenges in economics, management and engineering. Studies in systems, decision and control, vol 125. Springer, Cham

Sanyal R, Chakrabarty K (2019) Two stream deep convolutional neural network for eye state recognition and blink detection. In: 2019 3rd international conference on electronics, materials engineering & nano-technology (IEMENTech), Kolkata, India, pp 1–8

Seymour TL, Baker CA, Gaunt JT (2013) Combining blink, pupil, and response time measures in a concealed knowledge test. Front Psychol 3:1–15

Singh LP, Deepali V (2019) Information retrieval from facial expression using voting to assert exigency. J Discrete Math Sci Cryptogr 22(2):177–190

Singh H, Singh J (2019) Object acquisition and selection using automatic scanning and eye blinks in an HCI system. J Multimodal User Interfaces. https://doi.org/10.1007/s12193-019-00303-0

Song F, Tan X, Liu X, Chen S (2014) Eyes closeness detection from still images with multi-scale histograms of principal oriented gradients. J Pattern Recognit Soc 47:2825–2838

Soukupova T (2016) Eye blink detection using facial landmarks (Diploma Thesis). https://dspace.cvut.cz/bitstream/handle/10467/64839/F3-DP-2016-Soukupova-Tereza-SOUKUPOVA_DP_2016.pdf

Soukupova T, Cech J (2016) Real-time eye blink detection using facial landmarks. In: Cehovin L, Mandeljc R, Struc V (eds) 21st computer vision winter workshop. Rimske Toplice, Slovenia

Sukno FM, Pavani S-K, Butakoff C, Frangi AF (2009) Automatic assessment of eye blinking patterns through statistical shape models. In: ICVS

Tian Y-I, Kanade T, Cohn JF (2001) Recognizing action units for facial expression analysis. IEEE Trans Pattern Anal Mach Intell 23(2):1–19

Torricelli D, Goffredo M, Conforto S, Schmid M (2009) An adaptive blink detector to initialize and update a view-based remote eye gaze tracking system in a natural scenario. Pattern Recognit Lett 30(12):1144–1150

Wang Y-Q (2014) An analysis of the Viola-Jones face detection algorithm. Image Process On Line 4:128–148

Wang F, Han J (2008) Robust multimodal biometric authentication integrating iris, face and palmprint. Inf Technol Control 37(4):326–332

Yan Z, Hu L, Chen H, Lu F (2008) Computer vision syndrome: a widely spreading but largely unknown epidemic among computer users. Comput Hum Behav 24(5):2026–2042

Zhu C, Huang C (2019) Adaptive Gabor algorithm for face posture and its application in blink detection. In: 2019 IEEE 3rd advanced information management, communicates, electronic and automation control conference (IMCEC), Chongqing, China, 2019, pp 1871–1875

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Communicated by V. Loia.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

A. SIRT

Figure 15 shows the framework of the proposed SIRT (Lamba and Virmani 2018a). The framework lays out the complete structure of the combined procedure. For instance, if the eye blink sensor detects a deliberate, abnormal blink pattern while the emotion recognition sensors detect a Fear or Surprise emotion in the same time window, together with an irregular pulse rate, the situation is treated as alarming. The detailed working of the framework and its parameters are described in Lamba and Virmani (2018a).

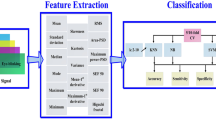
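The example above can be read as a simple conjunction of three cues observed in the same time window. The following is only a minimal sketch of that reading; the `WindowReading` structure and its field names are hypothetical, and how each cue is actually detected is described in Lamba and Virmani (2018a), not here.

```python
from dataclasses import dataclass

# Emotions the text names as alarming when they coincide with the other cues.
ALARM_EMOTIONS = {"Fear", "Surprise"}


@dataclass
class WindowReading:
    """Sensor summary for one time window (hypothetical structure)."""
    abnormal_blink_pattern: bool  # deliberate, unusual blink pattern detected
    emotion: str                  # label from the emotion recognition sensors
    irregular_pulse: bool         # pulse rate flagged as irregular


def is_alarming(reading: WindowReading) -> bool:
    """Return True only when all three cues coincide in the same window."""
    return (
        reading.abnormal_blink_pattern
        and reading.emotion in ALARM_EMOTIONS
        and reading.irregular_pulse
    )
```

For instance, `is_alarming(WindowReading(True, "Fear", True))` evaluates to `True`, while removing any one of the three cues yields `False`.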

B. Feature extraction and classification for unimodal eye blink recognition system

Eye landmarks (Asthana et al. 2014) are detected in every frame of the video sequence, as shown in Fig. 16.

The EFR, the ratio of the diagonal length to the width of the eye, is computed (Lamba and Virmani 2018b).

P1 to P6 are the landmarks of the left eye, as depicted in Fig. 16; similarly, P7 to P12 are the landmarks detected in the right eye. EFRL and EFRR denote the eye facet ratios of the left and right eye, respectively. Because each individual has a different eye structure, the distance is calculated diagonally; the diagonal distance between landmarks provides better results (Lamba and Virmani 2018b). Since both eyes blink synchronously, the EFRs of the two eyes are averaged (Eq. B.7). To the best of our knowledge, no existing paper uses the diagonal distance between the eye landmarks.
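The defining equations (up to Eq. B.7) are not reproduced in this text, so the following is only an illustrative sketch of a diagonal-to-width ratio averaged over both eyes. It assumes, without confirmation from the source, that P1 and P4 are the eye corners, that P2, P3 lie on the upper lid and P5, P6 on the lower lid, and that the two diagonals P2–P5 and P3–P6 are averaged; the function names are hypothetical.

```python
import math


def _dist(p, q):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def eye_facet_ratio(eye):
    """Diagonal-to-width ratio for one eye given six landmarks P1..P6.

    ASSUMED layout (not confirmed by the source): P1 and P4 are the eye
    corners, P2 and P3 lie on the upper lid, P5 and P6 on the lower lid,
    so P2-P5 and P3-P6 are the two diagonals.
    """
    p1, p2, p3, p4, p5, p6 = eye
    diagonal = (_dist(p2, p5) + _dist(p3, p6)) / 2.0  # mean diagonal length
    width = _dist(p1, p4)                             # corner-to-corner width
    return diagonal / width


def mean_efr(left_eye, right_eye):
    """Average the left and right ratios, since both eyes blink together."""
    return (eye_facet_ratio(left_eye) + eye_facet_ratio(right_eye)) / 2.0
```

The averaging step mirrors the statement that the EFRs of the two eyes are combined into one value per frame; the exact pairing of landmarks should be checked against Lamba and Virmani (2018b).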

The landmarks are detected in each video frame, and the EFR is computed from the diagonal length and the width of the eye. For a given individual, the EFR is roughly constant while the eye is open and drops toward zero when the eye closes. It is found experimentally that when an individual's eye is closed (during a blink), the EFR value is less than or equal to 0.21 (the threshold value). Figure 17 shows the frame-wise eye status (open/half open/closed) with EFR values for a video sequence (frames 13 to 25) from the ZJU database. The blink starts at frame 16 and ends at frame 20. The figure shows that whenever the eye is in the half-open or open state, the EFR value is greater than 0.21. The same pattern is seen in all the videos of the ZJU database. Another example, an EFR signal over a video sequence of 147 frames containing six blinks (the EFR curve drops to or below the 0.21 threshold six times), is presented in Fig. 18.
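Counting threshold crossings in an EFR signal, as done for Fig. 18, can be sketched as follows. This is a minimal illustration that treats each maximal run of frames with EFR at or below 0.21 as one blink; the function name is hypothetical and the sample values in the usage note are made up, not taken from the ZJU database.

```python
EFR_THRESHOLD = 0.21  # closed-eye threshold reported in the text


def closed_eye_runs(efr_values, threshold=EFR_THRESHOLD):
    """Return (start, end) index pairs of maximal runs with EFR <= threshold."""
    runs = []
    start = None
    for i, value in enumerate(efr_values):
        closed = value <= threshold
        if closed and start is None:
            start = i                    # eye has just closed
        elif not closed and start is not None:
            runs.append((start, i - 1))  # eye has just reopened
            start = None
    if start is not None:                # signal ends while the eye is closed
        runs.append((start, len(efr_values) - 1))
    return runs
```

With the made-up signal `[0.30, 0.29, 0.20, 0.10, 0.28, 0.31, 0.21, 0.33]`, the function returns two runs, `(2, 3)` and `(6, 6)`, which would be counted as two blinks. A plain threshold like this cannot tell a blink from a long voluntary eye closure, which is why the paper goes on to use a windowed classifier.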

Theoretically, to find the coordinates of the landmarks P2, P3, P5 and P6, the eye structure (eye landmarks) can be modelled as an ellipse whose center is translated from the origin to (h, k); the equation of the ellipse is given by

To find the coordinates (P2, P3, P5 and P6), a line of slope m = 1 is made to pass through the center of the ellipse (h, k), which is given by

Substituting Eq. B.9 into Eq. B.8, we get

which may be rearranged as a quadratic equation given by

Thus the coordinates of the intersections are given by
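The equations referred to in this derivation are not reproduced in this text. As a sketch of the algebra the steps describe, assuming a standard axis-aligned ellipse with semi-axes a and b (symbols that do not appear in the source), the derivation would read:

```latex
\begin{align*}
\frac{(x-h)^2}{a^2} + \frac{(y-k)^2}{b^2} &= 1
  && \text{ellipse centered at } (h,k) \\
y - k &= x - h
  && \text{line of slope } m = 1 \text{ through } (h,k) \\
\frac{(x-h)^2}{a^2} + \frac{(x-h)^2}{b^2} &= 1
  && \text{line substituted into the ellipse} \\
(a^2+b^2)\,x^2 - 2h\,(a^2+b^2)\,x + h^2\,(a^2+b^2) - a^2 b^2 &= 0
  && \text{quadratic in } x \\
x = h \pm \frac{ab}{\sqrt{a^2+b^2}}, \qquad
y &= k \pm \frac{ab}{\sqrt{a^2+b^2}}
  && \text{intersections (same sign in } x \text{ and } y\text{)}
\end{align*}
```

Under these assumptions the slope-1 line yields two of the four points; by the symmetry of the ellipse, a line of slope −1 through (h, k) would give the other two, with opposite signs in x and y.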

Classification of extracted features

If a subject yawns, voluntarily closes the eyes for a long period, or makes a facial expression, a series of low EFR values is obtained. This does not mean that the subject is blinking. Therefore, a classifier that takes a larger temporal window of frames as input is proposed. Because a normal blink lasts from 100 to 400 ms, a window of roughly 420 ms can have a significant impact on blink detection. The ZJU database contains videos recorded at 30 fps, so a window of this length covers about 13 frames. Thus, to get the best results, a 13-dimensional feature is formed for each frame by concatenating its EFR with the EFRs of its ± 6 neighboring frames. For training, 13 EFR values (13 consecutive frames) are taken and assigned the response value 1 if a blink is present and 0 otherwise. A sample classification matrix for a video sequence with 1000 input frames is shown in Fig. 19. Here EFRi (i = 1 to the number of frames) is the EFR computed for the ith frame of the video sequence. For example, if there are 1000 frames in a video sequence, the first row contains the EFR values of the first 13 frames (1–13), the second row contains the 13 EFR values of frames 2–14, and so on. The last row contains the EFR values of frames 988 to 1000.

Different classifiers are trained on the ZJU video sequences and manually annotated sequences. During testing, a classifier is run in a scanning-window fashion: a 13-dimensional feature is computed and classified for each frame of a video sequence. A blink is detected immediately after it ends. The stage-wise process for blink detection is shown in Fig. 20.
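The 13-frame windowing can be sketched in a few lines. This is an illustration only: the function names are hypothetical, and the labelling rule (a row is positive when its center frame is annotated as a blink) is an assumption, since the text says only that the response is 1 "if a blink is present".

```python
WINDOW = 13  # center frame plus its 6 neighbors on each side


def build_feature_rows(efr_values, window=WINDOW):
    """Row j holds the EFRs of frames j+1 .. j+window (1-based frame numbers)."""
    n_rows = max(len(efr_values) - window + 1, 0)
    return [list(efr_values[j:j + window]) for j in range(n_rows)]


def label_rows(blink_flags, window=WINDOW):
    """Label each row from per-frame blink annotations (0 or 1).

    ASSUMPTION: a row is labelled 1 when its center frame is annotated
    as part of a blink; the source does not spell out this rule.
    """
    half = window // 2
    n_rows = max(len(blink_flags) - window + 1, 0)
    return [int(blink_flags[j + half]) for j in range(n_rows)]
```

For a sequence of 1000 frames, `build_feature_rows` returns 988 rows: the first covers frames 1–13 and the last covers frames 988–1000, matching the layout described for Fig. 19.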

C. Percentage per true class

Tables 6, 7 and 8 show a particular instance of the percentages per true class, including the true positive rate (TPR) and false negative rate (FNR) from the confusion matrix, of the individual classifiers for the EFR (unimodal), EEBFR and EEBGFR (multimodal) methods.
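Percentages per true class are obtained by normalizing each row of a confusion matrix by the number of samples in that true class. The sketch below shows the computation; the function name is hypothetical, and the counts in the usage note are made up for illustration and are not the values of Tables 6 to 8.

```python
def per_true_class_rates(confusion):
    """Convert counts confusion[true][pred] into row-wise percentages.

    For a true class c, the entry [c][c] is its true positive rate (TPR)
    and the remaining entries in that row sum to its false negative
    rate (FNR).
    """
    rates = {}
    for true_label, row in confusion.items():
        total = sum(row.values())
        rates[true_label] = {
            pred: (100.0 * count / total if total else 0.0)
            for pred, count in row.items()
        }
    return rates
```

With made-up counts `{"blink": {"blink": 90, "no_blink": 10}, "no_blink": {"blink": 5, "no_blink": 95}}`, the "blink" class has a TPR of 90.0% and an FNR of 10.0%.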

About this article

Cite this article

Lamba, P.S., Virmani, D. & Castillo, O. Multimodal human eye blink recognition method using feature level fusion for exigency detection. Soft Comput 24, 16829–16845 (2020). https://doi.org/10.1007/s00500-020-04979-5

DOI: https://doi.org/10.1007/s00500-020-04979-5