Abstract

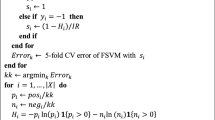

Uncertainty and imprecision degrade the efficiency of decision-making applications and of some machine learning methods. The support vector machine (SVM) is particularly affected, because noisy samples diminish the performance of SVM training. A dedicated method is therefore needed to mitigate this problem. Fuzzy approaches, which have already been used in some classification methods, can handle it. This paper presents a novel weighted support vector machine that reduces the noise sensitivity of the standard support vector machine in multiclass data classification. The basic idea is to add a weighting coefficient, called the entropy degree, to the penalty term of the Lagrangian formulation of the optimization problem; the coefficient is computed using the lower and upper approximations of the membership function in fuzzy rough set theory. As a result, noisy samples receive a low degree and important samples receive a high degree. To evaluate the proposed method, WSVM-FRS (Weighted SVM-Fuzzy Rough Set), several experiments were conducted using tenfold cross-validation on real-world data sets from the UCI repository and on the MNIST data set. Experimental results show that the proposed method is superior to the other state-of-the-art competing methods in terms of accuracy, precision and recall.
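
For orientation, the generic weighted soft-margin SVM primal (binary case) known from the weighted-SVM literature can be written as below. This is only a sketch of the general form and not necessarily the exact formulation of WSVM-FRS. The notation is assumed: $s_i \in [0, 1]$ is a per-sample weight playing the role of the entropy degree, $C$ is the penalty parameter, $\xi_i$ are slack variables, $\phi$ is the feature map, and $\mathbf{w}$, $b$ define the separating hyperplane. How $s_i$ is derived from the fuzzy rough lower and upper approximations is not specified in this abstract.

$$
\begin{aligned}
\min_{\mathbf{w},\, b,\, \boldsymbol{\xi}} \quad & \frac{1}{2}\lVert \mathbf{w} \rVert^{2} + C \sum_{i=1}^{n} s_i\, \xi_i \\
\text{s.t.} \quad & y_i\left(\mathbf{w}^{\top} \phi(\mathbf{x}_i) + b\right) \ge 1 - \xi_i, \\
& \xi_i \ge 0, \qquad i = 1, \dots, n
\end{aligned}
$$

In this form, a weight $s_i$ close to 0 means the slack of sample $i$ is barely penalized, so a suspected noisy sample has little influence on the margin. Setting $s_i = 1$ for all $i$ recovers the standard soft-margin SVM. In the corresponding dual, the box constraint on each multiplier becomes $0 \le \alpha_i \le s_i C$.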

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with animals performed by any of the authors.

About this article

Cite this article

Moslemnejad, S., Hamidzadeh, J. Weighted support vector machine using fuzzy rough set theory. Soft Comput 25, 8461–8481 (2021). https://doi.org/10.1007/s00500-021-05773-7

DOI: https://doi.org/10.1007/s00500-021-05773-7