Abstract

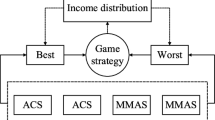

To address the slow convergence and limited accuracy of traditional ant colony algorithms on the traveling salesman problem (TSP), we propose a multi-ant colony algorithm based on the Stackelberg game and incremental learning (SGIACO). We apply a Stackelberg game strategy across multiple colonies, in which the leader guides the follower so that the populations co-evolve, balancing the convergence and diversity of the algorithm. Furthermore, we propose an incremental learning strategy that reinforces efficient paths on the public routes and ignores inefficient ones, thus accelerating convergence. Finally, when the algorithm stagnates, a pheromone balance mechanism helps the ants escape from local optima. We conducted experiments on 23 TSP instances to validate the algorithm's performance and compared it with ACS, MMAS, and other recent algorithms. In addition, non-parametric tests were conducted for a comprehensive performance analysis. We also verified the feasibility of SGIACO through simulations in robot path planning scenarios. The experimental results show that SGIACO achieves good convergence and accuracy and is competitive with the other algorithms. Future research will aim to scale SGIACO to larger real-world applications, enhancing its adaptability and scalability.
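The abstract does not specify how the pheromone balance mechanism works. As a rough illustration of the general idea only, the sketch below uses the pheromone trail smoothing known from MMAS, which pulls every trail toward an upper bound once the search stagnates. This is a swapped-in, well-known technique and not the authors' mechanism; the names `smooth_pheromone`, `is_stagnant`, `tau_max`, `delta`, and `window`, as well as the stagnation test itself, are assumptions made for this example.

```python
def smooth_pheromone(tau, tau_max, delta):
    """MMAS-style trail smoothing (assumed stand-in, not the SGIACO rule).

    Each off-diagonal trail moves a fraction `delta` of the way toward
    `tau_max`: delta = 0 changes nothing, delta = 1 resets all trails.
    """
    if not 0.0 <= delta <= 1.0:
        raise ValueError("delta must lie in [0, 1]")
    n = len(tau)
    return [
        [tau[i][j] if i == j else tau[i][j] + delta * (tau_max - tau[i][j])
         for j in range(n)]
        for i in range(n)
    ]


def is_stagnant(best_lengths, window):
    """Hypothetical stagnation test: the best tour length has not improved
    during the last `window` iterations."""
    if len(best_lengths) <= window:
        return False
    return min(best_lengths[-window:]) >= min(best_lengths[:-window])


# Toy 3-city pheromone matrix in which one edge dominates the others.
tau = [[0.0, 4.0, 0.5],
       [4.0, 0.0, 0.5],
       [0.5, 0.5, 0.0]]
# Best tour length per iteration; the last three iterations bring no gain.
history = [120.0, 110.0, 105.0, 105.0, 105.0, 105.0]

if is_stagnant(history, window=3):
    tau = smooth_pheromone(tau, tau_max=4.0, delta=0.5)
```

In this toy run the weak trails rise from 0.5 to 2.25 while the dominant trail stays at 4.0, so previously neglected edges regain a realistic chance of being selected without discarding what the colony has learned.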

Data availability

Enquiries about data availability should be directed to the authors.

References

Ajmal MS, Iqbal Z, Khan FZ, Ahmad M, Ahmad I, Gupta BB (2021) Hybrid ant genetic algorithm for efficient task scheduling in cloud data centers. Comput Electr Eng. https://doi.org/10.1016/j.compeleceng.2021.107419

Akhand MAH, Ayon SI, Shahriyar SA, Siddique N, Adeli H (2020) Discrete spider monkey optimization for travelling salesman problem. Appl Soft Comput. https://doi.org/10.1016/j.asoc.2019.105887

Baltierra S, Valdebenito J, Mora M (2022) A proposal of edge detection in images with multiplicative noise using the ant colony system algorithm. Eng Appl Artif Intell. https://doi.org/10.1016/j.engappai.2022.104715

Cai X, Gao L, Li F (2019) Sequential approximation optimization assisted particle swarm optimization for expensive problems. Appl Soft Comput. https://doi.org/10.1016/j.asoc.2019.105659

Cinar AC, Korkmaz S, Kiran MS (2020) A discrete tree-seed algorithm for solving symmetric traveling salesman problem. Eng Sci Technol Int J 23(4):879–890. https://doi.org/10.1016/j.jestch.2019.11.005

Dorigo M, Gambardella LM (1997) Ant colony system: a cooperative learning approach to the traveling salesman problem. IEEE Trans Evol Comput 1(1):53–66. https://doi.org/10.1109/4235.585892

Dorigo M, Maniezzo V, Colorni A (1996) Ant system: optimization by a colony of cooperating agents. IEEE Trans Syst Man Cybern B Cybern 26(1):29–41. https://doi.org/10.1109/3477.484436

Dubey GP, Stalin S, Alqahtani O, Alasiry A, Sharma M, Aleryani A, Shukla PK, Alouane MT-H (2023) Optimal path selection using reinforcement learning based ant colony optimization algorithm in IoT-based wireless sensor networks with 5G technology. Comput Commun 212:377–389. https://doi.org/10.1016/j.comcom.2023.09.015

Ebadinezhad S (2020) DEACO: Adopting dynamic evaporation strategy to enhance ACO algorithm for the traveling salesman problem. Eng Appl Artif Intell. https://doi.org/10.1016/j.engappai.2020.103649

Ezugwu AE-S, Adewumi AO (2017) Discrete symbiotic organisms search algorithm for travelling salesman problem. Expert Syst Appl 87:70–78. https://doi.org/10.1016/j.eswa.2017.06.007

Gülcü Ş, Mahi M, Baykan ÖK, Kodaz H (2016) A parallel cooperative hybrid method based on ant colony optimization and 3-Opt algorithm for solving traveling salesman problem. Soft Comput 22(5):1669–1685. https://doi.org/10.1007/s00500-016-2432-3

Hore S, Chatterjee A, Dewanji A (2018) Improving variable neighborhood search to solve the traveling salesman problem. Appl Soft Comput 68:83–91. https://doi.org/10.1016/j.asoc.2018.03.048

Huang W (2022) Seismic data interpolation by Shannon entropy-based shaping. IEEE Trans Geosci Remote Sens 60:1–12. https://doi.org/10.1109/tgrs.2022.3180200

Kennedy J, Eberhart R (1995) Particle swarm optimization. Proceedings of international conference on neural networks, 1942–1948. https://doi.org/10.1109/ICNN.1995.488968

Jati GK, Kuwanto G, Hashmi T, Widjaja H (2023) Discrete komodo algorithm for traveling salesman problem. Appl Soft Comput. https://doi.org/10.1016/j.asoc.2023.110219

Jiang W-Y, Lin Y, Chen M, Yu Y-Y (2015) A co-evolutionary improved multi-ant colony optimization for ship multiple and branch pipe route design. Ocean Eng 102:63–70. https://doi.org/10.1016/j.oceaneng.2015.04.028

Jung M, Lee J, Kim J (2024) A lightweight CNN-transformer model for learning traveling salesman problems. Appl Intell. https://doi.org/10.1007/s10489-024-05603-x

Kumar V, Dangi B (2022) Quantile-based Shannon entropy for record statistics. Commun Math Stat 11(2):283–306. https://doi.org/10.1007/s40304-021-00248-5

Kurdi M (2022) Ant colony optimization with a new exploratory heuristic information approach for open shop scheduling problem. Knowl-Based Syst. https://doi.org/10.1016/j.knosys.2022.108323

Li S, Wei Y, Liu X, Zhu H, Yu Z (2022) A new fast ant colony optimization algorithm: the saltatory evolution ant colony optimization algorithm. Mathematics. https://doi.org/10.3390/math10060925

Li G, Liu C, Wu L, Xiao W (2023a) A mixing algorithm of ACO and ABC for solving path planning of mobile robot. Appl Soft Comput. https://doi.org/10.1016/j.asoc.2023.110868

Li J, Yan J, Zhang H, Xiao K (2023b) Identification of feedback nonlinear systems with time delay based on chaotic decreasing weight sparrow search algorithm. Soft Comput 28(5):4009–4024. https://doi.org/10.1007/s00500-023-09373-5

Li W, Wang C, Huang Y, Cheung Y-M (2023c) Heuristic smoothing ant colony optimization with differential information for the traveling salesman problem. Appl Soft Comput. https://doi.org/10.1016/j.asoc.2022.109943

Liu C, Wu L, Xiao W, Li G, Xu D, Guo J, Li W (2023) An improved heuristic mechanism ant colony optimization algorithm for solving path planning. Knowl-Based Syst. https://doi.org/10.1016/j.knosys.2023.110540

Meng J, You X, Liu S (2022) Heterogeneous ant colony optimization based on adaptive interactive learning and non-zero-sum game. Soft Comput 26(8):3903–3920. https://doi.org/10.1007/s00500-022-06833-2

Miao C, Chen G, Yan C, Wu Y (2021) Path planning optimization of indoor mobile robot based on adaptive ant colony algorithm. Comput Ind Eng. https://doi.org/10.1016/j.cie.2021.107230

Miller BL, Shaw MJ (1996) Genetic algorithms with dynamic niche sharing for multimodal function optimization. Proceedings of 1996 IEEE international conference on evolutionary computation, 786–791. https://doi.org/10.1109/ICEC.1996.542701

Pan Q, Tang J, Wang H, Li H, Chen X, Lao S (2021) SFSADE: an improved self-adaptive differential evolution algorithm with a shuffled frog-leaping strategy. Artif Intell Rev 55(5):3937–3978. https://doi.org/10.1007/s10462-021-10099-9

Pan Q, Tang J, Lao S (2022) EDOA: an elastic deformation optimization algorithm. Appl Intell 52(15):17580–17599. https://doi.org/10.1007/s10489-022-03471-x

Pan Q, Tang J, Zhan J, Li H (2023) Bacteria phototaxis optimizer. Neural Comput Appl 35(18):13433–13464. https://doi.org/10.1007/s00521-023-08391-6

Panwar K, Deep K (2021) Transformation operators based grey wolf optimizer for travelling salesman problem. J Comput Sci. https://doi.org/10.1016/j.jocs.2021.101454

Rahman MG, Islam MZ (2022) Adaptive decision forest: an incremental machine learning framework. Pattern Recognit. https://doi.org/10.1016/j.patcog.2021.108345

Rokbani N, Kumar R, Abraham A, Alimi AM, Long HV, Priyadarshini I, Son LH (2020) Bi-heuristic ant colony optimization-based approaches for traveling salesman problem. Soft Comput 25(5):3775–3794. https://doi.org/10.1007/s00500-020-05406-5

Rungwachira P, Thammano A (2023) Hybrid sweep algorithm and modified ant system with threshold for travelling salesman problem. Adv Nat Comput Fuzzy Syst Knowl Discov Icnc-Fskd 2022(153):317–326. https://doi.org/10.1007/978-3-031-20738-9_36

Salii Y (2019) Revisiting dynamic programming for precedence-constrained traveling salesman problem and its time-dependent generalization. Eur J Oper Res 272(1):32–42. https://doi.org/10.1016/j.ejor.2018.06.003

Shao W, Shao Z, Pi D (2022) An ant colony optimization behavior-based MOEA/D for distributed heterogeneous hybrid flow shop scheduling problem under nonidentical time-of-use electricity tariffs. IEEE Trans Autom Sci Eng 19(4):3379–3394. https://doi.org/10.1109/tase.2021.3119353

Silva BCH, Fernandes IFC, Goldbarg MC, Goldbarg EFG (2020) Quota travelling salesman problem with passengers, incomplete ride and collection time optimization by ant-based algorithms. Comput Oper Res. https://doi.org/10.1016/j.cor.2020.104950

Strong WL (2016) Biased richness and evenness relationships within Shannon-Wiener index values. Ecol Ind 67:703–713. https://doi.org/10.1016/j.ecolind.2016.03.043

Stützle T, Hoos HH (2000) MAX–MIN ant system. Futur Gener Comput Syst 16(8):889–914. https://doi.org/10.1016/s0167-739x(00)00043-1

Tang M, Liao H, Wu X (2023) A Stackelberg game model for large-scale group decision making based on cooperative incentives. Inf Fusion 96:103–116. https://doi.org/10.1016/j.inffus.2023.03.013

Tang J, Pan Q, Chen Z, Liu G, Yang G, Zhu F, Lao S (2024) An improved artificial electric field algorithm for robot path planning. IEEE Trans Aerosp Electron Syst. https://doi.org/10.1109/taes.2024.3351110

Tripathy HK, Mishra S, Thakkar HK, Rai D (2021) CARE: a collision-aware mobile robot navigation in grid environment using improved breadth first search. Comput Electr Eng. https://doi.org/10.1016/j.compeleceng.2021.107327

Tuani AF, Keedwell E, Collett M (2020) Heterogenous adaptive ant colony optimization with 3-opt local search for the travelling salesman problem. Appl Soft Comput. https://doi.org/10.1016/j.asoc.2020.106720

Wang H, Yi W (2023) A Stackelberg game model for construction waste transportation. Adv Eng Inform. https://doi.org/10.1016/j.aei.2023.101991

Wang Y, Wang L, Chen G, Cai Z, Zhou Y, Xing L (2020) An improved ant colony optimization algorithm to the periodic vehicle routing problem with time window and service choice. Swarm Evol Comput. https://doi.org/10.1016/j.swevo.2020.100675

Wu C, Fu X, Pei J, Dong Z (2021) A novel sparrow search algorithm for the traveling salesman problem. IEEE Access 9:153456–153471. https://doi.org/10.1109/access.2021.3128433

Wu Y, Song W, Cao Z, Zhang J, Lim A (2022) Learning improvement heuristics for solving routing problems. IEEE Trans Neural Netw Learn Syst 33(9):5057–5069. https://doi.org/10.1109/tnnls.2021.3068828

Wu L, Huang X, Cui J, Liu C, Xiao W (2023) Modified adaptive ant colony optimization algorithm and its application for solving path planning of mobile robot. Expert Syst Appl. https://doi.org/10.1016/j.eswa.2022.119410

Xu X, Li J, Zhou M (2021) Delaunay-triangulation-based variable neighborhood search to solve large-scale general colored traveling salesman problems. IEEE Trans Intell Transp Syst 22(3):1583–1593. https://doi.org/10.1109/tits.2020.2972389

Yang K, You X, Liu S, Pan H (2020) A novel ant colony optimization based on game for traveling salesman problem. Appl Intell 50(12):4529–4542. https://doi.org/10.1007/s10489-020-01799-w

Yang H, Zhao M, Yuan L, Yu Y, Li Z, Gu M (2023) Memory-efficient transformer-based network model for traveling salesman problem. Neural Netw 161:589–597. https://doi.org/10.1016/j.neunet.2023.02.014

Yousefikhoshbakht M, Volchenkov D (2021) Solving the traveling salesman problem: a modified metaheuristic algorithm. Complexity 2021:1–13. https://doi.org/10.1155/2021/6668345

Yuan Q, Qian F (2010) A hybrid genetic algorithm for twice continuously differentiable NLP problems. Comput Chem Eng 34(1):36–41. https://doi.org/10.1016/j.compchemeng.2009.09.006

Zhang Y, Rong F, Wang Z (2018) Research on cold chain logistic service pricing—based on tripartite Stackelberg game. Neural Comput Appl 32(1):213–222. https://doi.org/10.1007/s00521-018-3803-8

Zhang W, Wang C, Lin W, Lin J (2020) Continuous-domain ant colony optimization algorithm based on reinforcement learning. Int J Wavel Multiresolut Inf Process. https://doi.org/10.1142/s0219691320500848

Zhang Z, Xu X, Yue F, Ba Y (2023) Robot path planning based on concept lattice. Int J Approx Reason 153:87–103. https://doi.org/10.1016/j.ijar.2022.11.013

Zhao H, Zhang C (2022) An ant colony optimization algorithm with evolutionary experience-guided pheromone updating strategies for multi-objective optimization. Expert Syst Appl. https://doi.org/10.1016/j.eswa.2022.117151

Zhao J, You X, Duan Q, Liu S (2022) Multiple ant colony algorithm combining community relationship network. Arab J Sci Eng 47(8):10531–10546. https://doi.org/10.1007/s13369-022-06579-x

Zhou X, Ma H, Gu J, Chen H, Deng W (2022) Parameter adaptation-based ant colony optimization with dynamic hybrid mechanism. Eng Appl Artif Intell. https://doi.org/10.1016/j.engappai.2022.105139

Funding

This work was supported in part by the National Natural Science Foundation of China under Grants 61673258 and 61075115, and in part by the Shanghai Natural Science Foundation under Grant 19ZR1421600.

Author information

Contributions

The paper and the code of the algorithm were written by Qihuan Wu. Suggestions for revising the manuscript were given by Xiaoming You. The material for the experiment was prepared by Sheng Liu. The final manuscript was approved by all authors.

Ethics declarations

Conflict of interest

All the authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

About this article

Cite this article

Wu, Q., You, X. & Liu, S. Multi-ant colony algorithm based on the Stackelberg game and incremental learning. Soft Comput 29, 2107–2128 (2025). https://doi.org/10.1007/s00500-025-10469-3

DOI: https://doi.org/10.1007/s00500-025-10469-3