Abstract

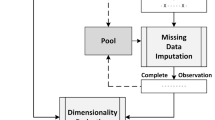

This paper presents the expectation–maximization (EM) variant of the probabilistic neural network (PNN) as a step toward an autonomous and deterministic PNN. In practice, faulty sensors produce input vectors with missing features, yet these vectors should not be discarded. To handle them, regularized EM is applied as a preprocessing step to impute the missing values. With random initialization, users must choose the number of clusters by trial and error, which makes training stochastic. Instead, Global k-means is used to find the number of clusters autonomously through a selection criterion and to supply it deterministically for training the model. Fast Global k-means is also tested as an alternative to Global k-means to reduce computation time. Tests are conducted on both homoscedastic and heteroscedastic PNNs, using benchmark medical datasets and vibration data collected from the aft gearbox of a US Navy CH-46E helicopter (known as the Westland data). The results fully support the use of fast Global k-means and regularized EM as preprocessing steps for the EM-trained PNN.
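To make the determinism claim concrete, here is a minimal NumPy sketch of fast Global k-means as it is commonly described in the literature, not the authors' implementation. Starting from the optimal one-center solution (the data mean), each step scores every data point x_n by the guaranteed error reduction b_n = Σ_j max(d_j − ‖x_n − x_j‖², 0), where d_j is the squared distance from x_j to its nearest current center. It then inserts the best-scoring point as a new center and refines the result with ordinary k-means. The function names `kmeans_from` and `fast_global_kmeans` are hypothetical, and because this abstract does not state the paper's cluster-count selection criterion, the sketch simply returns the clustering error (SSE) for each k so that a criterion can be applied afterwards.

```python
import numpy as np


def kmeans_from(X, centers, max_iter=100):
    """Lloyd's k-means started from the given centers; returns (centers, SSE)."""
    centers = centers.copy()
    for _ in range(max_iter):
        # Squared distance from every point to every center, shape (n, k).
        d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        # Recompute cluster means; an empty cluster keeps its previous center.
        new_centers = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(len(centers))
        ])
        converged = np.allclose(new_centers, centers)
        centers = new_centers
        if converged:
            break
    d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return centers, float(d2.min(axis=1).sum())


def fast_global_kmeans(X, k_max):
    """Deterministic incremental clustering for k = 1..k_max (hypothetical helper).

    Returns the list of center arrays and the clustering error (SSE) for each k.
    """
    X = np.asarray(X, dtype=float)
    centers = X.mean(axis=0, keepdims=True)  # optimal solution for k = 1
    solutions = [centers]
    sse = [float(((X - centers) ** 2).sum())]
    # Pairwise squared distances between data points, shape (n, n).
    pair = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)
    for _ in range(2, k_max + 1):
        # d_prev[j]: squared distance of x_j to its nearest current center.
        d_prev = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2).min(axis=1)
        # b[n] = sum_j max(d_prev[j] - ||x_n - x_j||^2, 0): guaranteed error
        # reduction from inserting x_n as a new center, before refinement.
        b = np.maximum(d_prev[None, :] - pair, 0.0).sum(axis=1)
        seed = X[int(b.argmax())]  # ties resolve to the lowest index
        centers, err = kmeans_from(X, np.vstack([centers, seed]))
        solutions.append(centers)
        sse.append(err)
    return solutions, sse
```

No random initialization is involved, so repeated runs on the same data return identical clusterings. For example, on two well-separated square blobs of four points each (corners of the unit squares at (0, 0) and (10, 10)), `fast_global_kmeans(X, 3)` gives an SSE of 404.0 for k = 1 and 4.0 for k = 2. The sharp drop is the kind of signal a cluster-count criterion would act on.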


About this article

Cite this article

Chang, R.K.Y., Loo, C.K. & Rao, M.V.C. Enhanced probabilistic neural network with data imputation capabilities for machine-fault classification. Neural Comput & Applic 18, 791–800 (2009). https://doi.org/10.1007/s00521-008-0215-1

DOI: https://doi.org/10.1007/s00521-008-0215-1