Abstract

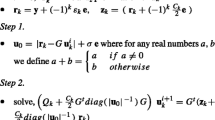

Twin support vector regression (TSVR) was recently proposed as a novel regressor that seeks a pair of nonparallel planes, i.e., ε-insensitive up- and down-bounds, by solving two related SVM-type problems. However, it may yield suboptimal solutions because its objective function is only positive semi-definite and it lacks complexity control. To address this shortcoming, we develop a novel SVR algorithm termed smooth twin SVR (STSVR). The idea is to reformulate TSVR as a strongly convex problem, which yields a unique global optimal solution for each subproblem. To solve the proposed optimization problem, we first adopt a smoothing technique to convert the original constrained quadratic programming problems into unconstrained minimization problems, and then use the well-known Newton–Armijo algorithm to solve the smooth TSVR. The effectiveness of the proposed method is demonstrated via experiments on synthetic and real-world benchmark datasets.
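To make the two solution steps concrete, the following is a minimal sketch rather than the paper's actual formulation. It assumes the smoothing replaces the plus function max(x, 0) with the log-based approximation used in smooth SVMs, and it minimizes a toy, regularized, down-bound-style objective with a Newton direction and Armijo backtracking. The objective, the names `smooth_plus`, `objective`, and `newton_armijo`, and all parameter values are illustrative assumptions.

```python
import numpy as np

def smooth_plus(x, alpha):
    """Smooth stand-in for max(x, 0): x + log(1 + exp(-alpha * x)) / alpha."""
    return np.logaddexp(0.0, alpha * x) / alpha

def objective(u, G, f, C, reg, alpha):
    """Value, gradient and Hessian of the toy smoothed objective (an assumption):
    0.5*reg*||u||^2 + 0.5*||f - G u||^2 + 0.5*C*||smooth_plus(G u - f)||^2."""
    r = G @ u - f                                  # positive where the fit exceeds the shifted target
    p = smooth_plus(r, alpha)
    s = 0.5 * (1.0 + np.tanh(0.5 * alpha * r))     # derivative of smooth_plus (logistic sigmoid)
    val = 0.5 * reg * (u @ u) + 0.5 * (r @ r) + 0.5 * C * (p @ p)
    grad = reg * u + G.T @ r + C * (G.T @ (p * s))
    w = 1.0 + C * (s * s + p * alpha * s * (1.0 - s))  # per-sample curvature, always positive
    hess = reg * np.eye(u.size) + G.T @ (G * w[:, None])
    return val, grad, hess

def newton_armijo(G, f, C=1.0, reg=1e-2, alpha=5.0, tol=1e-6, max_iter=50):
    """Newton iterations with Armijo backtracking on the step size."""
    u = np.zeros(G.shape[1])
    for _ in range(max_iter):
        val, grad, hess = objective(u, G, f, C, reg, alpha)
        if np.linalg.norm(grad) < tol:
            break
        d = np.linalg.solve(hess, -grad)           # Newton direction
        step = 1.0
        while step > 1e-10:                        # halve until sufficient decrease
            new_val = objective(u + step * d, G, f, C, reg, alpha)[0]
            if new_val <= val + 1e-4 * step * (grad @ d):
                break
            step *= 0.5
        u = u + step * d
    return u

# Hypothetical usage on synthetic data with a linear model [x, 1].
rng = np.random.default_rng(0)
x = np.linspace(-3.0, 3.0, 60)
y = np.sinc(x) + 0.1 * rng.standard_normal(x.size)
G = np.column_stack([x, np.ones_like(x)])
u = newton_armijo(G, y - 0.1)                      # 0.1 plays the role of epsilon
```

Because the regularization term makes the Hessian positive definite, each Newton system has a unique solution, which is the practical payoff of the strongly convex reformulation described above.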



Cite this article

Chen, X., Yang, J., Liang, J. et al. Smooth twin support vector regression. Neural Comput & Applic 21, 505–513 (2012). https://doi.org/10.1007/s00521-010-0454-9
