Abstract

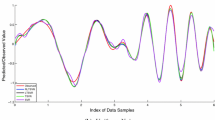

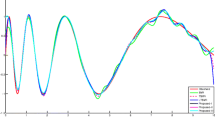

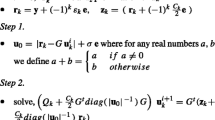

In this paper, a simple and linearly convergent Lagrangian support vector machine algorithm is proposed for the dual of twin support vector regression (TSVR). Although the algorithm at the outset requires the inverses of matrices, it is shown that each of them can be obtained by subtracting from the identity matrix a scalar multiple of the inverse of a positive semi-definite matrix that arises in the original formulation of TSVR. The algorithm is easy to implement and does not need any optimization packages. To demonstrate its effectiveness, experiments were performed on well-known synthetic and real-world datasets. Compared with the standard and twin support vector regression methods, the proposed method achieves similar or better generalization performance in less training time, which indicates its suitability and applicability.
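The inverse-free idea can be illustrated with a minimal sketch. It assumes a dual Hessian of the form Q = I/C + H(HᵀH)⁻¹Hᵀ with H = [A e], for which the Sherman-Morrison-Woodbury identity gives Q⁻¹ = C(I − (C/(1+C)) H(HᵀH)⁻¹Hᵀ). This form, the right-hand side `r`, the step size `alpha = 1.9 / C`, and the function names are assumptions made for illustration; they are not taken from the abstract. The loop is the generic Lagrangian SVM fixed-point iteration for a bound-constrained quadratic program, not necessarily the authors' exact update.

```python
import numpy as np

def smw_inverse(H, C):
    """Inverse of Q = I/C + H (H^T H)^{-1} H^T via Sherman-Morrison-Woodbury.

    Only the small matrix H^T H is solved against; the m x m matrix Q
    itself is never inverted. Assumes H has full column rank.
    """
    m = H.shape[0]
    P = H @ np.linalg.solve(H.T @ H, H.T)   # projection onto range(H)
    return C * (np.eye(m) - (C / (1.0 + C)) * P)

def lagrangian_iterate(Q, Q_inv, r, alpha, tol=1e-10, max_iter=1000):
    """Fixed-point iteration for: min 0.5 u^T Q u - r^T u  subject to u >= 0."""
    u = Q_inv @ r                            # unconstrained minimizer as start
    for _ in range(max_iter):
        # u_next = Q^{-1} (r + ((Q u - r) - alpha u)_+)
        u_next = Q_inv @ (r + np.maximum(Q @ u - r - alpha * u, 0.0))
        if np.linalg.norm(u_next - u) < tol:
            return u_next
        u = u_next
    return u

# Hypothetical toy data: 30 samples, 3 features, plus a column of ones.
rng = np.random.default_rng(0)
A = rng.normal(size=(30, 3))
H = np.hstack([A, np.ones((30, 1))])
C = 1.0

Q = np.eye(30) / C + H @ np.linalg.solve(H.T @ H, H.T)
Q_inv = smw_inverse(H, C)
r = rng.normal(size=30)                      # placeholder right-hand side
u = lagrangian_iterate(Q, Q_inv, r, alpha=1.9 / C)
```

At a fixed point of this iteration, `u` and `Q @ u - r` are both nonnegative and orthogonal, which are the optimality conditions of the bound-constrained problem. The step size is chosen in the range 0 < alpha < 2/C that is commonly quoted for this kind of iteration.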

Acknowledgments

The authors are extremely thankful to the learned referees for their critical and constructive comments, which greatly improved the earlier version of the paper. Mr. Tanveer acknowledges the financial support given as a scholarship by the Council of Scientific and Industrial Research, India.

Cite this article

Balasundaram, S., Tanveer, M. On Lagrangian twin support vector regression. Neural Comput & Applic 22 (Suppl 1), 257–267 (2013). https://doi.org/10.1007/s00521-012-0971-9

DOI: https://doi.org/10.1007/s00521-012-0971-9