Abstract

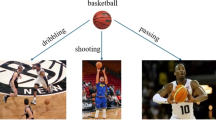

Automatically generating image captions is a challenging task in computer vision. The difficulty lies mainly in detecting the objects of interest and recognizing the interaction between them. In this paper, we introduce the “centerpiece interaction,” a complex visual composite that represents the interaction activity of the main objects. We propose a centerpiece interaction recognition framework that treats the detection of the objects of interest and the recognition of their interaction as a single integrated task. First, a graph-based model learns the 2.5D spatial co-occurrence context among objects, which strongly facilitates detection of the objects of interest. Second, a hierarchical model uses this 2.5D spatial co-occurrence context to learn relational features of the objects of interest over a hierarchy of stages by integrating their individual features, which significantly improves centerpiece interaction recognition. Experiments on a joint dataset show that our framework outperforms state-of-the-art methods in spatial co-occurrence context analysis, detection of the objects of interest, and centerpiece interaction recognition.
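To make the notion of co-occurrence context concrete, the following is a minimal sketch of a label co-occurrence graph built from per-image object annotations. It is not the graph-based model of this paper: it ignores the 2.5D spatial component entirely, and the input format, the function names `cooccurrence_graph` and `context_score`, and the toy annotations are all assumptions made for illustration.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_graph(images):
    """Count how often each pair of object labels appears in the same image.

    `images` is a list of label lists, one per image (hypothetical format).
    Returns a Counter mapping an alphabetically sorted label pair to its count.
    """
    edges = Counter()
    for labels in images:
        # Deduplicate labels so a pair counts at most once per image.
        for a, b in combinations(sorted(set(labels)), 2):
            edges[(a, b)] += 1
    return edges


def context_score(edges, candidate, detected):
    """Sum co-occurrence counts between a candidate label and detected labels."""
    return sum(
        edges[tuple(sorted((candidate, d)))]
        for d in detected
        if d != candidate
    )


# Toy annotations, invented for illustration only.
toy_images = [
    ["person", "horse", "fence"],
    ["person", "horse"],
    ["person", "bicycle"],
    ["horse", "fence"],
]
graph = cooccurrence_graph(toy_images)
```

In this toy setting, a candidate label that frequently co-occurs with already detected objects receives a higher `context_score`, which is one simple way that context could support detection of the objects of interest.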


Acknowledgments

This research was supported in part by the Natural Science Foundation of China (No. 60903071), the National Basic Research Program of China (973 Program, No. 2013CB329605), the Specialized Research Fund for the Doctoral Program of Higher Education of China, and the Training Program of the Major Project of BIT.

About this article

Cite this article

Bai, L., Li, K., Pei, J. et al. Main objects interaction activity recognition in real images. Neural Comput & Applic 27, 335–348 (2016). https://doi.org/10.1007/s00521-015-1846-7

DOI: https://doi.org/10.1007/s00521-015-1846-7