Abstract

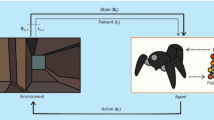

Improving the learning convergence of reinforcement learning (RL) in mobile robot navigation has been the interest of many recent works that have investigated different approaches to obtain knowledge from effectively and efficiently exploring the robot’s environment. In RL, this knowledge is of great importance for reducing the high number of interactions required for updating the value function and to eventually find an optimal or a nearly optimal policy for the agent. In this paper, we propose a topological Q-learning (TQ-learning) algorithm that makes use of the topological ordering among the observed states of the environment in which the agent acts. This algorithm builds an incremental topological map of the environment using Instantaneous Topological Map model which we use for accelerating value function updates as well as providing a guided exploration strategy for the agent. We evaluate our algorithm against the original Q-learning and the Influence Zone algorithms in static and dynamic environments.

Similar content being viewed by others

Notes

By “subsequent experiences”, we mean subsequent visits by the agent to those proceeding states.

The environment states are represented by nodes in the ITM map as will be discussed later.

In ε-greedy exploration, the agent selects the next action randomly with a probability of ε and based on the learned policy with a probability of 1 − ε.

References

Sutton RS (1991) Dyna, an integrated architecture for learning, planning, and reacting. ACM SIGART Bull 2(4):160–163

Moore AW, Atkeson CG (1993) Prioritized sweeping: reinforcement learning with less data and less time. Mach Learn 13(1):103–130

Hwang KS, Jiang WC, Chen YJ (2012) Tree-based Dyna-Q agent. The 2012 IEEE/ASME international conference on advanced intelligent mechatronics, Kaohsiung, Taiwan

Sutton RS (1988) Learning to predict by the methods of temporal differences. Mach Learn 3(1):9–44

Watkins CGCH (1989) Learning from delayed rewards. King’s College, Cambridge

Wiering M, Schmidhuber J (1998) Fast online Q(λ). Mach Learn 33(1):105–115

Kaelbling LP, Littman ML, Moore AW (1996) Reinforcement learning: a survey. J Artif Intell Res 4:237–285

Peng J, Williams RJ (1996) Incremental multi-step Q-learning. Mach Learn 22(1–3):283–290

Touzet CF (1997) Neural reinforcement learning for behaviour synthesis. Rob Auton Syst 22(3–4):251–281

Zeller M, Sharma R, Schulten K (1997) Motion planning of a pneumatic robot using a neural network. IEEE Control Syst 17(3):89–98

Busoniu L, Babuska R, Schutter BD, Ernst D (2010) Reinforcement learning and dynamic programming using function approximators. CRC Press, New York

Millán JDR, Posenato D, Dedieu E (2002) Continuous-action Q-learning. Mach Learn 49(2–3):247–265

Munos R, Szepesvári C (2008) Finite-time bounds for fitted value iteration. J Mach Learn Res 1:623–665

Dietterich TG (2000) Hierarchical reinforcement learning with the MAXQ value function decomposition. Artificial intelligence research

Takase N, Kubota N, Baba N (2012) Multi-scale Q-learning of a mobile robot in dynamic environments. SCIS-ISIS, Kobe

Braga APS, Araújo AFR (2003) A topological reinforcement learning agent for navigation. Neural Comput Appl 12:220–236

Braga APS, Araújo AFR (2006) Influence Zones: a strategy to enhance reinforcement learning. Neurocomputing 70(1–3):21–34

Dai P, Strehl AL, J. Goldsmith (2008) Expediting RL by using graphical structures. Proceedings of the seventh international joint conference on autonomous agents and multiagent systems

Sutton RS, Barto AG (1998) Introduction to reinforcement learning. MIT Press/Bradford Books, Cambridge

Sutton RS (1990) Integrated architectures for learning, planning, and reacting based on approximating dynamic programming. Proceedings of the seventh international conference on machine learning

Luciw M, Graziano V, Ring M, Schmidhuber J (2011) Artificial curiosity with planning for autonomous perceptual and cognitive development. Development and learning (ICDL), Frankfurt

Zahedi K, Martius G, Ay N (2013) Linear combination of one-step predictive information with an external reward in an episodic policy gradient setting: a critical analysis. Front Psychol. doi:10.3389/fpsyg.2013.00801

Remolina E, Kuipers B (2004) Towards a general theory of topological maps. Artif Intell 152(1):47–104

Thrun S, Buckenz A (1996) Integrating grid-based and topological maps for mobile robot navigation. Proceedings of the thirteenth national conference on artificial intelligence AAAI, Portland, Oregon

Kohonen T (1989) Self-organization and associative memory. Springer, Berlin

Kohonen T (2001) Self-organizing maps. Springer, Berlin

Martinetz T, Schulten K (1991) A neural-gas network learns topologies. Artif Neural Netw, Amsterdam, pp 397–402

Fritzke B (1995) A growing neural gas network learns topologies. Adv Neural Inf Process Syst 7:625–632

Jockusch J, Ritter H (1999) An Instantaneous Topological Mapping Model for correlated stimuli. Proceedings of the IJCNN’99, Washington, DC

Ng AY, Jordan M (2000) PEGASUS: a policy search method for large MDPs and POMDPs. Proceedings of the sixteenth conference on uncertainty in artificial intelligence, San Francisco

Acknowledgments

This research is supported by High Impact Research MoE Grant UM.C/625/1/HIR/MoE/FCSIT/10 from the Ministry of Education Malaysia.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Hafez, M.B., Loo, C.K. Topological Q-learning with internally guided exploration for mobile robot navigation. Neural Comput & Applic 26, 1939–1954 (2015). https://doi.org/10.1007/s00521-015-1861-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00521-015-1861-8