Abstract

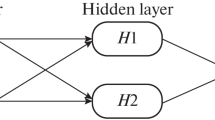

Nowadays, the application of neural networks in the manufacturing field has been widely investigated, even though this type of problem is typically characterized by insufficient data for robust network training. Satisfactory results have been reported in the literature on the use of neural networks as predictive tools in both forming and machining operations. Nevertheless, the search for the optimal network configuration is still based on trial-and-error approaches rather than on specific techniques. As a consequence, the best method for determining the optimal neural network configuration remains an open question in the literature. Accordingly, this work proposes a comparative analysis. More specifically, four different approaches have been used to improve the generalization ability of a neural network, based respectively on genetic algorithms, the Taguchi method, tabu search and decision trees. The parameters taken into account are the training algorithm, the number of hidden layers, and the number of neurons and the activation function of each hidden layer. These techniques were first tested on three datasets, generated through numerical simulations in the Deform2D environment, in an attempt to map the input–output relationship for an extrusion, a rolling and a shearing process. Subsequently, the same approach was validated on a fourth dataset, taken from the literature and describing a complex industrial process, in order to generalize and assess the proposed methodology across the whole manufacturing field. Four tests were carried out for each dataset, modifying the original data with random noise of zero mean and a standard deviation of one, two and five per cent. The results show that using a suitable technique to determine the architecture of a neural network can yield a significant performance improvement compared with a trial-and-error approach.
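The abstract describes a heuristic search over four architecture parameters, one of the compared heuristics being a genetic algorithm, with candidate networks evaluated on data perturbed by zero-mean noise. The Python sketch below illustrates that idea under stated assumptions. The option lists, the sharing of one neuron count and one activation across all hidden layers, the relative interpretation of the noise percentage and the `toy_validation_error` function are all hypothetical. The genetic operators (binary tournament, uniform crossover, per-gene mutation, elitism) are generic textbook choices, not the authors' configuration. In a real study the fitness function would train a network with the candidate configuration and return its validation error.

```python
import random

# Illustrative search space over the four parameters named in the abstract.
# Option values are assumptions; for brevity, one neuron count and one
# activation are shared by all hidden layers (the paper tunes them per layer).
SEARCH_SPACE = {
    "training_algorithm": ["levenberg_marquardt", "rprop", "bfgs"],
    "hidden_layers": [1, 2, 3],
    "neurons": [5, 10, 15, 20],
    "activation": ["tansig", "logsig", "purelin"],
}


def add_relative_noise(values, pct, rng):
    """Perturb each value with zero-mean Gaussian noise whose standard
    deviation is `pct` per cent of that value (assumed interpretation)."""
    return [v * (1.0 + rng.gauss(0.0, pct / 100.0)) for v in values]


def random_config(rng):
    return {k: rng.choice(opts) for k, opts in SEARCH_SPACE.items()}


def crossover(a, b, rng):
    # Uniform crossover: each gene is inherited from either parent with p = 0.5.
    return {k: a[k] if rng.random() < 0.5 else b[k] for k in SEARCH_SPACE}


def mutate(cfg, rate, rng):
    # Each gene is resampled from its option list with probability `rate`.
    return {k: rng.choice(SEARCH_SPACE[k]) if rng.random() < rate else v
            for k, v in cfg.items()}


def tournament(population, fitness, rng):
    # Binary tournament: the lower-error of two random individuals wins.
    a, b = rng.sample(population, 2)
    return a if fitness(a) <= fitness(b) else b


def genetic_search(fitness, pop_size=20, generations=30, mutation_rate=0.1, seed=0):
    """Minimise `fitness` (e.g. validation error) over SEARCH_SPACE."""
    rng = random.Random(seed)
    population = [random_config(rng) for _ in range(pop_size)]
    best = min(population, key=fitness)
    for _ in range(generations):
        children = [best]  # elitism: the best configuration so far survives
        while len(children) < pop_size:
            parent_a = tournament(population, fitness, rng)
            parent_b = tournament(population, fitness, rng)
            child = crossover(parent_a, parent_b, rng)
            children.append(mutate(child, mutation_rate, rng))
        population = children
        best = min(population, key=fitness)
    return best


def toy_validation_error(cfg):
    """Hypothetical stand-in for 'train the network on the (noisy) data and
    return its validation error'; replace with a real training run."""
    algo_penalty = {"levenberg_marquardt": 0.0, "rprop": 0.1, "bfgs": 0.05}
    return (algo_penalty[cfg["training_algorithm"]]
            + 0.02 * (cfg["hidden_layers"] - 1)
            + 0.01 * abs(cfg["neurons"] - 10)
            + (0.0 if cfg["activation"] == "tansig" else 0.03))


if __name__ == "__main__":
    noisy_targets = add_relative_noise([120.0, 95.5, 210.3], 2, random.Random(42))
    print(noisy_targets)
    print(genetic_search(toy_validation_error))
```

Because each fitness evaluation would be a full training run in practice, the population size and number of generations mainly trade search quality against computational cost. The elitist step guarantees that the best error found never worsens from one generation to the next.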




Cite this article

Ciancio, C., Ambrogio, G., Gagliardi, F. et al. Heuristic techniques to optimize neural network architecture in manufacturing applications. Neural Comput & Applic 27, 2001–2015 (2016). https://doi.org/10.1007/s00521-015-1994-9


DOI: https://doi.org/10.1007/s00521-015-1994-9