Abstract

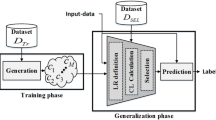

Dynamic ensemble selection (DES) techniques select only the most competent classifiers to predict the class label of each specific test sample. The key step is estimating the competence of the base classifiers for that sample. Competence is usually estimated according to a given criterion computed over the region of competence, that is, the neighborhood of the test sample defined on the validation data. A problem arises when the validation data contain a high degree of noise, so that the samples in the region of competence do not represent the query sample. In such cases, the dynamic selection technique might select a base classifier that overfitted the local region rather than the one with the best generalization performance. In this paper, we propose two modifications to improve the generalization performance of any DES technique. First, a prototype selection technique is applied to the validation data to reduce the overlap between the classes, producing smoother decision borders. Second, during generalization, a local adaptive K-Nearest Neighbor algorithm is used to minimize the influence of noisy samples in the region of competence. Together, these changes allow DES techniques to better estimate the classifiers’ competence. Experiments are conducted using 10 state-of-the-art DES techniques over 30 classification problems. The results demonstrate that the proposed scheme significantly improves the classification accuracy of dynamic selection techniques.
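The two modifications described in the abstract can be illustrated with a small sketch. The abstract does not name the specific algorithms, so everything here is an assumption: Wilson-style edited nearest neighbor stands in for the prototype selection step, a distance scaled by each sample's radius to its nearest other-class sample stands in for the local adaptive K-Nearest Neighbor, and plain local accuracy stands in for whichever DES competence criterion is used. All function names, the toy data, and the two toy classifiers are hypothetical.

```python
import numpy as np

def edited_nearest_neighbor(X, y, k=3):
    """Assumed prototype selection: drop samples outvoted by their k neighbors."""
    keep = []
    for i in range(len(X)):
        dist = np.linalg.norm(X - X[i], axis=1)
        dist[i] = np.inf  # a sample is not its own neighbor
        neighbors = np.argsort(dist, kind="stable")[:k]
        if np.bincount(y[neighbors]).argmax() == y[i]:
            keep.append(i)
    return X[keep], y[keep]

def adaptive_region(X, y, query, k=3):
    """Assumed adaptive K-NN: indices of the k nearest samples under a
    distance divided by each sample's radius (distance to the nearest
    sample of another class). Assumes no zero radius, i.e. no identical
    points with different labels."""
    pairwise = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    other_class = y[:, None] != y[None, :]
    radius = np.where(other_class, pairwise, np.inf).min(axis=1)
    scaled = np.linalg.norm(X - query, axis=1) / radius
    return np.argsort(scaled, kind="stable")[:k]

def select_classifier(pool, X_region, y_region):
    """Stand-in competence criterion: highest accuracy in the region."""
    accuracy = [np.mean(clf(X_region) == y_region) for clf in pool]
    return int(np.argmax(accuracy))

# Toy 1-D validation set; the sample at -2.0 carries a noisy label.
X_val = np.array([[-3.0], [-2.5], [-2.0], [-1.5], [-1.0],
                  [1.0], [1.5], [2.0], [2.5], [3.0]])
y_val = np.array([0, 0, 1, 0, 0, 1, 1, 1, 1, 1])

def smooth(X):
    # Hypothetical classifier with a simple, well-generalizing border.
    return (X[:, 0] > 0).astype(int)

def overfit(X):
    # Hypothetical classifier that memorized the noisy sample
    # and also errs on the far left.
    x = X[:, 0]
    return ((x > 0) | (np.abs(x + 2.0) < 0.3) | (x < -2.8)).astype(int)

pool = [smooth, overfit]
query = np.array([-2.1])

# Baseline: plain K-NN region of competence on the raw validation data.
raw_region = np.argsort(np.linalg.norm(X_val - query, axis=1), kind="stable")[:3]
raw_choice = select_classifier(pool, X_val[raw_region], y_val[raw_region])

# Sketch of the proposed scheme: edit the validation data, then use the
# adaptive distance to build the region of competence.
X_ed, y_ed = edited_nearest_neighbor(X_val, y_val, k=3)
region = adaptive_region(X_ed, y_ed, query, k=3)
new_choice = select_classifier(pool, X_ed[region], y_ed[region])

print("baseline selects:", pool[raw_choice].__name__)
print("edited + adaptive selects:", pool[new_choice].__name__)
```

In this toy setup the noisy sample falls inside the baseline region of competence, so the classifier that memorized it looks most competent; after editing, the region contains only consistently labeled samples and the simpler classifier is selected. This is only meant to show the mechanism and says nothing about the size of the effect reported in the paper.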

Notes

The term base classifier refers to a single classifier belonging to an ensemble or a pool of classifiers.


Acknowledgments

This work was supported by the Natural Sciences and Engineering Research Council of Canada (NSERC), the École de technologie supérieure (ÉTS Montréal) and CNPq (Conselho Nacional de Desenvolvimento Científico e Tecnológico).


Cite this article

Cruz, R.M.O., Sabourin, R. & Cavalcanti, G.D.C. Prototype selection for dynamic classifier and ensemble selection. Neural Comput & Applic 29, 447–457 (2018). https://doi.org/10.1007/s00521-016-2458-6


DOI: https://doi.org/10.1007/s00521-016-2458-6