Abstract

Learning partial differential equations (LPDEs) from training data for particular tasks has been successfully applied to many image processing problems. In this paper, we propose a more effective LPDE model for vector-valued image tasks. As in existing LPDE systems, the PDEs are formulated as a linear combination of fundamental differential invariants, but our model differs in three respects. First, we simplify the current LPDE system by omitting the PDE that acts as an indicator function. Second, we regularize the coefficients of the fundamental differential invariants with the \(L_1\)-norm instead of the \(L_2\)-norm, which encourages sparse coefficients. Third, because the resulting objective function is nonsmooth, we optimize it with the alternating direction method. We illustrate the properties of our LPDE system with several examples of denoising and demosaicking RGB color images. The experiments demonstrate the advantage of the proposed method over other PDE-based methods.
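The combination of an \(L_1\) penalty on the coefficients with an alternating direction solver can be illustrated on a much simpler stand-in problem. The sketch below applies standard scaled-form ADMM to a lasso problem \(\min_{\mathbf a}\tfrac12\|X\mathbf a-\mathbf y\|_2^2+\lambda\|\mathbf a\|_1\), where a hypothetical matrix `X` plays the role of invariant values and `y` the role of target outputs. It is not the authors' solver (their objective is constrained by the PDE system); the names `admm_lasso`, `soft_threshold`, `rho`, and `lam` are our own illustrative choices.

```python
import numpy as np


def soft_threshold(v, kappa):
    """Proximal operator of kappa * ||.||_1: elementwise shrinkage toward 0."""
    return np.sign(v) * np.maximum(np.abs(v) - kappa, 0.0)


def admm_lasso(X, y, lam, rho=1.0, n_iter=1000, tol=1e-8):
    """Minimize 0.5 * ||X a - y||^2 + lam * ||a||_1 with scaled-form ADMM.

    The variable is split into a (least-squares part) and z (L1 part) with
    the consistency constraint a = z; u is the scaled dual variable.
    """
    n = X.shape[1]
    z = np.zeros(n)
    u = np.zeros(n)
    # The a-update solves (X^T X + rho I) a = X^T y + rho (z - u). The matrix
    # does not change between iterations, so its Cholesky factor is reused.
    L = np.linalg.cholesky(X.T @ X + rho * np.eye(n))
    Xty = X.T @ y
    for _ in range(n_iter):
        a = np.linalg.solve(L.T, np.linalg.solve(L, Xty + rho * (z - u)))
        z_old = z
        z = soft_threshold(a + u, lam / rho)  # prox step for the L1 term
        u = u + a - z                         # dual update for a = z
        primal_res = np.linalg.norm(a - z)
        dual_res = rho * np.linalg.norm(z - z_old)
        if primal_res < tol and dual_res < tol:
            break
    return z


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # 37 columns mirror 37 invariant coefficients; the data are synthetic.
    X = rng.standard_normal((100, 37))
    a_true = np.zeros(37)
    a_true[[0, 5, 12]] = [1.5, -2.0, 0.8]
    y = X @ a_true + 0.01 * rng.standard_normal(100)
    a_hat = admm_lasso(X, y, lam=1.0, rho=50.0)
    print("nonzero coefficients:", np.flatnonzero(a_hat))
```

The soft-thresholding step is what produces exact zeros in the coefficient vector, which is the practical payoff of the \(L_1\) penalty. The penalty `rho` affects only the convergence speed, not the minimizer; the value used in the demo is a hand-picked assumption.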

Notes

The images are padded with a border of zeros several pixels wide, so that the Dirichlet boundary conditions \(u_{m}(x,y,t)=0\), \(v_{m}(x,y,t)=0\), \((x,y,t)\in \varGamma\), are naturally satisfied.

The images are padded with a border of zeros several pixels wide, so that the Dirichlet boundary conditions \(u^c_{m}(x,y,t)=0\), \((x,y,t)\in \varGamma\), are naturally satisfied.
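Zero padding of this kind is a one-line operation in NumPy. A minimal sketch, assuming a channel-last RGB array and a hypothetical default pad width of 3 pixels (the notes only say "several pixels"); the helper names are our own:

```python
import numpy as np


def pad_with_zeros(image, width=3):
    """Surround each channel of an H x W x C image with a border of zeros.

    `width` is an illustrative value, not one taken from the paper.
    """
    if width < 1:
        raise ValueError("width must be at least 1")
    pad = ((width, width), (width, width), (0, 0))  # leave the channel axis alone
    return np.pad(image, pad, mode="constant", constant_values=0)


def crop_padding(image, width=3):
    """Remove the border added by pad_with_zeros."""
    return image[width:-width, width:-width, :]
```

Under our assumption that a time-stepping solver updates only interior pixels, the padded frame stays at zero for every \(t\), which is the discrete counterpart of the condition on \(\varGamma\).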

Acknowledgements

The authors acknowledge support from the NSFC (Nos. 61471369 and 61503396) and the Open Research Foundation of the State Key Laboratory of Astronautic Dynamics (Nos. 2013ADL-DW0101 and 2014ADL-DW0102).

Appendix

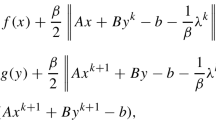

When each of them attains its minimum, the functional \(J(\mathbf {u}_m,\mathbf {a})\) in (8) attains its minimum. For brevity, we write \(u^c_m\) as \(\varphi\) and fix \(u^k_m\) \((k\ne c)\) in the derivation that follows. We divide \(J(\varphi ,\mathbf {a})\) into two parts:

For the first part, we have

For the second part, we first define \(F(\varphi ,\mathbf {a})=\sum _{i=0}^{36}a^c_i (t)\mathbf {inv}_i (\varphi )\). Then we get

where \(Q=\varOmega \times [0,T]\) and \(\mathrm{d}Q=\mathrm{d}\varOmega\,\mathrm{d}t\).
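To make the definition of \(F\) concrete: it is a time-dependent linear combination of differential invariants of \(\varphi\), evaluated pointwise. The sum runs over 37 invariants (\(i=0,\dots,36\)), which are not listed in this section, so the sketch below substitutes four hypothetical scalar invariants (\(1\), \(\varphi\), \(|\nabla\varphi|^2\), \(\Delta\varphi\)) computed with central differences. The function names, the invariant set, and the explicit Euler step are illustrative assumptions, not the paper's discretization.

```python
import numpy as np


def example_invariants(phi, h=1.0):
    """Stack of illustrative invariants [1, phi, |grad phi|^2, lap phi].

    These are hypothetical stand-ins for inv_i. Derivatives use central
    differences on the interior; border values are left at 0.
    """
    phi_x = np.zeros_like(phi)
    phi_y = np.zeros_like(phi)
    lap = np.zeros_like(phi)
    phi_x[1:-1, 1:-1] = (phi[1:-1, 2:] - phi[1:-1, :-2]) / (2 * h)
    phi_y[1:-1, 1:-1] = (phi[2:, 1:-1] - phi[:-2, 1:-1]) / (2 * h)
    lap[1:-1, 1:-1] = (phi[1:-1, 2:] + phi[1:-1, :-2] + phi[2:, 1:-1]
                       + phi[:-2, 1:-1] - 4 * phi[1:-1, 1:-1]) / h**2
    return np.stack([np.ones_like(phi), phi, phi_x**2 + phi_y**2, lap])


def F(phi, a_t):
    """F(phi, a) = sum_i a_i(t) * inv_i(phi), with coefficients a_t at one time."""
    return np.tensordot(a_t, example_invariants(phi), axes=1)


def forward_euler_step(phi, a_t, dt):
    """One explicit step of phi_t = F(phi, a); the border is held at zero."""
    out = phi + dt * F(phi, a_t)
    out[0, :] = out[-1, :] = out[:, 0] = out[:, -1] = 0.0
    return out
```

With `a_t = [0, 0, 0, 1]` the step reduces to the heat equation; a sparse coefficient vector simply switches most invariants off.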

We first compute \(F(\varphi +\varepsilon \delta \varphi ,\mathbf {a})-F(\varphi ,\mathbf {a})\), which equals

where

From the above formulations, we obtain

Since the perturbation \(\delta \varphi\) must satisfy \(\delta \varphi |_{\varGamma }=0\) and \(\delta \varphi |_{t=0}=0\), integrating by parts gives

If \(\frac{\mathrm {D}L}{\mathrm {D}\varphi }\) exists, the boundary conditions must satisfy the following equation:

Then we obtain the Gâteaux derivative

At the minimum of the functional, \(u^c_m\) must satisfy the following PDE with the initial condition \(u^c_m|_{t=0}=I^c_m\):
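The step from integration by parts to the Gâteaux derivative can be checked numerically on a simple functional, a common way to validate a hand-derived derivative before using it in an optimizer. Since \(J\) itself is not reproduced here, the sketch uses a hypothetical stand-in, the discrete Dirichlet energy \(E(\varphi)=\tfrac12\sum|\nabla_h\varphi|^2\) with zero boundary values. Because the perturbation vanishes on the boundary, summation by parts yields the derivative \(-\Delta_h\varphi\) with no boundary term, so the quotient \((E(\varphi+\varepsilon\,\delta\varphi)-E(\varphi))/\varepsilon\) should approach \(\langle-\Delta_h\varphi,\delta\varphi\rangle\) as \(\varepsilon\to 0\). All function names are our own.

```python
import numpy as np


def dirichlet_energy(phi):
    """E(phi) = 0.5 * sum of squared differences between 4-neighbours.

    `phi` holds interior values only; np.pad surrounds it with zeros, which
    plays the role of the Dirichlet condition phi = 0 on the boundary.
    """
    p = np.pad(phi, 1)  # default mode="constant" pads with zeros
    dx = np.diff(p, axis=1)
    dy = np.diff(p, axis=0)
    return 0.5 * (np.sum(dx**2) + np.sum(dy**2))


def energy_gradient(phi):
    """Derivative of E from summation by parts: minus the 5-point Laplacian.

    No boundary term appears because the perturbation is zero outside `phi`.
    """
    p = np.pad(phi, 1)
    lap = (p[1:-1, 2:] + p[1:-1, :-2] + p[2:, 1:-1] + p[:-2, 1:-1]
           - 4.0 * p[1:-1, 1:-1])
    return -lap


def gateaux_quotient(energy, phi, dphi, eps):
    """One-sided difference quotient (E(phi + eps*dphi) - E(phi)) / eps."""
    return (energy(phi + eps * dphi) - energy(phi)) / eps


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    phi = rng.standard_normal((8, 9))
    dphi = rng.standard_normal((8, 9))
    exact = np.sum(energy_gradient(phi) * dphi)  # <DE/Dphi, dphi>
    for eps in (1e-1, 1e-3, 1e-5):
        q = gateaux_quotient(dirichlet_energy, phi, dphi, eps)
        print(f"eps={eps:g}  |quotient - exact| = {abs(q - exact):.2e}")
```

Because \(E\) is quadratic, the error of the one-sided quotient is exactly \(\varepsilon E(\delta\varphi)\) and shrinks linearly with \(\varepsilon\); a derivative with a sign error or a dropped boundary term would leave an error that does not vanish.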

About this article

Cite this article

Jiao, Y., Pan, X., Zhao, Z. et al. Learning sparse partial differential equations for vector-valued images. Neural Comput & Applic 29, 1205–1216 (2018). https://doi.org/10.1007/s00521-016-2623-y
