Abstract

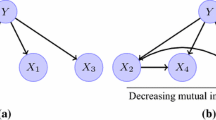

Latent dependency forest models (LDFMs) are a new type of probabilistic model with dynamic dependency structures over random variables. What distinguishes them from other probabilistic models is that learning them requires no structure search. However, parameter learning for LDFMs remains challenging because the partition function cannot be computed tractably. In this paper, we investigate and empirically compare several algorithms for learning LDFM parameters that either approximate or ignore the partition function in the learning objective. Furthermore, we propose an approximate algorithm for estimating the partition function of an LDFM. Experimental results show that (1) our learning algorithms can achieve better results than the previous LDFM learning algorithm, and (2) our partition function estimation algorithm is accurate.
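
Why the partition function is the bottleneck can be illustrated with a small sketch. It assumes a simplified LDFM-style model: the unnormalized score of an assignment x is the sum, over all dependency forests (spanning arborescences rooted at a virtual root node 0), of the product of edge weights, where each edge weight depends on the values of the variables it connects. Under that assumption the inner sum over forests can be computed in polynomial time with the directed matrix-tree theorem, while the exact partition function Z still sums that score over all k^n assignments. The parameter layout (`root_w`, `edge_w`) and every function name below are hypothetical; this is an illustration, not the authors' learning or partition-function estimation algorithm.

```python
import itertools
import random


def det(matrix):
    """Determinant via Gaussian elimination with partial pivoting."""
    a = [row[:] for row in matrix]
    n = len(a)
    result = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-15:
            return 0.0
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result  # a row swap flips the sign
        result *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return result


def edge_weight(params, x, i, j):
    """Hypothetical weight of directed edge i -> j; node 0 is the virtual root."""
    root_w, edge_w = params
    if i == 0:
        return root_w[j - 1][x[j - 1]]
    return edge_w[i - 1][j - 1][x[i - 1]][x[j - 1]]


def unnormalized_score(params, x):
    """Sum over all forests of edge-weight products (directed matrix-tree theorem)."""
    n = len(x)
    lap = [[0.0] * n for _ in range(n)]
    for j in range(1, n + 1):
        for i in range(n + 1):
            if i == j:
                continue
            w = edge_weight(params, x, i, j)
            lap[j - 1][j - 1] += w  # total incoming weight on the diagonal
            if i > 0:
                lap[i - 1][j - 1] -= w  # root row/column is left out
    return det(lap)


def _reaches_root(parents, j):
    """True if following parent pointers from node j reaches the root without a cycle."""
    seen = set()
    while j != 0:
        if j in seen:
            return False
        seen.add(j)
        j = parents[j - 1]
    return True


def brute_force_score(params, x):
    """Same quantity by enumerating every acyclic parent assignment (tiny n only)."""
    n = len(x)
    choices = [[p for p in range(n + 1) if p != j] for j in range(1, n + 1)]
    total = 0.0
    for parents in itertools.product(*choices):
        if all(_reaches_root(parents, j) for j in range(1, n + 1)):
            prod = 1.0
            for j, p in enumerate(parents, start=1):
                prod *= edge_weight(params, x, p, j)
            total += prod
    return total


def partition_function(params, n, k):
    """Exact Z: one determinant per assignment, k**n assignments in total."""
    return sum(unnormalized_score(params, x)
               for x in itertools.product(range(k), repeat=n))


def random_params(n, k, seed=0):
    """Random positive weights; edge_w[i][i] entries are never used."""
    rng = random.Random(seed)
    root_w = [[rng.uniform(0.1, 1.0) for _ in range(k)] for _ in range(n)]
    edge_w = [[[[rng.uniform(0.1, 1.0) for _ in range(k)] for _ in range(k)]
               for _ in range(n)] for _ in range(n)]
    return root_w, edge_w


if __name__ == "__main__":
    n, k = 4, 2
    params = random_params(n, k)
    x = (0, 1, 1, 0)
    print(unnormalized_score(params, x), brute_force_score(params, x))
    print("P(x) =", unnormalized_score(params, x) / partition_function(params, n, k))
```

Each call to `unnormalized_score` costs one n-by-n determinant, so scoring a single assignment is cheap; only the sum over all assignments inside `partition_function` grows exponentially with n. That exact Z is usable only for very small models, which is why, in this sketch's framing, learning objectives that approximate or ignore Z and dedicated Z estimators become necessary.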

Funding

This work was supported by the National Natural Science Foundation of China (Grant No. 61503248).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Jiang, Y., Zhou, Y. & Tu, K. Learning and evaluation of latent dependency forest models. Neural Comput & Applic 31, 6795–6805 (2019). https://doi.org/10.1007/s00521-018-3504-3

DOI: https://doi.org/10.1007/s00521-018-3504-3