Abstract

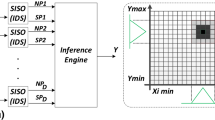

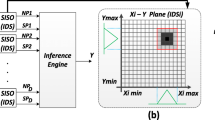

Active Learning Method (ALM) is one of the powerful tools in soft computing and it is inspired by the human brain capabilities in approaching complicated problems. ALM, which is in essence an adaptive fuzzy learning algorithm, tries to model a Multi-Input Single-Output system with several single-input single-output subsystems. Each of these subsystems is then modeled by an ink drop spread (IDS) plane. IDS operator, which is the main processing engine of ALM, extracts two kinds of informative features, Narrow Path and Spread, from each IDS plane without complicated computations. These features from all IDS planes are then aggregated in the inference engine. Despite the great performance of ALM in different applications, an efficient hardware implementation has remained a challenge, which is mainly due to considerably high memory requirement of IDS operation. In this paper, in a novel approach to IDS operation, we propose an abstract representation of the IDS planes which minimizes the memory requirement and the computational cost, and consequently, benefits the hardware implementation in terms of area and speed. The proposed approach is fully compatible with memristor-crossbar implementation with an adaptive learning capability. Simpler learning algorithm and higher speed make our proposed algorithm suitable for applications where real-time process, low-cost and small implementation are of high priority. Applications in the classification of real-world datasets and function approximation are provided to confirm the effectiveness of the algorithm. Eventually, the paper concludes that the proposed computing structure provides a synergy between artificial neural networks and fuzzy domains.

Similar content being viewed by others

References

Zadeh LA (1994) Fuzzy logic, neural networks, and soft computing. Commun ACM 37(3):77–84

Shouraki SB (2000) A novel fuzzy approach to modeling and control and its hardware implementation based on brain functionality and specifications. The University of Electro-Communication, Chofu-Tokyo

Shouraki SB, Honda N (1999) Simulation of brain learning process through a novel fuzzy hardware approach. In: 1999 IEEE international conference and proceedings on IEEE SMC’99 systems, man, and cybernetics, 1999. IEEE

Shouraki SB, Honda N, Yuasa G (1999) Fuzzy interpretation of human intelligence. Int J Uncertain Fuzziness Knowl Based Syst 7(04):407–414

Sugeno M, Yasukawa T (1993) A fuzzy-logic-based approach to qualitative modeling. IEEE Trans Fuzzy Syst 1(1):7–31

Takagi T, Sugeno M (1985) Fuzzy identification of systems and its applications to modeling and control. Syst Man Cybern IEEE Trans 1:116–132

Jang J-SR (1993) ANFIS: adaptive-network-based fuzzy inference system. Syst Man Cybern IEEE Trans 23(3):665–685

Murakami M, Honda N (2007) A study on the modeling ability of the IDS method: a soft computing technique using pattern-based information processing. Int J Approx Reason 45(3):470–487

Shouraki SB, Honda N (1998) Fuzzy controller design by an active learning method. In: 31th symposium of intelligent control

Shahdi SA, Shouraki SB (2002) Supervised active learning method as an intelligent linguistic controller and its hardware implementation. In: 2nd IASTEAD international conference on artificial intelligence and applications (AIA’02), Malaga, Spain

Sakurai Y, Honda N, Nishino J (2003) Acquisition of control knowledge of nonholonomic system by active learning method. In: IEEE international conference on systems, man and cybernetics, 2003. IEEE

Sagha H, Afrakoti IEP, Bagherishouraki S (2013) Actor-critic-based ink drop spread as an intelligent controller. Turk J Electr Eng Comput Sci 21(4):1015–1034

Sagha H, et al (2008) Real-time IDS using reinforcement learning. In: Second international symposium on intelligent information technology application, 2008. IITA’08. IEEE

Klidbary SH, et al (2017) Outlier robust fuzzy active learning method (ALM). In: 7th international conference on computer and knowledge engineering (ICCKE), 2017. IEEE

Firouzi M, Shouraki SB, Afrakoti IEP (2014) Pattern analysis by active learning method classifier. J Intell Fuzzy Syst 26(1):49–62

Shahraiyni TH et al (2007) Application of the Active Learning Method for the estimation of geophysical variables in the Caspian Sea from satellite ocean colour observations. Int J Remote Sens 28(20):4677–4683

Murakami M, Honda N, Nishino J (2004) A high performance IDS processing unit for a new fuzzy-based modeling. In: IEEE international conference and proceedings on fuzzy systems, 2004. IEEE

Firouzi M, et al (2010) A novel pipeline architecture of replacing ink drop spread. In: 2010 second world congress on nature and biologically inspired computing (NaBIC). IEEE

Tarkhan M, Shouraki SB, Khasteh SH (2009) A novel hardware implementation of IDS method. IEICE Electron Express 6(23):1626–1630

Merrikh-Bayat F, Shouraki SB, Rohani A (2011) Memristor crossbar-based hardware implementation of the IDS method. Fuzzy Syst IEEE Trans 19(6):1083–1096

Afrakoti IEP, Shouraki SB, Haghighat B (2014) An optimal hardware implementation for active learning method based on memristor crossbar structures. Syst J IEEE 8(4):1190–1199

Chua LO (1971) Memristor-the missing circuit element. Circuit Theory IEEE Trans 18(5):507–519

Chua LO, Kang SM (1976) Memristive devices and systems. Proc IEEE 64(2):209–223

Strukov DB et al (2008) The missing memristor found. Nature 453(7191):80–83

Eshraghian K et al (2011) Memristor MOS content addressable memory (MCAM): hybrid architecture for future high performance search engines. IEEE Trans Very Large Scale Integr VLSI Syst 19(8):1407–1417

Snider G et al (2011) From synapses to circuitry: using memristive memory to explore the electronic brain. Computer 2:21–28

Klidbary SH, Shouraki SB, Afrakoti IEP (2016) Fast IDS computing system method and its memristor crossbar-based hardware implementation. arXiv:1602.06787

Waser R, Aono M (2007) Nanoionics-based resistive switching memories. Nat Mater 6(11):833–840

Bavandpour M et al (2014) Spiking neuro-fuzzy clustering system and its memristor crossbar based implementation. Microelectron J 45(11):1450–1462

Prezioso M et al (2015) Training and operation of an integrated neuromorphic network based on metal-oxide memristors. Nature 521(7550):61–64

Saïghi S et al (2015) Plasticity in memristive devices for spiking neural networks. Front Neurosci 9:51

Prezioso M et al (2016) Self-adaptive spike-time-dependent plasticity of metal-oxide memristors. Sci Rep 6:21331

Li T et al (2016) A spintronic memristor-based neural network with radial basis function for robotic manipulator control implementation. IEEE Trans Syst Man Cybern Syst 46(4):582–588

Li T, et al (2016) An improved design of RBF neural network control algorithm based on spintronic memristor crossbar array. Neural Comput Appl 1–8

Perez-Carrasco J, et al (2010) On neuromorphic spiking architectures for asynchronous STDP memristive systems. In: Proceedings of 2010 IEEE international symposium on circuits and systems (ISCAS). IEEE

Pershin YV, La Fontaine S, Di Ventra M (2009) Memristive model of amoeba learning. Phys Rev E 80(2):021926

Bayat FM, Shouraki SB (2015) Nonlinear behavior of memristive devices during tuning process and its impact on STDP learning rule in memristive neural networks. Neural Comput Appl 26(1):67–75

Kuekes P (2008) Material implication: digital logic with memristors. In: A presentation in the memristor and memristive systems symposium at UC Berkeley

Raja T, Mourad S (2009) Digital logic implementation in memristor-based crossbars. In: IEEE international conference on communications, circuits and systems, 2009 (ICCCAS)

Shin S, Kim K, Kang S-M (2009) Memristor-based fine resolution programmable resistance and its applications. In: International conference on communications, circuits and systems, 2009 (ICCCAS 2009), IEEE

Pershin YV, Ventra MD (2010) Practical approach to programmable analog circuits with memristors. Circuits Syst I Regul Pap IEEE Trans 57(8):1857–1864

Merrikh-Bayat F, Shouraki SB (2010) Memristor-based circuits for performing basic arithmetic operations. arXiv:1008.3452

Merrikh-Bayat F, Bagheri-Shouraki S (2011) Mixed analog-digital crossbar-based hardware implementation of sign–sign LMS adaptive filter. Analog Integr Circ Sig Process 66(1):41–48

Cho K, Lee S-J, Eshraghian K (2015) Memristor-CMOS logic and digital computational components. Microelectron J 46(3):214–220

Truong SN et al (2015) New twin crossbar architecture of binary memristors for low-power image recognition with discrete cosine transform. IEEE Trans Nanotechnol 14(6):1104–1111

Hasan R, Taha TM, Yakopcic C (2017) On-chip training of memristor crossbar based multi-layer neural networks. Microelectron J 66:31–40

Klidbary SH, Shouraki SB (2018) A novel adaptive learning algorithm for low-dimensional feature space using memristor-crossbar implementation and on-chip training. Appl Intell. https://doi.org/10.1007/s10489-018-1202-6

Mouttet B (2009) Proposal for memristors in signal processing. nano-net. Springer, Berlin, pp 11–13

Sheridan PM et al (2017) Sparse coding with memristor networks. Nature Nanotechnol 12:784–789

Kolka Z, Biolek D, Biolkova V (2015) Improved model of TiO2 memristor. Radioengineering 24(2):378–383

Biolek D et al (2015) Reliable modeling of ideal generic memristors via state-space transformation. Radioengineering 24(2):393–407

Naous R, Al-Shedivat M, Salama KN (2016) Stochasticity modeling in memristors. IEEE Trans Nanotechnol 15(1):15–28

Juang C-F, Tsao Y-W (2008) A type-2 self-organizing neural fuzzy system and its FPGA implementation. Syst Man Cybern Part B Cybern IEEE Trans 38(6):1537–1548

Yi Y et al (2016) FPGA based spike-time dependent encoder and reservoir design in neuromorphic computing processors. Microprocess Microsyst 46:175–183

Afrakoti IEP et al (2017) Using a memristor crossbar structure to implement a novel adaptive real-time fuzzy modeling algorithm. Fuzzy Sets Syst 307:115–128

Lang KJ (1988) Learning to tell two spirals apart. In: Proceedings of 1988 connectionist models summer school, Pittsburgh, PA

Sagha H, et al (2008) Genetic ink drop spread. In: Second international symposium on intelligent information technology application, IITA’08. IEEE

Acknowledgments

The authors would like to thank Soroush Sheikhpour Kourabbaslou and Mohammad Bavandpour for their kind discussions. The first author is grateful to Iran National Science Foundation (INSF), which has partially supported the present research (Grant No. 96000943).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Appendix A: Proof of convergence

Appendix A: Proof of convergence

As it has also been discussed in the body of paper, the training mode in the original ALM algorithm is Batch-Mode, and in our proposed algorithm is Sample-Mode. This modification in training mode is the main reason for the increase in the training speed and the decrease in the required memory space and hardware implementation. Convergence occurs in both modes, but not necessarily equivalent. As the computing space has uncertainty (fuzzy space), this error and different equivalency do not significantly affect the final result. In this computing space, it cannot be said that the output has become better or worse (but the average of convergence of Batch-based algorithm is better than that of Sample-Based). In order to prove the convergence of \(c_{\text{NP}}\) vector, first, we show the change in one element of this vector when different training inputs are introduced:

Where, each training input is shown as pair \((x_{t} ,y_{t} )\), I is the index of the vector’s elements for updating, \(K_{I}\) is the total number of training samples in element I, \(\alpha_{2}\) is learning rate, \(x_{t}\) is the input that vary between one and the maximum of the quantization level of \(X\) axis (\(k_{Rsnx}\)). For each training input associated with each quantization level, following equation holds:

\(K\) is the total number of training samples in an IDS plane (number of iterations). For the benefit of simplicity and less computations, if \(x_{t} = I\), the previous equation can be simplified as follows:

If we expand the summation, following equations can be obtained:

Eventually, a recursive equation can be obtained as follows:

If \(K_{I} \gg 1,\) in the previous equation, the first term of the equation tends to zero, and by variable replacement as \(p = k_{I} - i\) following equation can be obtained:

This equation shows that the final output of the algorithm converges to the weighted sum of outputs. With regard to forgetting property introduced in the proposed method, the impact of training samples that were shown later in the training phase is higher than that of training samples that were shown to the algorithm earlier. If \(y_{{k_{I} - p}}\) are the same, the previous equation is a geometric series which converges to \(y_{{k_{I} - p}}\) eventually. It should be mentioned that because of the fact that ALM and EMALM algorithms partition the inputs domains. This results in lower spread in inputs \(y_{{k_{I} - p}}\) and consequently lower range of variation in \(y_{{k_{I} - p}}\). Therefore, for the mentioned recursive algorithm, the standard deviation of Narrow Path is small and because the computations are conducted in the space with uncertainty and random choice of \(y_{{k_{I} - p}}\), this error and lack of equivalency do not significantly affect the final result.

With regard to assumptions we made about the convergence of describing vectors, the value of spread would be:

If \(c_{{{\text{UB}}K_{I} + 1}}^{I} - c_{{{\text{LB}}K_{I} + 1}}^{I} = SP_{{K_{I} + 1}}^{I}\) is inserted in the previous equations, we would have:

For some values of \(k_{I}\), we would have:

Eventually, a recursive equation can be obtained as follows:

This equation shows that as the number of training samples (or the number of iterations) increases, the value of spread decreases, and eventually our belief to the occurrence of that event (\(C_{\text{NP}}\) vector) increases.

Rights and permissions

About this article

Cite this article

Klidbary, S.H., Shouraki, S.B. & Afrakoti, I.E.P. An adaptive efficient memristive ink drop spread (IDS) computing system. Neural Comput & Applic 31, 7733–7754 (2019). https://doi.org/10.1007/s00521-018-3604-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00521-018-3604-0