Abstract

This paper proposes a method, based on the asymmetry index (AsI), for evaluating the degree to which emotion is elicited by continuous music videos. Two groups of electroencephalogram (EEG) signals are collected from 6 channels (Fp1, Fp2, Fz and AF3, AF4, Fz) in the left and right hemispheres, and multidimensional directed information is used to measure the mutual information shared between the two frontal lobes; the AsI derived from this measure estimates the degree of emotional induction. To evaluate the effect of AsI processing on physiological emotion recognition, 32-channel EEG signals, 2-channel EEG signals and 2-channel EMG signals are selected for each subject from the DEAP dataset, and different sub-bands are extracted using the wavelet packet transform. The k-means algorithm is used to cluster the wavelet packet coefficients of each sub-band, and the probability distribution of the coefficients over the clusters is calculated. Finally, the probability distribution values of each sample are fed as the original features into an echo state network (ESN) for unsupervised intrinsic plasticity training; the reservoir state nodes are selected as the final feature vector and fed into a support vector machine. The experimental results show that the proposed algorithm achieves an average recognition rate of 70.5% in the subject-independent setting. Compared with the case without AsI, the recognition rate increases by 8.73%. In addition, using the ESN to refine the original physiological features significantly reduces the feature dimension and benefits emotion classification. This study can therefore help improve the performance of human–machine interface systems based on emotion recognition.
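
The clustering step summarized above (k-means over the coefficients of one sub-band, followed by the share of a sample's coefficients in each cluster) can be illustrated with a minimal sketch. It assumes that scalar wavelet packet coefficients for a single sub-band are already available. The function names `kmeans_1d` and `cluster_distribution`, the quantile-based initialization and the toy values are illustrative assumptions, not the authors' implementation.

```python
def kmeans_1d(values, k, iters=50):
    """Lloyd's k-means on scalar values; returns the centroids in ascending order.

    Assumption: centroids start at evenly spaced quantiles of the sorted
    values, which keeps the sketch deterministic.
    """
    ordered = sorted(values)
    n = len(ordered)
    centroids = [ordered[(2 * i + 1) * n // (2 * k)] for i in range(k)]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for v in ordered:
            nearest = min(range(k), key=lambda j: abs(v - centroids[j]))
            clusters[nearest].append(v)
        # An empty cluster keeps its previous centroid.
        updated = [
            sum(c) / len(c) if c else centroids[j]
            for j, c in enumerate(clusters)
        ]
        if updated == centroids:
            break
        centroids = updated
    return sorted(centroids)


def cluster_distribution(sample, centroids):
    """Fraction of one sample's coefficients assigned to each centroid."""
    counts = [0] * len(centroids)
    for v in sample:
        nearest = min(range(len(centroids)), key=lambda j: abs(v - centroids[j]))
        counts[nearest] += 1
    return [c / len(sample) for c in counts]


# Toy coefficients pooled over all samples of one sub-band (hypothetical values).
pooled = [-5.1, -4.9, -5.0, 0.1, -0.1, 0.0, 5.0, 4.9, 5.1]
centroids = kmeans_1d(pooled, k=3)

# Feature vector for one sample: the share of its coefficients in each cluster.
features = cluster_distribution([-5.0, -4.8, 0.2, 5.2], centroids)
```

Under this reading, each sample yields one probability vector of length k per sub-band, and the vectors for all sub-bands would be concatenated before being passed to the ESN.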

Change history

15 November 2018

The authors agree that the AsI model was first developed by Panagiotis Petrantonakis, as described in references [11] and [18].

References

Vilar P (2014) Designing the user interface: strategies for effective human–computer interaction (5th edition). Inf Process Manage 61(5):1073–1074

Andreasson R, Alenljung B, Billing E et al (2018) Affective touch in human–robot interaction: conveying emotion to the nao robot. Int J Social Robot 3:1–19

Zhang Z, Tanaka E (2017) Affective computing using clustering method for mapping human’s emotion. In: IEEE international conference on advanced intelligent mechatronics. IEEE, pp 235–240

Fragopanagos N, Taylor JG (2005) Emotion recognition in human–computer interaction. Neural Netw 18(4):389

Hu M, Zheng Y, Ren F et al (2015) Age estimation and gender classification of facial images based on Local Directional Pattern. In: IEEE international conference on cloud computing and intelligence systems. IEEE, pp 103–107

Ren F, Huang Z (2016) Automatic facial expression learning method based on humanoid robot XIN-REN. IEEE Trans Hum Mach Syst 46(6):810–821

Wang K, An N, Li BN et al (2017) Speech emotion recognition using Fourier parameters. IEEE Trans Affect Comput 6(1):69–75

Piana S, Staglianò A, Odone F, Camurri A (2016) Adaptive body gesture representation for automatic emotion recognition. ACM Trans Interact Intell Syst 6(1):6

Ren F (2009) Affective information processing and recognizing human emotion. Elsevier Science Publishers B.V., Amsterdam

Ren F, Wang L (2017) Sentiment analysis of text based on three-way decisions. J Intell Fuzzy Syst 33(1):245–254

Petrantonakis PC, Hadjileontiadis LJ (2012) Adaptive emotional information retrieval from EEG signals in the time–frequency domain. IEEE Trans Signal Process 60(5):2604–2616

Yoon HJ, Chung SY (2013) EEG-based emotion estimation using Bayesian weighted-log-posterior function and perceptron convergence algorithm. Comput Biol Med 43(12):2230–2237

Davidson RJ, Ekman P, Saron CD et al (1990) Approach-withdrawal and cerebral asymmetry: emotional expression and brain physiology: I. J Pers Soc Psychol 58(2):330

Davidson RJ, Schwartz GE, Saron C, Bennett J, Goleman DJ (1979) Frontal versus parietal EEG asymmetry during positive and negative affect. Psychophysiology 16:202–203

Zheng WL, Lu BL (2015) Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. IEEE Trans Auton Ment Dev 7(3):162–175

Daimi SN, Saha G (2014) Classification of emotions induced by music videos and correlation with participants’ rating. Expert Syst Appl 41(13):6057–6065

Sakata O, Shiina T, Saito Y (2002) Multidimensional directed information and its application. Electron Commun Jpn 85(4):45–55

Petrantonakis PC, Hadjileontiadis LJ (2011) A novel emotion elicitation index using frontal brain asymmetry for enhanced EEG-based emotion recognition. IEEE Trans Inf Technol Biomed 15(5):737–746

Sakata O, Shiina T, Satake T et al (2006) Short-time multidimensional directed coherence for EEG analysis. IEEJ Trans Electr Electron Eng 1(4):408–416

Deshpande G, Laconte S, Peltier S et al (2006) Directed transfer function analysis of fMRI data to investigate network dynamics. In: International conference of the IEEE engineering in medicine and biology society, p 671

Xu X, Ye Z, Peng J (2007) Method of direction-of-arrival estimation for uncorrelated, partially correlated and coherent sources. Microw Antennas Propag IET 1(4):949–954

Roebroeck A, Formisano E, Goebel R (2005) Mapping directed influence over the brain using Granger causality and fMRI. Neuroimage 25(1):230–242

Jaeger H (2001) The “echo state” approach to analysing and training recurrent neural networks. Technical report GMD Report 148. German National Research Center for Information Technology

Jaeger H (2002) Tutorial on training recurrent neural networks, covering BPPT, RTRL, EKF and the echo state network approach. GMD-Forschungszentrum Informationstechnik, Bonn

Han M, Xu M (2018) Subspace echo state network for multivariate time series prediction. IEEE Trans Neural Netw Learn Syst 29(1):238–244

Koprinkova Hristova P, Tontchev N (2012) Echo state networks for multi-dimensional data clustering. In: International conference on artificial neural networks and machine learning. Springer-Verlag, pp 571–578

Fourati R, Ammar B, Aouiti C et al (2017) Optimized echo state network with intrinsic plasticity for EEG-based emotion recognition. In: International conference on neural information processing. Springer, Cham, pp 718–727

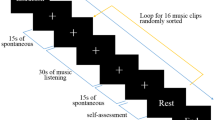

Koelstra S, Muhl C, Soleymani M et al (2012) DEAP: a database for emotion analysis; using physiological signals. IEEE Trans Affect Comput 3(1):18–31

Kanungo T, Mount DM, Netanyahu NS et al (2002) An efficient k-means clustering algorithm: analysis and implementation. IEEE Trans Pattern Anal Mach Intell 24(7):881–892

Hartigan JA (1979) A k-means clustering algorithm. Appl Stat 28(1):100–108

Huang Z (1998) Extensions to the k-means algorithm for clustering large data sets with categorical values. Data Min Knowl Disc 2(3):283–304

Zheng WL, Zhu JY, Lu BL et al (2016) Identifying stable patterns over time for emotion recognition from EEG. IEEE Trans Affect Comput 99(1949):1. https://doi.org/10.1109/TAFFC.2017.2712143

Lin YP, Wang CH, Jung TP et al (2010) EEG-based emotion recognition in music listening. IEEE Trans Biomed Eng 57(7):1798–1806

Jenke R, Peer A, Buss M (2017) Feature extraction and selection for emotion recognition from EEG. IEEE Trans Affect Comput 5(3):327–339

Yin Z, Wang Y, Liu L et al (2017) Cross-subject EEG feature selection for emotion recognition using transfer recursive feature elimination. Front Neurorobot 11:19

Schrauwen B, Wardermann M, Verstraeten D et al (2008) Improving reservoirs using intrinsic plasticity. Neurocomputing 71(7–9):1159–1171

Skowronski MD, Harris JG (2007) Special issue: automatic speech recognition using a predictive echo state network classifier. Elsevier Science Ltd, Amsterdam

Chen J, Hu B, Wang Y et al (2017) A three-stage decision framework for multi-subject emotion recognition using physiological signals. In: IEEE international conference on bioinformatics and biomedicine. IEEE, pp 470–474

Acknowledgements

This research was partially supported by the National Natural Science Foundation of China (Grant No. 61432004) and the NSFC-Shenzhen Joint Foundation (Key Project) (Grant No. U1613217).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Cite this article

Ren, F., Dong, Y. & Wang, W. Emotion recognition based on physiological signals using brain asymmetry index and echo state network. Neural Comput & Applic 31, 4491–4501 (2019). https://doi.org/10.1007/s00521-018-3664-1

DOI: https://doi.org/10.1007/s00521-018-3664-1