Abstract

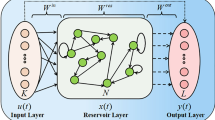

The echo state network (ESN) is a recurrent neural network with a large, randomly generated reservoir and a trainable output layer, and it has been applied to time series prediction. However, because the output weights are computed by simple linear regression, training an ESN may suffer from an ill-posed problem. To tackle this issue, a sparse Bayesian ESN (SBESN) is proposed. The SBESN estimates the probability distribution of the outputs and trains the network through sparse Bayesian learning, in which an independent regularization prior is imposed on each weight rather than a single prior being shared by all weights. Simulation results illustrate that the SBESN model is insensitive to the reservoir size and outperforms the other models it is compared with.
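To make the contrast between one shared prior and per-weight priors concrete, here is a minimal NumPy sketch, assuming a standard tanh reservoir and the re-estimation rules of Tipping's relevance vector machine for the readout. It collects reservoir states, then fits the output weights two ways: a ridge solution with one shared prior (a single λ) and a sparse Bayesian solution with an independent precision α_i per weight, where any weight whose α_i exceeds a cutoff is pruned to exactly zero. The function names (`make_reservoir`, `collect_states`, `ridge_readout`, `sparse_bayes_readout`), the reservoir settings, the pruning threshold and the noisy-sine task are hypothetical illustrative choices; this is not the authors' SBESN implementation.

```python
import numpy as np


def make_reservoir(n_res, n_in, spectral_radius=0.9, density=0.1, seed=0):
    """Random sparse reservoir rescaled to a target spectral radius (assumed settings)."""
    rng = np.random.default_rng(seed)
    W = rng.uniform(-0.5, 0.5, (n_res, n_res))
    W *= rng.random((n_res, n_res)) < density  # keep roughly `density` of the links
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))
    W_in = rng.uniform(-0.5, 0.5, (n_res, n_in))
    return W_in, W


def collect_states(u, W_in, W, washout):
    """Drive x(t) = tanh(W_in u(t) + W x(t-1)) and keep the states after the washout."""
    x = np.zeros(W.shape[0])
    states = []
    for t in range(len(u)):
        x = np.tanh(W_in @ u[t] + W @ x)
        if t >= washout:
            states.append(x)
    return np.array(states)


def ridge_readout(Phi, t, lam=1e-6):
    """Baseline: one shared Gaussian prior (a single lambda) for all output weights."""
    M = Phi.shape[1]
    return np.linalg.solve(Phi.T @ Phi + lam * np.eye(M), Phi.T @ t)


def sparse_bayes_readout(Phi, t, n_iter=200, alpha_max=1e9, tol=1e-6):
    """Readout with an independent prior precision alpha_i per weight (RVM-style updates)."""
    N, M = Phi.shape
    alpha = np.ones(M)               # one prior precision per output weight
    beta = 1.0 / np.var(t)           # noise precision, initial guess
    active = np.ones(M, dtype=bool)  # weights that have not been pruned
    for _ in range(n_iter):
        idx = np.flatnonzero(active)
        P = Phi[:, idx]
        Sigma = np.linalg.inv(beta * P.T @ P + np.diag(alpha[idx]))
        m = beta * Sigma @ P.T @ t   # posterior mean of the active weights
        # gamma_i measures how well-determined weight i is by the data.
        gamma = np.clip(1.0 - alpha[idx] * np.diag(Sigma), 1e-12, 1.0)
        new_alpha = gamma / np.maximum(m ** 2, 1e-30)
        resid = t - P @ m
        beta = (N - gamma.sum()) / (resid @ resid)
        delta = np.max(np.abs(np.log(new_alpha) - np.log(alpha[idx])))
        alpha[idx] = new_alpha
        active = alpha < alpha_max   # prune weights whose prior pins them at zero
        if delta < tol:
            break
    # Final posterior mean, with pruned weights fixed at exactly zero.
    idx = np.flatnonzero(active)
    P = Phi[:, idx]
    Sigma = np.linalg.inv(beta * P.T @ P + np.diag(alpha[idx]))
    w = np.zeros(M)
    w[idx] = beta * Sigma @ P.T @ t
    return w, alpha, beta


# Toy one-step-ahead task on a noisy sine (assumed example, not the paper's benchmarks).
rng = np.random.default_rng(1)
series = np.sin(0.2 * np.arange(601)) + 0.05 * rng.standard_normal(601)
u, y = series[:-1, None], series[1:]
washout = 100
W_in, W = make_reservoir(n_res=100, n_in=1)
X = collect_states(u, W_in, W, washout)
Phi = np.hstack([X, np.ones((len(X), 1))])  # reservoir states plus a bias column
target = y[washout:]

w_sb, alpha, beta = sparse_bayes_readout(Phi, target)
w_ridge = ridge_readout(Phi, target)
mse_sb = np.mean((Phi @ w_sb - target) ** 2)
mse_ridge = np.mean((Phi @ w_ridge - target) ** 2)
print(f"sparse Bayesian: {np.count_nonzero(w_sb)}/{w_sb.size} nonzero weights, train MSE {mse_sb:.4g}")
print(f"ridge: train MSE {mse_ridge:.4g}, weight norm {np.linalg.norm(w_ridge):.3g}")
```

Because each α_i is re-estimated separately, redundant reservoir units can be switched off individually instead of all weights being shrunk by the same amount; this is one plausible reading of why a sparse Bayesian readout could be less sensitive to reservoir size, but the sketch by itself does not reproduce the paper's results.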

Acknowledgements

This work was supported by the National Key Research and Development Program of China under Grant 2020YFC1511702 and by the National Natural Science Foundation of China under Grant 61771059.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Wang, L., Su, Z., Qiao, J. et al. Design of sparse Bayesian echo state network for time series prediction. Neural Comput & Applic 33, 7089–7102 (2021). https://doi.org/10.1007/s00521-020-05477-3

DOI: https://doi.org/10.1007/s00521-020-05477-3