Abstract

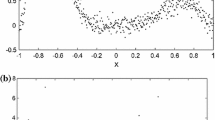

When the training data required by a data-driven model are insufficient or cannot completely cover the sample space, incorporating prior knowledge and a prior knowledge compensation module into support vector regression (PESVR) can significantly improve the accuracy and generalization performance of the model. However, the resulting optimization problem is very complex, which leads to long training times, and the model must be retrained on all the data from scratch every time the training set is modified. Compared with standard support vector regression (SVR), PESVR has multiple input datasets and a more complex objective function and constraints, including several coupling constraints, so existing methods cannot effectively perform accurate on-line learning of this nested (i.e., fully coupled) model. In this paper, an accurate on-line support vector regression incorporating prior knowledge and error compensation is proposed. Under the constraint of the Karush–Kuhn–Tucker (KKT) conditions, the model parameters are updated recursively through sequential adiabatic incremental adjustments. The error compensation model and the prediction model are updated simultaneously whenever a real measured sample or a prior knowledge sample is added to or removed from the training set, and the updated model is identical to the model produced by the batch learning algorithm. Experiments on an artificial dataset and several benchmark datasets show encouraging results for on-line learning and prediction.
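The core of such a recursive KKT-preserving update can be illustrated, under simplifying assumptions, for the standard ε-SVR case of Ma et al. (2003) rather than for the coupled PESVR problem of this paper. The minimal sketch below assumes \(h_i = f(x_i) - y_i\), \(\theta_i = \alpha_i - \alpha_i^*\) and an RBF kernel matrix \(Q\); the functions `rbf_kernel` and `margin_sensitivities`, the kernel width and the chosen margin set are all hypothetical. It computes how much \(b\) and the margin-set coefficients must change per unit change of a new sample's coefficient \(\theta_c\) so that every margin sample stays on the ε-tube boundary and the equality constraint \(\sum_i \theta_i = 0\) keeps holding.

```python
import numpy as np


def rbf_kernel(X1, X2, gamma=1.0):
    """Gaussian kernel matrix K[i, j] = exp(-gamma * ||X1[i] - X2[j]||^2)."""
    sq = (
        np.sum(X1 ** 2, axis=1)[:, None]
        + np.sum(X2 ** 2, axis=1)[None, :]
        - 2.0 * X1 @ X2.T
    )
    return np.exp(-gamma * np.maximum(sq, 0.0))


def margin_sensitivities(Q, margin_idx, c):
    """Rates of change of b, theta_S and h with respect to theta_c.

    Standard epsilon-SVR incremental step (assumption, after Ma et al. 2003):
    keeping h_j fixed for every margin sample j and sum(theta) = 0 while
    theta_c changes gives [db, dtheta_S] = beta * dtheta_c, where
        [[0, 1^T], [1, Q_SS]] @ beta = -[1, Q_Sc].
    gamma[i] = dh_i / dtheta_c for every sample i (zero on the margin set).
    """
    S = np.asarray(margin_idx)
    m = len(S)
    A = np.zeros((m + 1, m + 1))
    A[0, 1:] = 1.0                   # equality constraint sum(theta) = 0
    A[1:, 0] = 1.0                   # bias term b
    A[1:, 1:] = Q[np.ix_(S, S)]      # kernel block of the margin set
    rhs = np.concatenate(([1.0], Q[S, c]))
    beta = -np.linalg.solve(A, rhs)  # beta[0] -> b, beta[1:] -> theta_S
    gamma = Q[:, c] + Q[:, S] @ beta[1:] + beta[0]
    return beta, gamma


# Hypothetical toy data: 6 samples, margin set {0, 1, 2}, new sample c = 5.
rng = np.random.default_rng(0)
X = rng.normal(size=(6, 2))
Q = rbf_kernel(X, X, gamma=0.5)
S = [0, 1, 2]
beta, gamma = margin_sensitivities(Q, S, c=5)
print(np.round(gamma, 6))  # entries 0-2 are zero up to rounding
```

Multiplying `beta` and `gamma` by an actual step \(\Delta\theta_c\) gives the changes in \(b\), in \(\theta_S\) and in every \(h_i\). An exact on-line learner of this kind takes the largest step that leaves every sample in its current set, moves the sample that reaches a boundary to its new set, and repeats. The PESVR update must additionally respect its coupling constraints, which this sketch does not model.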

References

LIBSVM data: Regression. https://www.csie.ntu.edu.tw/~cjlin/libsvmtools/datasets/regression.html

Alcala-Fdez J, Fernández A, Luengo J, Derrac J, García S (2011) KEEL data-mining software tool: Data set repository, integration of algorithms and experimental analysis framework. Journal of Multiple-Valued Logic and Soft Computing 17:255–287

Balasundaram S, Gupta D (2016) Knowledge-based extreme learning machines. Neural Computing and Applications 27(6):1629–1641

Gu B, Wang J, Chen H (2008) On-line off-line ranking support vector machine and analysis. In: 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence), pp. 1364–1369

Bloch G, Lauer F, Colin G, Chamaillard Y (2008) Support vector regression from simulation data and few experimental samples. Information Sciences 178(20):3813–3827

Boyd S, Vandenberghe L (2004) Convex Optimization. Cambridge University Press, New York, NY, USA

Cauwenberghs G, Poggio T (2000) Incremental and decremental support vector machine learning. In: Proceedings of the 13th International Conference on Neural Information Processing Systems (NIPS), pp. 388–394. MIT Press, Cambridge, MA, USA

Chaharmiri R, Arezoodar AF (2016) The effect of sequential coupling on radial displacement accuracy in electromagnetic inside-bead forming: simulation and experimental analysis using Maxwell and ABAQUS software. Journal of Mechanical Science and Technology 30(5):2005–2010

Chang C, Lin C (2002) Training ν-support vector regression: Theory and algorithms. Neural Computation 14(8):1959–1977

Chen Y, Xiong J, Xu W, Zuo J (2019) A novel online incremental and decremental learning algorithm based on variable support vector machine. Cluster Computing 22(3):7435–7445

Crammer K, Dekel O, Keshet J, Shalev-Shwartz S, Singer Y (2006) Online passive-aggressive algorithms. Journal of Machine Learning Research 7:551–585

Dai C, Pi D, Fang Z, Peng H (2014) A Novel Long-Term Prediction Model for Hemispherical Resonator Gyroscope’s Drift Data. IEEE Sensors Journal 14(6):1886–1897

Diehl CP, Cauwenberghs G (2003) SVM incremental learning, adaptation and optimization. In: Proceedings of the International Joint Conference on Neural Networks, 2003, vol. 4, pp. 2685–2690

Dua D, Graff C (2017) UCI machine learning repository. http://archive.ics.uci.edu/ml

Gretton A, Desobry F (2003) On-line one-class support vector machines. An application to signal segmentation. In: 2003 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP ’03), Proceedings, vol. 2, pp. II–709

Gu B, Sheng VS (2013) Feasibility and Finite Convergence Analysis for Accurate On-Line \(\nu\)-Support Vector Machine. IEEE Transactions on Neural Networks and Learning Systems 24(8):1304–1315

Gu B, Sheng VS, Wang Z, Ho D, Osman S, Li S (2015) Incremental learning for \(\nu\)-Support Vector Regression. Neural Networks 67:140–150

Gu B, Wang JD, Yu YC, Zheng GS, Huang YF, Xu T (2012) Accurate on-line \(\nu\)-support vector learning. Neural Networks 27:51–59

Hastie T, Rosset S, Tibshirani R, Zhu J (2004) The entire regularization path for the support vector machine. Journal of Machine Learning Research 5:1391–1415

Karasuyama M, Takeuchi I (2010) Multiple incremental decremental learning of support vector machines. IEEE Transactions on Neural Networks 21(7):1048–1059

Karush W (2014) Minima of Functions of Several Variables with Inequalities as Side Conditions, pp. 217–245. Springer Basel, Basel

Laskov P, Gehl C, Krueger S, Müller KR (2006) Incremental support vector learning: Analysis, implementation and applications. Journal of Machine Learning Research (JMLR) 7

Laskov P, Gehl C, Krüger S, Müller KR (2006) Incremental support vector learning: Analysis, implementation and applications. Journal of Machine Learning Research 7:1909–1936

Lauer F, Bloch G (2008) Incorporating prior knowledge in support vector machines for classification: A review. Neurocomputing 71(7):1578–1594

Lauer F, Bloch G (2008) Incorporating prior knowledge in support vector regression. Machine Learning 70(1):89–118

Liang Z, Li Y (2009) Incremental support vector machine learning in the primal and applications. Neurocomputing 72(10):2249–2258

Liao Z, Couillet R (2019) A large dimensional analysis of least squares support vector machines. IEEE Transactions on Signal Processing 67(4):1065–1074

Lin LS, Li DC, Chen HY, Chiang YC (2018) An attribute extending method to improve learning performance for small datasets. Neurocomputing 286:75–87

Liu B, Wang L, Jin YH, Tang F, Huang DX (2005) Improved particle swarm optimization combined with chaos. Chaos Solitons & Fractals 25(5):1261–1271

Liu Z, Xu Y, Qiu C, Tan J (2019) A novel support vector regression algorithm incorporated with prior knowledge and error compensation for small datasets. Neural Computing and Applications

Ma J, Theiler J, Perkins S (2003) Accurate on-line support vector regression. Neural Computation 15(11):2683–2703

Mangasarian OL, Musicant DR (1999) Successive overrelaxation for support vector machines. IEEE Transactions on Neural Networks 10(5):1032–1037

Shapiai MI, Ibrahim Z, Khalid M (2012) Enhanced Weighted Kernel Regression with Prior Knowledge Using Robot Manipulator Problem as a Case Study. Procedia Engineering 41:82–89

Smola AJ, Schölkopf B (2004) A tutorial on support vector regression. Statistics and Computing 14(3):199–222

Zhao Y, Zhao H, Huo X, Yao Y (2017) Angular Rate Sensing with GyroWheel Using Genetic Algorithm Optimized Neural Networks. Sensors 17(7)

Zheng D, Wang J, Zhao Y (2006) Non-flat function estimation with a multi-scale support vector regression. Neurocomputing 70(1–3):420–429

Zhou J, Duan B, Huang J, Cao H (2014) Data-driven modeling and optimization for cavity filters using linear programming support vector regression. Neural Computing and Applications 24(7):1771–1783

Zhou J, Duan B, Huang J, Li N (2015) Incorporating prior knowledge and multi-kernel into linear programming support vector regression. Soft Computing 19(7):2047–2061

Zhou J, Liu D, Peng Y, Peng X (2012) Dynamic battery remaining useful life estimation: An on-line data-driven approach. In: 2012 IEEE International Instrumentation and Measurement Technology Conference Proceedings, pp. 2196–2199

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant 52075480, 51935009 and U1608256, in part by Key Research and Development Program of Zhejiang Province under Grant 2021C01008, and in part by the Natural Science Foundation of Zhejiang Province under Grant Y19E050078.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix 1: Proof of the partition results for the three datasets

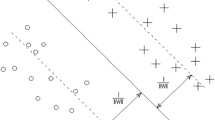

In this appendix, the KKT conditions are used to derive the partition results for the sets \(Set_r, Set_e, Set_p\). According to convex optimization theory [6], the solution of the minimization problem (5) is obtained from the following Lagrangian function:

where \({\widetilde{A}}_1, {\widetilde{A}}_2, {\widetilde{A}}_3\) denote the dual forms of the constraints in (5):

\(\chi ^{(*)}\), \(\upsilon ^{(*)}\), \(\zeta ^{(*)}\), \(\iota ^{(*)}\), \(\varsigma ^{(*)}\), \(\tau ^{(*)}\), \(\rho\) and \(\kappa\) are Lagrange multipliers. Then, by the KKT theorem [21], the following KKT conditions are obtained:

Note that \(\rho\) and \(\kappa\) are equal to the optimal \(b\) and \(b_e\) in (4), respectively [9]. According to (9)–(11) and (57), the partial derivatives of the Lagrangian function \(L\) can be written as:

Combining (8) and (58)–(61) yields the partition results (12)–(14) for the datasets \(Set_r\), \(Set_e\) and \(Set_p\).
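The kind of set membership that such KKT conditions induce can be sketched for the standard ε-SVR case. The hypothetical helper `partition_by_kkt` below assumes the notation of Ma et al. (2003), \(h_i = f(x_i) - y_i\) and \(\theta_i = \alpha_i - \alpha_i^*\), with box constraint \(C\) and tube width \(\varepsilon\). It applies a single set of conditions to one dataset and does not reproduce the coupled conditions (12)–(14) for \(Set_r\), \(Set_e\) and \(Set_p\).

```python
import numpy as np


def partition_by_kkt(h, theta, C, eps, tol=1e-8):
    """Split sample indices into margin (S), error (E) and remaining (R) sets.

    Standard epsilon-SVR KKT conditions (assumed notation of Ma et al. 2003):
      R: theta_i = 0                 and |h_i| <= eps
      S: 0 < |theta_i| < C           and |h_i| == eps
      E: |theta_i| == C              and |h_i| >= eps
    with sign(theta_i) = -sign(h_i) on S and E.
    Raises ValueError for a sample that satisfies none of them.
    """
    S, E, R = [], [], []
    for i, (hi, ti) in enumerate(zip(h, theta)):
        opposite = np.sign(ti) == -np.sign(hi)
        if abs(ti) <= tol and abs(hi) <= eps + tol:
            R.append(i)
        elif tol < abs(ti) < C - tol and abs(abs(hi) - eps) <= tol and opposite:
            S.append(i)
        elif abs(abs(ti) - C) <= tol and abs(hi) >= eps - tol and opposite:
            E.append(i)
        else:
            raise ValueError(f"sample {i} violates the KKT conditions")
    return S, E, R


# Hypothetical residuals h_i and coefficients theta_i with C = 1, eps = 0.1.
h = np.array([0.05, 0.1, -0.1, 0.3, -0.25])
theta = np.array([0.0, -0.4, 0.7, -1.0, 1.0])
S, E, R = partition_by_kkt(h, theta, C=1.0, eps=0.1)
print(S, E, R)  # [1, 2] [3, 4] [0]
```

Because every sample must land in exactly one set, a `ValueError` signals that the current coefficients are not an optimal solution; an incremental learner relies on this check holding after each adjustment.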

About this article

Cite this article

Liu, Z., Xu, Y., Duan, G. et al. Accurate on-line support vector regression incorporated with compensated prior knowledge. Neural Comput & Applic 33, 9005–9023 (2021). https://doi.org/10.1007/s00521-020-05664-2

DOI: https://doi.org/10.1007/s00521-020-05664-2