Abstract

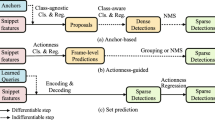

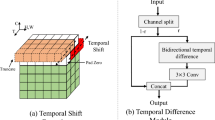

Video-based human action recognition remains a challenging task. Existing approaches have three main limitations: (1) Most works are restricted to modeling a single temporal scale. (2) Although a few methods consider multilevel motion features, they disregard the fact that different features usually contribute differently. (3) Most attention mechanisms attend only to important regions in frames and ignore the spatial structure information around them. To address these issues, a discriminative multi-focused and complementary temporal/spatial attention framework is presented, which consists of the multi-focused temporal attention network with multi-granularity loss (M2TEAN) and the complementary spatial attention network with co-classification loss (C2SPAN). First, M2TEAN not only focuses on discriminative multilevel motion features but also highlights the more discriminative features among them. Specifically, a short-term discriminative attention sub-network and a middle-term consistent attention sub-network are constructed to focus on discriminative short-term and middle-term features, respectively. A long-term evolutive attention sub-network is proposed to focus on long-term action evolution over time. A subsequent multi-focused temporal attention module then further highlights the more discriminative features. Second, C2SPAN captures discriminative regions in frames while mining the spatial structure information around them. Experiments show that the proposed methods achieve state-of-the-art results.
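To make the idea of weighting multilevel temporal features concrete, the following is a minimal sketch of softmax attention over three temporal-scale feature vectors. It is an illustration only and not the M2TEAN architecture: the function names, the dot-product scoring rule, and the `scoring_vector` standing in for learned parameters are all assumptions introduced here.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_temporal_features(
    features: Sequence[Sequence[float]],
    scoring_vector: Sequence[float],
) -> Tuple[List[float], List[float]]:
    """Weight each temporal-scale feature by an attention score and sum them.

    features: one vector per temporal scale (e.g. short, middle, long term).
    scoring_vector: hypothetical stand-in for learned parameters; each
        feature is scored by its dot product with this vector.
    Returns the attention weights and the fused feature vector.
    """
    scores = [
        sum(f_d * w_d for f_d, w_d in zip(feature, scoring_vector))
        for feature in features
    ]
    weights = softmax(scores)
    dim = len(features[0])
    fused = [
        sum(w * feature[d] for w, feature in zip(weights, features))
        for d in range(dim)
    ]
    return weights, fused


# Toy features, invented for illustration (not taken from any dataset).
short_term = [1.0, 0.0]
middle_term = [0.0, 1.0]
long_term = [1.0, 1.0]

weights, fused = fuse_temporal_features(
    [short_term, middle_term, long_term], scoring_vector=[1.0, 1.0]
)
```

In this toy setup the long-term feature receives the largest weight because it has the highest score, which mirrors the abstract's point that different temporal features contribute differently to the final representation.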

Acknowledgements

This work was supported in part by the Science and Technology Overall Innovation Project of Shaanxi Province (Grant 2013KTZB03-03-03) and the Shaanxi Province Key Project of Research and Development Plan (S2018-YF-ZDGY-0187).

Ethics declarations

Conflict of interest

All the authors of the manuscript declared that there are no potential conflicts of interest.

Human and animal rights

All the authors of the manuscript declared that this work involves no research on human participants and/or animals.

Informed consent

All the authors of the manuscript declared that there is no material that required informed consent.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Tong, M., Yan, K., Jin, L. et al. DM-CTSA: a discriminative multi-focused and complementary temporal/spatial attention framework for action recognition. Neural Comput & Applic 33, 9375–9389 (2021). https://doi.org/10.1007/s00521-021-05698-0

DOI: https://doi.org/10.1007/s00521-021-05698-0