Abstract

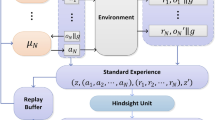

Reinforcement learning algorithms have made great progress in recent years by leveraging the power of deep neural networks. Despite this success, the performance of deep reinforcement learning algorithms depends largely on how they explore. Some algorithms explore by injecting external noise into the action space or by adopting a Gaussian policy. This paper presents a deep reinforcement learning algorithm that requires no external noise, called self-guided deep deterministic policy gradient with multi-actor (SDDPGM), which combines deep deterministic policy gradient with generative adversarial networks (GANs). It uses the GAN generator, trained on excellent experiences, to guide the agent's learning, and uses the discriminator to provide a subjective reward. Moreover, to make learning more stable, SDDPGM applies a multi-actor mechanism in which a distinct actor serves each successive temporal phase of an episode. Finally, experiments show that SDDPGM is a promising deep reinforcement learning method.
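The two mechanisms named in the abstract can be illustrated with a minimal Python sketch. The abstract does not give these details, so everything here is an assumption made for illustration rather than the authors' implementation: the function names `select_actor` and `shaped_reward`, the equal-length split of an episode into phases, and the additive combination of the environment reward with a weighted discriminator score are all hypothetical.

```python
import math


def select_actor(step, episode_length, num_actors):
    """Return the index of the actor assumed to act at this time step.

    Assumption: the episode is split into num_actors equal-length phases
    and actor i handles phase i. The paper may define phases differently.
    """
    if not 0 <= step < episode_length:
        raise ValueError("step must lie inside the episode")
    phase_length = math.ceil(episode_length / num_actors)
    # Clamp so the final (possibly shorter) phase maps to the last actor.
    return min(step // phase_length, num_actors - 1)


def shaped_reward(env_reward, discriminator_score, weight=0.1):
    """Combine the environment reward with a discriminator-based bonus.

    Assumption: the subjective reward is a weighted discriminator score
    added to the environment reward. The weight value is hypothetical.
    """
    return env_reward + weight * discriminator_score
```

For example, under these assumptions `select_actor(25, 100, 4)` picks the second of four actors, since step 25 falls in the second quarter of a 100-step episode.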

Ethics declarations

Conflict of interest

We declare that we do not have any commercial or associative interest that represents a conflict of interest in connection with the work submitted.

About this article

Cite this article

Chen, H., Liu, Q. & Zhong, S. Self-guided deep deterministic policy gradient with multi-actor. Neural Comput & Applic 33, 9723–9732 (2021). https://doi.org/10.1007/s00521-021-05738-9

DOI: https://doi.org/10.1007/s00521-021-05738-9