Abstract

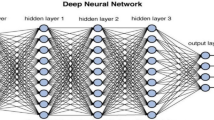

Deep neural networks (DNNs) have demonstrated superior performance in most learning tasks. However, a DNN typically contains a large number of parameters and operations, requiring a high-end processing platform for high-speed execution. To address this challenge, hardware-and-software co-design strategies, which involve joint DNN optimization and hardware implementation, can be applied. These strategies reduce the parameters and operations of the DNN so that it fits into a low-resource processing platform. In this paper, a DNN model is used to analyze data captured using an electrochemical method to determine the concentration of a neurotransmitter and the recording electrode. Next, a DNN miniaturization algorithm that combines pruning and compression is introduced to reduce the DNN resource utilization. Here, the DNN is made sparse by pruning a percentage of its weights. The Lempel–Ziv–Welch algorithm is then applied to compress the sparse DNN. Next, a DNN overlay is developed, combining the decompression of the DNN parameters and DNN inference, to allow the execution of the DNN on an FPGA on the PYNQ-Z2 board. This approach avoids the need for a complex quantization algorithm. It compresses the DNN by a factor of 6.18, leading to about a 50% reduction in the resource utilization on the FPGA.
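The prune-then-compress idea described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the abstract only states that a percentage of weights is pruned, so the magnitude-based selection rule, the float32 byte serialization, the 80% pruning fraction, and the function names `prune_by_magnitude`, `lzw_compress`, and `lzw_decompress` are all assumptions made for illustration. The Lempel–Ziv–Welch routines follow the textbook dictionary scheme with an unbounded code table.

```python
import random
import struct


def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest magnitude.

    Assumption: the selection rule is magnitude-based; the source only says
    that a percentage of the weights is pruned.
    """
    n_prune = int(len(weights) * fraction)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def lzw_compress(data):
    """Textbook LZW: emit one code per longest byte string already in the table."""
    table = {bytes([i]): i for i in range(256)}
    codes = []
    current = b""
    for value in data:
        candidate = current + bytes([value])
        if candidate in table:
            current = candidate
        else:
            codes.append(table[current])
            table[candidate] = len(table)  # next free code
            current = bytes([value])
    if current:
        codes.append(table[current])
    return codes


def lzw_decompress(codes):
    """Inverse of lzw_compress; rebuilds the same table while decoding."""
    if not codes:
        return b""
    table = {i: bytes([i]) for i in range(256)}
    previous = table[codes[0]]
    out = bytearray(previous)
    for code in codes[1:]:
        if code in table:
            entry = table[code]
        elif code == len(table):
            # The code refers to the entry that is about to be defined.
            entry = previous + previous[:1]
        else:
            raise ValueError(f"invalid LZW code: {code}")
        out += entry
        table[len(table)] = previous + entry[:1]
        previous = entry
    return bytes(out)


# Hypothetical layer: 2000 random weights, 80% of them pruned to zero.
random.seed(0)
weights = [random.gauss(0.0, 1.0) for _ in range(2000)]
sparse = prune_by_magnitude(weights, 0.8)

# Serialize as little-endian float32 so pruned weights become runs of zero bytes.
raw = struct.pack(f"<{len(sparse)}f", *sparse)
codes = lzw_compress(raw)

# Decompression recovers the serialized sparse weights exactly (lossless).
assert lzw_decompress(codes) == raw
print(f"{len(raw)} input bytes -> {len(codes)} LZW codes")
```

The printed code count is not a byte size: each code needs more than 8 bits once the table grows past 256 entries, so a practical implementation would also pack the codes at a fixed or growing bit width. On the FPGA side, the abstract places the decompression step inside the overlay next to inference; the sketch only shows that the round trip is lossless, which is why no quantization step is needed to recover the pruned weights.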

Acknowledgements

The authors would like to thank Dr. Yoonbae Oh and Dr. Kevin E. Bennet of Mayo Clinic for providing the FSCV dataset used.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Zhang, Z., Kouzani, A.Z. Resource-constrained FPGA/DNN co-design. Neural Comput & Applic 33, 14741–14751 (2021). https://doi.org/10.1007/s00521-021-06113-4

DOI: https://doi.org/10.1007/s00521-021-06113-4