Abstract

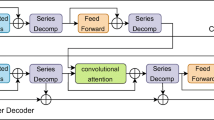

Univariate time series forecasting remains an important but challenging task. Given the wide application of temporal data, adaptive predictors are needed to learn historical behavior and forecast future states in various scenarios. In this paper, inspired by the human attention mechanism and the decomposition-and-reconstruction framework, we propose a convolution-based dual-stage attention (CDA) architecture combined with Long Short-Term Memory (LSTM) networks for univariate time series forecasting. Specifically, we first use a decomposition algorithm to generate derived variables from the target series. These input variables are then fed into the CDA-LSTM model for forecasting. In the Encoder–Decoder phase, the first stage combines an attention operation with the LSTM acting as an encoder, which adaptively learns the derived series relevant to the target. In the second stage, a temporal attention mechanism is integrated with the decoder to automatically select the relevant encoder hidden states across all time steps. A convolution phase runs in parallel with the Encoder–Decoder phase to reuse the historical information of the target and extract mutation features. The experimental results demonstrate that the proposed method can be adopted as an expert system for forecasting in multiple scenarios, and its superiority is verified by comparison with twelve baseline models on ten datasets. The practicability of different decomposition algorithms and convolution architectures is also discussed through extensive experiments. Overall, our work is valuable not only for adaptive deep learning modeling of time series problems, but also for univariate data processing and prediction.
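As a rough illustration of the second-stage temporal attention described above, the following minimal sketch weights a set of encoder hidden states by their relevance to a decoder state and sums them into a context vector. It is not the authors' implementation: the dot-product scoring, the function names, and the toy hidden states are all assumptions made for illustration, since the abstract does not specify the scoring function or the dimensions used in CDA-LSTM.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_attention(encoder_states, decoder_state):
    """Weight encoder hidden states by relevance to the decoder state.

    Assumption: plain dot-product scoring stands in for the learned
    scoring function of the paper, which the abstract does not specify.
    """
    # One relevance score per encoder time step.
    scores = [
        sum(h_k * d_k for h_k, d_k in zip(h, decoder_state))
        for h in encoder_states
    ]
    # Normalize scores into attention weights that sum to 1.
    weights = softmax(scores)
    # Context vector: attention-weighted sum of the encoder states.
    dim = len(decoder_state)
    context = [
        sum(w * h[k] for w, h in zip(weights, encoder_states))
        for k in range(dim)
    ]
    return weights, context


# Hypothetical encoder hidden states (one per time step, dimension 2).
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [1.0, 1.0]
weights, context = temporal_attention(encoder_states, decoder_state)
```

In this toy input the third time step aligns best with the decoder state, so it receives the largest weight. In a trained model the scores would come from learned parameters rather than a fixed dot product.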

Availability of data and code

Access to all the datasets involved in this paper has been provided on the relevant pages. For the code of this article, please contact Xiaoquan Chu (chuxq@cau.edu.cn).

Notes

Available at http://www.sidc.be/silso/home.

Available at https://fred.stlouisfed.org/series/STLFSI2.

Available at https://fred.stlouisfed.org/series/DEXCHUS.

Available at https://fred.stlouisfed.org/series/DEXUSEU.

Available at http://www.eia.doe.gov.

Available at https://www.aemo.com.au/

Available at http://www.3w3n.com.

Acknowledgements

This study was supported by the Chinese Agricultural Research System (CARS-29) and the open funds of the Key Laboratory of Viticulture and Enology, Ministry of Agriculture, PR China.

Funding

This study was funded by the Chinese Agricultural Research System (CARS-29) and the open funds of the Key Laboratory of Viticulture and Enology, Ministry of Agriculture, PR China.

Author information

Contributions

XC contributed to conceptualization, methodology, and writing manuscript. HJ and YL performed data curation and writing—review and editing. JF performed supervision, writing—review and editing. WM contributed to funding acquisition, supervision, and writing—review and editing.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Chu, X., Jin, H., Li, Y. et al. CDA-LSTM: an evolutionary convolution-based dual-attention LSTM for univariate time series prediction. Neural Comput & Applic 33, 16113–16137 (2021). https://doi.org/10.1007/s00521-021-06212-2

DOI: https://doi.org/10.1007/s00521-021-06212-2