Abstract

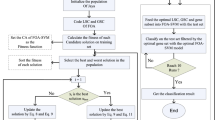

Microarray technology is one of the most important tools for collecting gene expression data, allowing researchers to investigate types of diseases and their origins. However, microarray data typically combine a small sample size with a very large number of genes and are often imbalanced, which makes classification models inefficient. This paper therefore presents a new hybrid solution based on a multi-filter and an adaptive chaotic multi-objective forest optimization algorithm (AC-MOFOA) to solve the gene selection problem and construct an ensemble classifier. In the proposed solution, a multi-filter model (i.e., an ensemble filter) is used as a preprocessing step to reduce the dataset's dimensions: five filter methods are combined to remove redundant and irrelevant genes, and their results are merged using a voting-based function. The results show that the proposed multi-filter is effective at reducing the size of the gene subset while selecting relevant genes. Next, AC-MOFOA is presented, based on the concepts of non-dominated sorting, crowding distance, chaos theory, and adaptive operators. As a wrapper method, AC-MOFOA aims to simultaneously reduce the dataset's dimensions, optimize the kernel extreme learning machine (KELM), and increase classification accuracy. Finally, an ensemble classifier model built from the AC-MOFOA results is presented to classify microarray data. The performance of the proposed algorithm was evaluated on nine public microarray datasets and compared with five hybrid multi-objective methods and three hybrid single-objective methods in terms of the number of selected genes, classification performance, execution time, time complexity, hypervolume indicator, and spacing metric.
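The abstract does not name the five filters or define the voting function exactly. The following is a minimal sketch of one plausible voting scheme, assuming each filter assigns a relevance score to every gene, each filter votes for its top-k genes, and a gene is kept when at least `min_votes` filters agree. The filter names, `k`, and `min_votes` are hypothetical placeholders, not the authors' settings.

```python
from collections import Counter
from typing import Dict, List, Sequence


def top_k_genes(scores: Sequence[float], k: int) -> List[int]:
    """Return indices of the k highest-scoring genes (ties go to the lower index)."""
    order = sorted(range(len(scores)), key=lambda g: (-scores[g], g))
    return order[:k]


def multi_filter_vote(
    filter_scores: Dict[str, Sequence[float]], k: int, min_votes: int
) -> List[int]:
    """Keep genes that at least `min_votes` filters place in their top k.

    `filter_scores` maps a (hypothetical) filter name to one relevance score
    per gene, where higher means more relevant. Returns sorted gene indices.
    """
    votes: Counter = Counter()
    for scores in filter_scores.values():
        # Each filter casts one vote for each gene in its top-k list.
        votes.update(top_k_genes(scores, k))
    return sorted(g for g, v in votes.items() if v >= min_votes)


if __name__ == "__main__":
    # Toy scores for 6 genes from 5 placeholder filters (not real data).
    toy = {
        "filter_a": [0.9, 0.1, 0.8, 0.2, 0.7, 0.0],
        "filter_b": [0.8, 0.2, 0.9, 0.1, 0.3, 0.6],
        "filter_c": [0.5, 0.9, 0.6, 0.1, 0.2, 0.0],
        "filter_d": [0.7, 0.1, 0.2, 0.9, 0.8, 0.0],
        "filter_e": [0.6, 0.0, 0.5, 0.1, 0.9, 0.2],
    }
    print(multi_filter_vote(toy, k=3, min_votes=3))  # → [0, 2, 4]
```

Raising `min_votes` makes the ensemble filter stricter; with five filters, `min_votes=3` corresponds to a simple majority vote.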
According to the results, the proposed hybrid method increased the accuracy of KELM on most datasets by reducing the dataset's dimensions, and achieved similar or superior performance compared with the other multi-objective methods. Furthermore, the proposed ensemble classifier model provided better classification accuracy and generalizability than conventional ensemble methods on seven of the nine microarray datasets. Moreover, compared with three state-of-the-art ensemble generation methods, the proposed ensemble model achieved better results on five of the nine datasets, indicating competitive performance.
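AC-MOFOA ranks candidate gene subsets using non-dominated sorting and crowding distance, the two mechanisms introduced in NSGA-II by Deb et al. (2002). The sketch below shows only those two mechanisms; it is not the authors' AC-MOFOA and omits the forest-optimization, chaotic, and adaptive operators. It assumes each candidate has two minimised objectives, here classification error and number of selected genes, which is an illustrative choice consistent with the stated goals of reducing dimensions and increasing accuracy.

```python
import math
from typing import Dict, List, Sequence, Tuple

Objectives = Tuple[float, ...]


def dominates(a: Objectives, b: Objectives) -> bool:
    """True if `a` Pareto-dominates `b` when every objective is minimised."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated_sort(points: Sequence[Objectives]) -> List[List[int]]:
    """NSGA-II fast non-dominated sorting; returns fronts as lists of indices."""
    n = len(points)
    dominated: List[List[int]] = [[] for _ in range(n)]  # solutions i dominates
    dom_count = [0] * n  # number of solutions that dominate i
    fronts: List[List[int]] = [[]]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            if dominates(points[i], points[j]):
                dominated[i].append(j)
            elif dominates(points[j], points[i]):
                dom_count[i] += 1
        if dom_count[i] == 0:
            fronts[0].append(i)
    k = 0
    while fronts[k]:
        next_front: List[int] = []
        for i in fronts[k]:
            for j in dominated[i]:
                dom_count[j] -= 1
                if dom_count[j] == 0:
                    next_front.append(j)
        fronts.append(next_front)
        k += 1
    return fronts[:-1]  # drop the trailing empty front


def crowding_distance(points: Sequence[Objectives], front: List[int]) -> Dict[int, float]:
    """Crowding distance of each solution in one front (larger = less crowded)."""
    if len(front) <= 2:
        return {i: math.inf for i in front}
    dist = {i: 0.0 for i in front}
    for m in range(len(points[front[0]])):
        ordered = sorted(front, key=lambda i: points[i][m])
        lo, hi = points[ordered[0]][m], points[ordered[-1]][m]
        dist[ordered[0]] = dist[ordered[-1]] = math.inf  # always keep boundary points
        if hi == lo:
            continue
        for r in range(1, len(ordered) - 1):
            gap = points[ordered[r + 1]][m] - points[ordered[r - 1]][m]
            dist[ordered[r]] += gap / (hi - lo)
    return dist


if __name__ == "__main__":
    # Toy (error rate, number of selected genes) pairs, both minimised.
    pts = [(0.10, 5), (0.05, 10), (0.20, 3), (0.10, 8), (0.25, 12)]
    fronts = non_dominated_sort(pts)
    print(fronts)  # → [[0, 1, 2], [3], [4]]
    print(crowding_distance(pts, fronts[0]))  # → {0: 2.0, 1: inf, 2: inf}
```

In NSGA-II-style selection, solutions are compared by front index first and, within the same front, by larger crowding distance, which favours a well-spread Pareto front.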



Ethics declarations

Conflict of interest

The authors declare no conflicts of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Electronic supplementary material is available for this article.


About this article

Cite this article

Nouri-Moghaddam, B., Ghazanfari, M. & Fathian, M. A novel bio-inspired hybrid multi-filter wrapper gene selection method with ensemble classifier for microarray data. Neural Comput & Applic 35, 11531–11561 (2023). https://doi.org/10.1007/s00521-021-06459-9


DOI: https://doi.org/10.1007/s00521-021-06459-9