Abstract

Knowledge is a formal way of understanding the world, and it underpins the human-level cognition and intelligence expected of next-generation artificial intelligence (AI). Relation Extraction (RE), an effective way to acquire this knowledge automatically, plays a vital role in Natural Language Processing (NLP). A large number of studies have addressed RE, and techniques based on deep neural networks (DNNs) have become the mainstream direction of this research. In particular, supervised and distant supervision methods based on DNNs are the most popular and reliable solutions for RE, and the evolution of their model structures and settings has shaped the task. Understanding the model structure and related settings gives researchers deep insight into RE, yet little research has examined them. Hence, this paper starts from these two points and analyzes the two mainstream research routes, supervised and distant supervision. We classify the related works according to the evolution of model structure to facilitate the analysis. Finally, we discuss some challenges of RE and give our conclusion.

Notes

The tool used in this work can be downloaded from http://nlp.stanford.edu/software/lex-parser.shtml.

References

Bollacker K, Evans C, Paritosh P, Sturge T, Taylor J (2008) Freebase: a collaboratively created graph database for structuring human knowledge. In: Proceedings of the 2008 ACM SIGMOD international conference on Management of data, 1247–1250

Auer S, Bizer C, Kobilarov G, Lehmann J, Cyganiak R, Ives Z (2007) Dbpedia: a nucleus for a web of open data. In: The semantic web, Springer, 722–735

Vrandečić D, Krötzsch M (2014) Wikidata: a free collaborative knowledgebase. Commun ACM 57(10):78–85

Suchanek FM, Kasneci G, Weikum G (2007) Yago: a core of semantic knowledge. In: Proceedings of the 16th international conference on World Wide Web, 697–706

Brin S (1998) Extracting patterns and relations from the world wide web. In: International workshop on the world wide web and databases, Springer, 172–183

Agichtein E, Gravano L (2000) Snowball: Extracting relations from large plain-text collections. In: Proceedings of the fifth ACM conference on digital libraries, ACM, 85–94

Liu C, Sun W, Chao W, Che W (2013) Convolution neural network for relation extraction. In: International conference on advanced data mining and applications, Springer, 231–242

Zhou P, Shi W, Tian J, Qi Z, Li B, Hao H, Xu B (2016) Attention-based bidirectional long short-term memory networks for relation classification. In: Proceedings of the 54th annual meeting of the association for computational linguistics (Volume 2: Short Papers), vol 2, 207–212

Cai R, Zhang X, Wang H (2016) Bidirectional recurrent convolutional neural network for relation classification. In: Proceedings of the 54th annual meeting of the association for computational linguistics (Volume 1: Long Papers), vol 1, 756–765

Nédellec C, Bossy R, Kim JD, Kim JJ, Ohta T, Pyysalo S, Zweigenbaum P (2013) Overview of bionlp shared task 2013. In: Proceedings of the BioNLP shared task 2013 workshop, 1–7

Vazquez M, Krallinger M, Leitner F, Valencia A (2011) Text mining for drugs and chemical compounds: methods, tools and applications. Mol Inf 30(6–7):506–519

Vela M, Declerck T (2009) Concept and relation extraction in the finance domain. In: Proceedings of the eight international conference on computational semantics, 346–350

Kumar S (2017) A survey of deep learning methods for relation extraction. arXiv preprint arXiv:170503645

Pawar S, Palshikar GK, Bhattacharyya P (2017) Relation extraction: a survey. arXiv preprint arXiv:171205191

Smirnova A, Cudré-Mauroux P (2018) Relation extraction using distant supervision: A survey. ACM Computing Surveys (CSUR) 51(5):1–35

Nayak T, Majumder N, Goyal P, Poria S (2021) Deep neural approaches to relation triplets extraction: a comprehensive survey. arXiv preprint arXiv:210316929

Aydar M, Bozal O, Ozbay F (2020) Neural relation extraction: a survey. arXiv e-prints pp arXiv–2007

Han X, Gao T, Lin Y, Peng H, Yang Y, Xiao C, Liu Z, Li P, Sun M, Zhou J (2020) More data, more relations, more context and more openness: a review and outlook for relation extraction. arXiv preprint arXiv:200403186

Liu K (2020) A survey on neural relation extraction. Science China Technological Sciences pp 1–19

Hendrickx I, Kim SN, Kozareva Z, Nakov P, Ó Séaghdha D, Padó S, Pennacchiotti M, Romano L, Szpakowicz S (2009) Semeval-2010 task 8: multi-way classification of semantic relations between pairs of nominals. In: proceedings of the workshop on semantic evaluations: recent achievements and future directions, Association for computational linguistics, 94–99

Han X, Zhu H, Yu P, Wang Z, Yao Y, Liu Z, Sun M (2018) Fewrel: A large-scale supervised few-shot relation classification dataset with state-of-the-art evaluation. arXiv preprint arXiv:181010147

Gao T, Han X, Zhu H, Liu Z, Li P, Sun M, Zhou J (2019) Fewrel 2.0: towards more challenging few-shot relation classification. arXiv preprint arXiv:191007124

Riedel S, Yao L, McCallum A (2010) Modeling relations and their mentions without labeled text. Joint European conference on machine learning and knowledge discovery in databases. Springer, Heidelberg, pp 148–163

Tang W, Hui B, Tian L, Luo G, He Z, Cai Z (2021) Learning disentangled user representation with multi-view information fusion on social networks. Inf Fusion 74:77–86

LeCun Y, Boser B, Denker JS, Henderson D, Howard RE, Hubbard W, Jackel LD (1989) Backpropagation applied to handwritten zip code recognition. Neural Comput 1(4):541–551

Elman JL (1991) Distributed representations, simple recurrent networks, and grammatical structure. Mach Learn 7(2–3):195–225

Socher R, Huval B, Manning CD, Ng AY (2012) Semantic compositionality through recursive matrix-vector spaces. In: Proceedings of the 2012 joint conference on empirical methods in natural language processing and computational natural language learning, Association for computational linguistics, 1201–1211

Scarselli F, Gori M, Tsoi AC, Hagenbuchner M, Monfardini G (2008) The graph neural network model. IEEE Trans Neural Networks 20(1):61–80

Zhang S, Zheng D, Hu X, Yang M (2015) Bidirectional long short-term memory networks for relation classification. In: Proceedings of the 29th Pacific Asia conference on language, information and computation, 73–78

Sundermeyer M, Schlüter R, Ney H (2012) Lstm neural networks for language modeling. In: Thirteenth annual conference of the international speech communication association

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9(8):1735–1780

Chung J, Gulcehre C, Cho K, Bengio Y (2014) Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv preprint arXiv:14123555

Mikolov T, Chen K, Corrado G, Dean J (2013) Efficient estimation of word representations in vector space. arXiv preprint arXiv:13013781

Turian J, Ratinov L, Bengio Y (2010) Word representations: a simple and general method for semi-supervised learning. In: Proceedings of the 48th annual meeting of the association for computational linguistics, Association for computational linguistics, 384–394

Pennington J, Socher R, Manning C (2014) Glove: Global vectors for word representation. In: Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP), 1532–1543

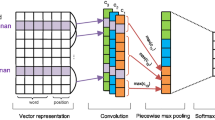

Zeng D, Liu K, Lai S, Zhou G, Zhao J, et al. (2014) Relation classification via convolutional deep neural network

Nguyen TH, Grishman R (2015) Relation extraction: perspective from convolutional neural networks. In: Proceedings of the 1st workshop on vector space modeling for natural language processing, 39–48

Santos CNd, Xiang B, Zhou B (2015) Classifying relations by ranking with convolutional neural networks. arXiv preprint arXiv:150406580

Wang L, Cao Z, De Melo G, Liu Z (2016) Relation classification via multi-level attention cnns. In: Proceedings of the 54th annual meeting of the association for computational linguistics (volume 1: long papers), 1298–1307

Zhang D, Wang D (2015) Relation classification via recurrent neural network. arXiv preprint arXiv:150801006

Qin P, Xu W, Guo J (2017) Designing an adaptive attention mechanism for relation classification. In: 2017 International joint conference on neural networks (IJCNN), IEEE, 4356–4362

Zhang C, Cui C, Gao S, Nie X, Xu W, Yang L, Xi X, Yin Y (2019) Multi-gram cnn-based self-attention model for relation classification. IEEE Access 7:5343–5357

Ren F, Zhou D, Liu Z, Li Y, Zhao R, Liu Y, Liang X (2018) Neural relation classification with text descriptions. In: Proceedings of the 27th international conference on computational linguistics, 1167–1177

Zhang L, Xiang F (2018) Relation classification via bilstm-cnn. In: International conference on data mining and big data, Springer, pp 373–382

Mooney RJ, Bunescu RC (2006) Subsequence kernels for relation extraction. In: Advances in neural information processing systems, pp 171–178

Xu Y, Mou L, Li G, Chen Y, Peng H, Jin Z (2015) Classifying relations via long short term memory networks along shortest dependency paths. In: proceedings of the 2015 conference on empirical methods in natural language processing, 1785–1794

Guo X, Zhang H, Yang H, Xu L, Ye Z (2019) A single attention-based combination of cnn and rnn for relation classification. IEEE Access 7:12467–12475

Jin L, Song L, Zhang Y, Xu K, Ma Wy YuD (2020) Relation extraction exploiting full dependency forests. Proc AAAI Conf Artif Intell 34:8034–8041

Hearst MA (1992) Automatic acquisition of hyponyms from large text corpora. In: Proceedings of the 14th conference on Computational linguistics-Volume 2, Association for computational linguistics, pp 539–545

Berland M, Charniak E (1999) Finding parts in very large corpora. In: Proceedings of the 37th annual meeting of the association for computational linguistics

Etzioni O, Cafarella M, Downey D, Kok S, Popescu AM, Shaked T, Soderland S, Weld DS, Yates A (2004) Web-scale information extraction in knowitall:(preliminary results). In: Proceedings of the 13th international conference on World Wide Web, ACM, 100–110

Yates A, Cafarella M, Banko M, Etzioni O, Broadhead M, Soderland S (2007) Textrunner: open information extraction on the web. In: Proceedings of Human Language Technologies: The Annual Conference of the North American Chapter of the Association for Computational Linguistics: Demonstrations, Association for Computational Linguistics, pp 25–26

Phi VT, Santoso J, Shimbo M, Matsumoto Y (2018) Ranking-based automatic seed selection and noise reduction for weakly supervised relation extraction. In: Proceedings of the 56th Annual Meeting of the association for computational linguistics (Volume 2: Short Papers), pp 89–95

Hasegawa T, Sekine S, Grishman R (2004) Discovering relations among named entities from large corpora. In: Proceedings of the 42nd annual meeting on association for computational linguistics, association for computational linguistics, p 415

Rink B, Harabagiu S (2010) Utd: Classifying semantic relations by combining lexical and semantic resources. In: Proceedings of the 5th International workshop on semantic evaluation, Association for computational linguistics, 256–259

Kambhatla N (2004) Combining lexical, syntactic, and semantic features with maximum entropy models for extracting relations. In: Proceedings of the ACL 2004 on interactive poster and demonstration sessions, Association for computational linguistics, p 22

Bunescu RC, Mooney RJ (2005) A shortest path dependency kernel for relation extraction. In: Proceedings of the conference on human language technology and empirical methods in natural language processing, Association for computational linguistics, 724–731

Mintz M, Bills S, Snow R, Jurafsky D (2009) Distant supervision for relation extraction without labeled data. In: Proceedings of the joint conference of the 47th annual meeting of the ACL and the 4th international joint conference on natural language processing of the AFNLP: Volume 2-Volume 2, Association for computational linguistics, 1003–1011

Manning C, Surdeanu M, Bauer J, Finkel J, Bethard S, McClosky D (2014) The stanford corenlp natural language processing toolkit. In: Proceedings of 52nd annual meeting of the association for computational linguistics: system demonstrations, 55–60

Xu K, Feng Y, Huang S, Zhao D (2015) Semantic relation classification via convolutional neural networks with simple negative sampling. arXiv preprint arXiv:150607650

Kim Y (2014) Convolutional neural networks for sentence classification. arXiv preprint arXiv:14085882

Kalchbrenner N, Grefenstette E, Blunsom P (2014) A convolutional neural network for modelling sentences. arXiv preprint arXiv:14042188

Xu Y, Jia R, Mou L, Li G, Chen Y, Lu Y, Jin Z (2016) Improved relation classification by deep recurrent neural networks with data augmentation. arXiv preprint arXiv:160103651

Xiao M, Liu C (2016) Semantic relation classification via hierarchical recurrent neural network with attention. In: Proceedings of COLING 2016, the 26th international conference on computational linguistics: Technical Papers, 1254–1263

Lee J, Seo S, Choi YS (2019) Semantic relation classification via bidirectional lstm networks with entity-aware attention using latent entity typing. arXiv preprint arXiv:190108163

Zheng S, Xu J, Zhou P, Bao H, Qi Z, Xu B (2016) A neural network framework for relation extraction: Learning entity semantic and relation pattern. Knowl-Based Syst 114:12–23

Wang H, Qin K, Lu G, Luo G, Liu G (2020) Direction-sensitive relation extraction using bi-sdp attention model. Knowl-Based Syst 21:105928105928

Zhang Z, Shu X, Yu B, Liu T, Zhao J, Li Q, Guo L (2020) Distilling knowledge from well-informed soft labels for neural relation extraction. In: AAAI, pp 9620–9627

Lyu S, Cheng J, Wu X, Cui L, Chen H, Miao C (2020) Auxiliary learning for relation extraction. IEEE Trans Emer Top Comp Intell

Zeng D, Liu K, Chen Y, Zhao J (2015) Distant supervision for relation extraction via piecewise convolutional neural networks. In: Proceedings of the 2015 conference on empirical methods in natural language processing, 1753–1762

Jiang X, Wang Q, Li P, Wang B (2016) Relation extraction with multi-instance multi-label convolutional neural networks. In: Proceedings of COLING 2016, the 26th international conference on computational linguistics: Technical papers, pp 1471–1480

Yang L, Ng TLJ, Mooney C, Dong R (2017) Multi-level attention-based neural networks for distant supervised relation extraction. In: AICS, pp 206–218

Lin Y, Liu Z, Sun M (2017) Neural relation extraction with multi-lingual attention. In: Proceedings of the 55th annual meeting of the association for computational linguistics (Volume 1: Long Papers), pp 34–43

Lin Y, Shen S, Liu Z, Luan H, Sun M (2016) Neural relation extraction with selective attention over instances. In: Proceedings of the 54th annual meeting of the association for computational linguistics (Volume 1: long papers), vol 1, 2124–2133

Banerjee S, Tsioutsiouliklis K (2018) Relation extraction using multi-encoder lstm network on a distant supervised dataset. In: 2018 IEEE 12th international conference on semantic computing (ICSC), IEEE, pp 235–238

Du J, Han J, Way A, Wan D (2018) Multi-level structured self-attentions for distantly supervised relation extraction. arXiv preprint arXiv:180900699

Ji G, Liu K, He S, Zhao J (2017) Distant supervision for relation extraction with sentence-level attention and entity descriptions. In: Thirty-first AAAI conference on artificial intelligence

Wang G, Zhang W, Wang R, Zhou Y, Chen X, Zhang W, Zhu H, Chen H (2018) Label-free distant supervision for relation extraction via knowledge graph embedding. In: Proceedings of the 2018 conference on empirical methods in natural language processing, 2246–2255

Vashishth S, Joshi R, Prayaga SS, Bhattacharyya C, Talukdar P (2018) Reside: Improving distantly-supervised neural relation extraction using side information. In: Proceedings of the 2018 conference on empirical methods in natural language processing, 1257–1266

Qin P, Xu W, Wang WY (2018a) Robust distant supervision relation extraction via deep reinforcement learning. arXiv preprint arXiv:180509927

Qin P, Xu W, Wang WY (2018b) Dsgan: Generative adversarial training for distant supervision relation extraction. arXiv preprint arXiv:180509929

Angeli G, Premkumar MJJ, Manning CD (2015) Leveraging linguistic structure for open domain information extraction. In: Proceedings of the 53rd annual meeting of the association for computational linguistics and the 7th international joint conference on natural language processing (Volume 1: Long Papers), vol 1, pp 344–354

Pavlick E, Rastogi P, Ganitkevitch J, Van Durme B, Callison-Burch C (2015) Ppdb 2.0: Better paraphrase ranking, fine-grained entailment relations, word embeddings, and style classification. In: proceedings of the 53rd annual meeting of the association for computational linguistics and the 7th international joint conference on natural language processing (Volume 2: Short Papers), vol 2, pp 425–430

Bethard S, Carpuat M, Cer D, Jurgens D, Nakov P, Zesch T (2016) Proceedings of the 10th international workshop on semantic evaluation (semeval-2016). In: Proceedings of the 10th international workshop on semantic evaluation (SemEval-2016)

Gábor K, Buscaldi D, Schumann AK, QasemiZadeh B, Zargayouna H, Charnois T (2018) Semeval-2018 task 7: Semantic relation extraction and classification in scientific papers. In: Proceedings of the 12th international workshop on semantic evaluation, pp 679–688

Zhang Y, Zhong V, Chen D, Angeli G, Manning CD (2017) Position-aware attention and supervised data improve slot filling. In: Proceedings of the 2017 conference on empirical methods in natural language processing (EMNLP 2017), 35–45, https://nlp.stanford.edu/pubs/zhang2017tacred.pdf

Segura Bedmar I, Martinez P, Sánchez Cisneros D (2011) The 1st ddiextraction-2011 challenge task: Extraction of drug-drug interactions from biomedical texts

Segura Bedmar I, Martínez P, Herrero Zazo M (2013) Semeval-2013 task 9: Extraction of drug-drug interactions from biomedical texts (ddiextraction 2013). Association for computational linguistics

Devlin J, Chang MW, Lee K, Toutanova K (2018) Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:181004805

Liu Y, Ott M, Goyal N, Du J, Joshi M, Chen D, Levy O, Lewis M, Zettlemoyer L, Stoyanov V (2019) Roberta: A robustly optimized bert pretraining approach. arXiv preprint arXiv:190711692

Di S, Shen Y, Chen L (2019) Relation extraction via domain-aware transfer learning. In: Proceedings of the 25th ACM SIGKDD international conference on knowledge discovery & Data Mining, 1348–1357

Sun C, Wu Y (2019) Distantly supervised entity relation extraction with adapted manual annotations. Proc AAAI Conf Artif Intell 33:7039–7046

Zhang N, Deng S, Sun Z, Chen J, Zhang W, Chen H (2019) Transfer learning for relation extraction via relation-gated adversarial learning. arXiv preprint arXiv:190808507

Zhang N, Deng S, Sun Z, Chen X, Zhang W, Chen H (2018) Attention-based capsule networks with dynamic routing for relation extraction. arXiv preprint arXiv:181211321

Sahu SK, Christopoulou F, Miwa M, Ananiadou S (2019) Inter-sentence relation extraction with document-level graph convolutional neural network. arXiv preprint arXiv:190604684

Guo Z, Zhang Y, Lu W (2019) Attention guided graph convolutional networks for relation extraction pp 241–251, 10.18653/v1/p19-1024, arxiv:1906.07510

Zhang Y, Qi P, Manning CD (2019) Graph convolution over pruned dependency trees improves relation extraction (2005):2205–2215, 10.18653/v1/d18-1244, arxiv:1809.10185

Wang H, Qin K, Lu G, Yin J, Zakari RY, Owusu JW (2021) Document-level relation extraction using evidence reasoning on rst-graph. Knowledge-based systems p 107274

Zhang N, Chen X, Xie X, Deng S, Tan C, Chen M, Huang F, Si L, Chen H (2021) Document-level relation extraction as semantic segmentation. arXiv preprint arXiv:210603618

Ye H, Chao W, Luo Z, Li Z (2016) Jointly extracting relations with class ties via effective deep ranking. arXiv preprint arXiv:161207602

Miwa M, Bansal M (2016) End-to-end relation extraction using lstms on sequences and tree structures. arXiv preprint arXiv:160100770

Li F, Zhang M, Fu G, Ji D (2017) A neural joint model for entity and relation extraction from biomedical text. BMC Bioinform 18(1):198

Zheng S, Wang F, Bao H, Hao Y, Zhou P, Xu B (2017) Joint extraction of entities and relations based on a novel tagging scheme. arXiv preprint arXiv:170605075

Xiao Y, Tan C, Fan Z, Xu Q, Zhu W (2020) Joint entity and relation extraction with a hybrid transformer and reinforcement learning based model. In: AAAI, pp 9314–9321

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. U19A2059), the Fundamental Research Funds for the Central Universities (No. ZYGX2020ZB034), and the Sichuan Science and Technology Program (Nos. 2019YFG0507 and 2020YFG0328). We sincerely thank Mr. Kombou Victor, Anto Leoba Jonathan and Wilson Jim Owusu for their helpful discussions.

Ethics declarations

Conflicts of interest

The authors declare that they have no conflict of interest regarding publication of this paper.

Cite this article

Wang, H., Qin, K., Zakari, R.Y. et al. Deep neural network-based relation extraction: an overview. Neural Comput & Applic 34, 4781–4801 (2022). https://doi.org/10.1007/s00521-021-06667-3

DOI: https://doi.org/10.1007/s00521-021-06667-3