Abstract

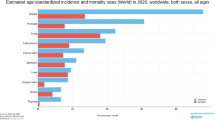

Breast cancer is one of the most commonly diagnosed cancers in the world. It has overtaken lung cancer and is considered a leading cause of mortality. The objectives of the current study are to (1) design an abstract CNN architecture named “HMB1-BUSI,” (2) propose a hybrid deep learning and genetic algorithm approach for learning and optimization named HMB-DLGAHA, (3) apply the transfer learning approach using pre-trained models, (4) study the effects of regularization, optimizers, dropout, and data augmentation through fourteen experiments, and (5) report state-of-the-art performance metrics compared with other related studies and approaches. The dataset is collected and unified from two different sources: (1) “Breast Ultrasound Images Dataset (Dataset BUSI)” and (2) “Breast Ultrasound Image.” The experiments use the weighted sum (WS) method to judge overall performance and generalization, combining the loss, accuracy, F1-score, precision, recall, specificity, and area under the curve (AUC) metrics with different ratios. The pre-trained CNN models used in the experiments are MobileNet, MobileNetV2, InceptionResNetV2, DenseNet121, DenseNet169, DenseNet201, ResNet50, ResNet101, ResNet152, ResNet50V2, ResNet101V2, ResNet152V2, Xception, and VGG19. Xception reported the highest WS metric, \(85.17\%\). Xception, ResNet152V2, and ResNet101V2 reported accuracy and F1-score values above \(90\%\). Xception, ResNet152V2, ResNet101V2, and DenseNet169 reported precision values above \(90\%\). Xception and ResNet152V2 reported recall values above \(90\%\). All models except ResNet152, ResNet50, and ResNet101 reported specificity values above \(90\%\), and all models except ResNet152, ResNet50, ResNet101, and VGG19 reported AUC values above \(90\%\).
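The weighted sum (WS) method described above folds several metrics into a single score. The abstract does not state the exact ratios or how the loss is combined with the other metrics, so the following Python sketch is only an illustration under stated assumptions. The metrics are computed from binary confusion counts, with one class treated as positive (a one-vs-rest view of the multi-class dataset). The loss is assumed to enter as 1 - loss, clipped at zero, so that higher is always better. The weights are hypothetical placeholders rather than the values used in the study, and the AUC and loss figures in the usage section are made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Binary confusion counts; one class is treated as "positive"."""
    tp: int
    fp: int
    tn: int
    fn: int


def _safe_div(num: float, den: float) -> float:
    # Return 0.0 instead of raising when a denominator is empty.
    return num / den if den else 0.0


def classification_metrics(c: Confusion) -> dict[str, float]:
    """Accuracy, precision, recall (sensitivity), specificity, and F1-score."""
    precision = _safe_div(c.tp, c.tp + c.fp)
    recall = _safe_div(c.tp, c.tp + c.fn)
    specificity = _safe_div(c.tn, c.tn + c.fp)
    accuracy = _safe_div(c.tp + c.tn, c.tp + c.tn + c.fp + c.fn)
    f1 = _safe_div(2 * precision * recall, precision + recall)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
    }


def weighted_sum(metrics: dict[str, float], weights: dict[str, float]) -> float:
    """Normalized weighted sum: sum(w_i * m_i) / sum(w_i).

    Every metric must already be oriented so that higher is better.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(w * metrics[name] for name, w in weights.items()) / total


if __name__ == "__main__":
    scores = classification_metrics(Confusion(tp=90, fp=5, tn=95, fn=10))
    # Hypothetical AUC and loss values; in practice these come from the trained model.
    scores["auc"] = 0.96
    loss = 0.2
    # Assumption: loss is minimized, so it enters the WS as 1 - loss (clipped at 0).
    scores["1-loss"] = max(0.0, 1.0 - loss)
    # Hypothetical equal weights; the study's actual ratios are not given here.
    weights = {name: 1.0 for name in scores}
    print(f"WS = {weighted_sum(scores, weights):.4f}")
```

In this sketch, dividing by the sum of the weights keeps the WS on the same 0 to 1 scale as the individual metrics, so it can be read as a percentage. Raising the weight of one metric, such as recall, shifts the ranking toward models that perform well on that metric.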

Data Availability

The datasets used, generated, or analyzed during the current study are available as follows: (A) datasets owned by the authors are available from the corresponding author on reasonable request; (B) for datasets not owned by the authors, supplementary information including their links and sizes is included in this published article.

Funding

No funding was received for this study.

Author information

Contributions

All the authors have participated in writing the manuscript and have revised the final version. All authors read and approved the final manuscript.

Ethics declarations

Conflict of Interest

No conflict of interest exists. We wish to confirm that there are no known conflicts of interest associated with this publication and there has been no significant financial support for this work that could have influenced its outcome.

Intellectual Property

We confirm that we have given due consideration to the protection of intellectual property associated with this work and that there are no impediments to publication, including the timing of publication, concerning intellectual property. In so doing, we confirm that we have followed the regulations of our institutions concerning intellectual property.

Research Ethics

We further confirm that any aspect of the work covered in this manuscript that has involved human patients, where applicable, has been conducted with the ethical approval of all relevant bodies and that such approvals are acknowledged within the manuscript. Written consent to publish potentially identifying information, such as details of the case and photographs, was obtained from the patient(s) or their legal guardian(s).

Authorship

We confirm that the manuscript has been read and approved by all named authors. We confirm that the order of authors listed in the manuscript has been approved by all named authors.

Compliance with Ethical Standards

The current study does not contain any studies with human participants and/or animals performed by any of the authors.

Consent to Participate

Informed consent was not applicable to the current study.

Consent for Publication

Not Applicable.

Contact with the Editorial Office

The “Corresponding Author” is the author declared on the title page; this author submitted the manuscript using his account in the editorial submission system. (A) We understand that the “Corresponding Author” is the sole contact for the editorial process (including the editorial submission system and direct communications with the office). He is responsible for communicating with the other authors about progress, submissions of revisions, and final approval of proofs. (B) We confirm that the email address given here is accessible by the “Corresponding Author,” is the address to which the “Corresponding Author”’s editorial submission system account is linked, and has been configured to accept email from the editorial office (email: hossam.m.balaha@mans.edu.eg).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Balaha, H.M., Saif, M., Tamer, A. et al. Hybrid deep learning and genetic algorithms approach (HMB-DLGAHA) for the early ultrasound diagnoses of breast cancer. Neural Comput & Applic 34, 8671–8695 (2022). https://doi.org/10.1007/s00521-021-06851-5

DOI: https://doi.org/10.1007/s00521-021-06851-5