Abstract

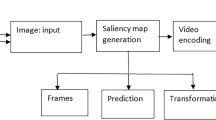

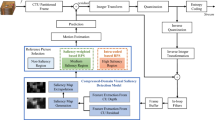

The rapid development of video technology and hardware has led to a large number of industrial video applications, such as video conferencing, video surveillance and live video streaming. Most of these applications must cope with limited network bandwidth while trying to maintain high video resolution. Perceptual video compression addresses this problem by using saliency information to reduce perceptual redundancy: it retains more information in salient regions and compresses non-salient regions as much as possible. To exploit multi-scale information in video saliency, this paper proposes an advanced video saliency detection model, SALDPC, built on a deformable pyramid convolution decoder and multi-scale temporal recurrence. To better guide video coding with saliency, a saliency-aware rate-distortion optimization algorithm, SRDO, is proposed on top of the HEVC video coding standard; it uses block-based saliency information to shift the rate-distortion balance and guide more reasonable bit allocation. Furthermore, a more flexible QP selection method, SAQP, is developed, which uses the saliency information of each CU to adaptively change that CU's QP and thereby ensure high quality in highly salient areas. Results are reported for three configurations: SRDO, SRDO + SQP, and SRDO + SAQP. Experimental results show that the proposed method substantially improves video quality while significantly reducing encoding time. Compared with HEVC, SRDO improves BD-EWPSNR by 0.703 dB and reduces the EWPSNR-based BD-Rate by 20.822%; SRDO + SAQP improves BD-EWPSNR by up to 1.217 dB and reduces the EWPSNR-based BD-Rate by up to 32.41%. In terms of compression time, the proposed method saves up to 29.06% compared with HEVC. These results show the superiority of the proposed method over state-of-the-art methods.
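The abstract does not give the SRDO or SAQP formulas, so the following is only a minimal sketch of the general idea, not the authors' algorithm. It assumes a hypothetical per-block weight `w` (the ratio of block saliency to frame-average saliency), divides the Lagrange multiplier by `w` in the usual cost J = D + λR, and derives a matching QP offset from the common HEVC reference-encoder relation λ ∝ 2^((QP − 12)/3), which gives ΔQP = −3·log2(w). The function names, the `strength` and `max_delta` parameters, and the clipping range are all illustrative assumptions.

```python
import math

QP_MIN, QP_MAX = 0, 51  # valid QP range for 8-bit HEVC


def saliency_weight(block_saliency, mean_saliency, strength=1.0, eps=1e-6):
    """Hypothetical weight: > 1 for blocks more salient than the frame average."""
    ratio = (block_saliency + eps) / (mean_saliency + eps)
    return ratio ** strength


def weighted_lambda(base_lambda, weight):
    """Assumed scaling: a salient block (weight > 1) gets a smaller lambda,
    so rate is penalized less and the block tends to receive more bits."""
    return base_lambda / weight


def rd_cost(distortion, rate_bits, lam):
    """Standard Lagrangian rate-distortion cost J = D + lambda * R."""
    return distortion + lam * rate_bits


def adaptive_qp(base_qp, weight, max_delta=6):
    """QP offset consistent with lambda ~ 2^((QP - 12) / 3):
    dividing lambda by `weight` corresponds to delta_qp = -3 * log2(weight).
    The offset is clipped to +/- max_delta (an illustrative choice)."""
    delta = -3.0 * math.log2(weight)
    delta = max(-max_delta, min(max_delta, delta))
    return int(max(QP_MIN, min(QP_MAX, round(base_qp + delta))))


# Two candidate coding modes for one block, as (distortion, rate in bits).
mode_a = (100.0, 50.0)  # cheaper in bits, higher distortion
mode_b = (60.0, 80.0)   # more bits, lower distortion


def best_mode(lam):
    """Return the name of the mode with the lower RD cost."""
    costs = {"A": rd_cost(*mode_a, lam), "B": rd_cost(*mode_b, lam)}
    return min(costs, key=costs.get)
```

With a base λ of 2.0, a block of average saliency (`w = 1`) selects the cheaper mode A, whereas a block with `w = 2` uses λ = 1.0 and selects the higher-quality mode B; `adaptive_qp(32, 2.0)` lowers the QP of the same block from 32 to 29. This mirrors the qualitative behaviour described above, in which salient regions keep more information and non-salient regions are compressed harder.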

Acknowledgements

This work is supported by the National Key Technologies R&D Program of China (Grant No. 2016YFB0500505), National Natural Science Foundation of China (NSFC) under grants No. 61375025, 61075011 and 60675018, and also the Scientific Research Foundation for the Returned Overseas Chinese Scholars from the State Education Ministry of China.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Zhu, S., Chang, Q. & Li, Q. Video saliency aware intelligent HD video compression with the improvement of visual quality and the reduction of coding complexity. Neural Comput & Applic 34, 7955–7974 (2022). https://doi.org/10.1007/s00521-022-06895-1

DOI: https://doi.org/10.1007/s00521-022-06895-1