Abstract

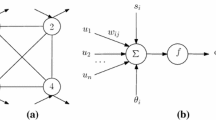

In recent years, studies on chaotic neural networks have increased, with the aim of constructing robust and flexible intelligent networks that resemble the human brain. To improve chaotic performance and reduce the time complexity of conventional chaotic neural networks, this paper presents an innovative chaotic architecture called the cascade chaotic neural network (CCNN). The cascade chaotic system is inspired by cascade structures in electronic circuits and is based on a combination of two or more one-dimensional chaotic maps. This combination yields a new chaotic map whose behavior is more complex than that of its component maps. Embedding this structure in the network neurons makes the CCNN better able to handle nonlinear problems. In the proposed model, a cascade chaotic activation function (CCAF) is introduced and applied. Because of its inherent chaotic features, such as increased variability, ergodicity, maximum entropy, and the absence of saturation zones, the CCAF is a promising way to solve or reduce the learning problems of conventional activation functions (AFs) without increasing complexity. Complexity does not increase because no parameters are added to the system in use: the chaos required by the neural network is generated by the Li oscillator, and its parameters are then held constant while the neural network is used. The chaotic behavior of the CCNN is investigated through bifurcation diagrams, and the prediction capability of the proposed model is verified on popular benchmark problems. Simulations and analysis demonstrate that, compared with leading chaotic models, the CCNN provides more accurate and robust results under various conditions.
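A cascade of one-dimensional maps feeds the output of one map into the next. The sketch below illustrates the idea with the logistic and sine maps, which are assumed seed maps chosen for illustration only, since the abstract does not name the maps used in the CCNN. It also estimates the Lyapunov exponent (the orbit average of log|f'(x)|, a standard indicator of chaos) and collects the (parameter, state) pairs from which a bifurcation diagram is drawn. This is not the authors' CCAF or network; every function name here is hypothetical.

```python
import math

# Assumed seed maps on [0, 1]; chosen for illustration, not taken from the paper.
def logistic(x: float, r: float) -> float:
    """Logistic map: x -> r*x*(1-x), r in (0, 4]."""
    return r * x * (1.0 - x)

def sine(x: float, a: float) -> float:
    """Sine map: x -> (a/4)*sin(pi*x), a in (0, 4]."""
    return (a / 4.0) * math.sin(math.pi * x)

def cascade(x: float, r: float, a: float) -> float:
    """Hypothetical cascade: the logistic output is the sine map's input."""
    return sine(logistic(x, r), a)

def cascade_deriv(x: float, r: float, a: float) -> float:
    """Chain rule: sine'(logistic(x)) * logistic'(x)."""
    y = logistic(x, r)
    return (a / 4.0) * math.pi * math.cos(math.pi * y) * r * (1.0 - 2.0 * x)

def lyapunov(step, deriv, x0: float = 0.1, n: int = 20000, burn: int = 1000) -> float:
    """Estimate the Lyapunov exponent as the orbit average of log|f'(x)|."""
    x = x0
    for _ in range(burn):  # discard the transient
        x = step(x)
    total = 0.0
    for _ in range(n):
        total += math.log(max(abs(deriv(x)), 1e-300))  # guard against log(0)
        x = step(x)
    return total / n

def bifurcation_points(r_values, a: float, x0: float = 0.1, burn: int = 500, keep: int = 50):
    """For each r, iterate past the transient and record `keep` states.
    Plotting the returned (r, x) pairs gives a bifurcation diagram."""
    points = []
    for r in r_values:
        x = x0
        for _ in range(burn):
            x = cascade(x, r, a)
        for _ in range(keep):
            x = cascade(x, r, a)
            points.append((r, x))
    return points

if __name__ == "__main__":
    le_logistic = lyapunov(lambda x: logistic(x, 4.0), lambda x: 4.0 * (1.0 - 2.0 * x))
    le_cascade = lyapunov(lambda x: cascade(x, 4.0, 4.0), lambda x: cascade_deriv(x, 4.0, 4.0))
    print(f"logistic (r=4): {le_logistic:.3f}")
    print(f"cascade (r=4, a=4): {le_cascade:.3f}")
```

A positive exponent indicates chaos and a negative one a stable periodic orbit; for reference, the logistic map at r = 4 has the theoretical exponent ln 2 ≈ 0.693. Sweeping both seed parameters with `bifurcation_points` and `lyapunov` is one simple way to compare a cascade with its component maps.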

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

About this article

Cite this article

Abbasi, H., Yaghoobi, M., Teshnehlab, M. et al. Cascade chaotic neural network (CCNN): a new model. Neural Comput & Applic 34, 8897–8917 (2022). https://doi.org/10.1007/s00521-022-06912-3

DOI: https://doi.org/10.1007/s00521-022-06912-3