Abstract

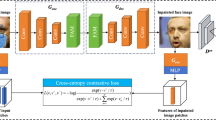

Face inpainting is a significant problem encountered in many image restoration tasks, in which various methods based on deep learning are explored. Existing methods cannot restore enough structure details as the masked input only provides limited information. In this paper, a novel reference-guided face inpainting method is proposed to generate inpainting results more similar to people themselves, which restores the missing pixels by referring to a reference image besides an original masked image. Concretely, another reference image with the same identity as the masked input is utilized as a conditional input to constrain the generated coarse result of the first inpainting stage. Furthermore, a reference attention module is designed to restore more textural details by computing the similarity between the pixels of the coarse result and the reference image. The similarity is further represented by the similarity maps, which are deconvolved to reconstruct the pixels of the missing regions. Extensive experimental results on CelebA datasets and LFW datasets demonstrate that our proposed method can generate an image with more similar features to people themselves and achieves superior performance to the state-of-the-art methods quantitatively and qualitatively.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.References

Barnes C, Shechtman E, Finkelstein A, Goldman DB (2009) Patchmatch: a randomized correspondence algorithm for structural image editing. ACM Trans Graph 28(3):24

Hays J, Efros AA (2007) Scene completion using millions of photographs. ACM Trans Graph (ToG) 26(3):4-es. https://doi.org/10.1145/1400181.1400202

Efros AA, Freeman WT (2001) Image quilting for texture synthesis and transfer. In: Proceedings of the 28th annual conference on Computer graphics and interactive techniques, pp 341-346 https://doi.org/10.1145/383259.383296

Efros AA, Leung TK (1999) Texture synthesis by non-parametric sampling. In: Proceedings of the seventh IEEE international conference on computer vision, IEEE vol 2, pp. 1033–1038. https://doi.org/10.1109/iccv.1999.790383

Wilczkowiak M, Brostow GJ, Tordoff B, Cipolla R (2005) Hole filling through photomontage. In: BMVC 2005-Proceedings of the British Machine Vision Conference 2005 https://doi.org/10.5244/c.19.52

Krizhevsky A, Sutskever I, Hinton GE (2012) Imagenet classification with deep convolutional neural networks. Adv Neural Inf Process Syst 25:1097–1105. https://doi.org/10.1145/3065386

Wang H, Peng J, Jiang G, Fu X (2021) Learning multiple semantic knowledge for cross-domain unsupervised vehicle re-identification. In: 2021 IEEE International Conference on Multimedia and Expo (ICME), IEEE, pp 1-6 https://doi.org/10.1109/icme51207.2021.9428440

Pathak D, Krahenbuhl P, Donahue J, Darrell T, Efros AA (2016) Context encoders: feature learning by inpainting. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2536–2544 https://doi.org/10.1109/cvpr.2016.278

Yu J, Lin Z, Yang J, Shen X, Lu X, Huang TS (2018) Generative image inpainting with contextual attention. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 5505–5514 https://doi.org/10.1109/cvpr.2018.00577

Yang C, Lu X, Lin Z, Shechtman E, Wang O, Li H (2017) High-resolution image inpainting using multi-scale neural patch synthesis. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 6721–6729 https://doi.org/10.1109/cvpr.2017.434

Yu J, Lin Z, Yang J, Shen X, Lu X, Huang TS (2019) Free-form image inpainting with gated convolution. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 4471–4480 https://doi.org/10.1109/iccv.2019.00457

Iizuka S, Simo-Serra E, Ishikawa H (2017) Globally and locally consistent image completion. ACM Trans Graph (ToG) 36(4):1–14. https://doi.org/10.1145/3072959.3073659

Liu G, Reda FA, Shih KJ, Wang TC, Tao A, Catanzaro B (2018) Image inpainting for irregular holes using partial convolutions. In: Proceedings of the European Conference on Computer Vision (ECCV), pp 85–100 https://doi.org/10.1007/978-3-030-01252-6_6

Li Y, Liu S, Yang J, Yang MH (2017) Generative face completion. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 3911–3919 https://doi.org/10.1109/cvpr.2017.624

Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, WardeFarley D, Ozair S, Courville A, Bengio Y (2014) Generative adversarial nets. Adv Neural Inf Process Syst 27

Shim G, Park J, Kweon IS (2020) Robust reference-based super-resolution with similarity-aware deformable convolution. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 8425–8434 https://doi.org/10.1109/cvpr42600.2020.00845

Yang F, Yang H, Fu J, Lu H, Guo B (2020) Learning texture transformer network for image super-resolution. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 5791–5800 https://doi.org/10.1109/cvpr42600.2020.00583

Zhang Z, Wang Z, Lin Z, Qi H (2019) Image super-resolution by neural texture transfer. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 7982–7991 https://doi.org/10.1109/cvpr.2019.00817

Zheng H, Guo M, Wang H, Liu Y, Fang L (2017) Combining exemplar-based approach and learning-based approach for light field super-resolution using a hybrid imaging system. In: Proceedings of the IEEE international conference on computer vision workshops, pp 2481–2486 https://doi.org/10.1109/iccvw.2017.292

Zheng H, Ji M, Wang H, Liu Y, Fang L (2018) Crossnet: An end-to-end reference-based super resolution network using cross-scale warping. In: Proceedings of the European conference on computer vision (ECCV), pp 88–104 https://doi.org/10.1007/978-3-030-01231-1_6

Zheng H, Ji M, Han L, Xu Z, Wang H, Liu Y, Fang L (2017) Learning cross-scale correspondence and patch-based synthesis for reference-based super-resolution. In:BMVC, vol 1, p 2 https://doi.org/10.5244/c.31.138

Ballester C, Bertalmio M, Caselles V, Sapiro G, Verdera J (2001) Filling-in by joint interpolation of vector fields and gray levels. IEEE Trans Image Process 10(8):1200–1211. https://doi.org/10.1109/83.935036

Bertalmio M, Sapiro G, Caselles V, Ballester C (2000) Image inpainting. In: Proceedings of the 27th annual conference on Computer graphics and interactive techniques, pp 417–424

Levin A, Zomet A, Weiss Y (2003) Learning how to inpaint from global image statistics. ICCV 1:305–312. https://doi.org/10.1109/iccv.2003.1238360

Simakov D, Caspi Y, Shechtman E, Irani M (2008) Summarizing visual data using bidirectional similarity. In: 2008 IEEE conference on computer vision and pattern recognition, IEEE, pp 1–8 https://doi.org/10.1109/cvpr.2008.4587842

Wang H, Peng J, Zhao Y, Fu X (2020) Multi-path deep CNNs for fine-grained car recognition. IEEE Trans Veh Technol 69(10):10484–10493. https://doi.org/10.1109/tvt.2020.3009162

Ding Y, Ma Z, Wen S, Xie J, Chang D, Si Z, Liang WuMH (2021) AP-CNN: weakly supervised attention pyramid convolutional neural network for fine-grained visual classification. IEEE Trans Image Process 30:2826–2836. https://doi.org/10.1109/tip.2021.3055617

Chang D, Ding Y, Xie J, Bhunia AK, Li X, Ma Z, Wu M, Guo J, Song YZ (2020) The devil is in the channels: mutual-channel loss for fine-grained image classification. IEEE Trans Image Process 29:4683–4695. https://doi.org/10.1109/tip.2020.2973812

Deng Q, Li Q, Cao J, Liu Y, Sun Z (2020) Controllable multi-attribute editing of high-resolution face images. IEEE Trans Inf Forensics Secur 16:1410–1423. https://doi.org/10.1109/tifs.2020.3033184

Yeh RA, Chen C, Yian Lim T, Schwing AG, Hasegawa-Johnson M, Do MN (2017) Semantic image inpainting with deep generative models. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 5485–5493 https://doi.org/10.1109/cvpr.2017.728

Que Y, Li S, Lee HJ (2020) Attentive composite residual network for robust rain removal from single images. IEEE Trans Multimedia. https://doi.org/10.1109/tmm.2020.3019680

Nazeri, Ng, Joseph, Qureshi, and Ebrahimi. Nazeri K, Ng E, Joseph T, Qureshi FZ, Ebrahimi M (2019) Edgeconnect: Generative image inpainting with adversarial edge learning. arXiv:190100212

Zhang W, Zhu J, Tai Y, Wang Y, Chu W, Ni B, Wang C, Yang X (2021) Context-aware image inpainting with learned semantic priors. arXiv:210607220

He R, Cao J, Song L, Sun Z, Tan T (2019) Adversarial cross-spectral face completion for NIR-VIS face recognition. IEEE Trans Pattern Anal Mach Intell 42(5):1025–1037. https://doi.org/10.1109/tpami.2019.2961900

Zhao Y, Price B, Cohen S, Gurari D (2019) Guided image inpainting: Replacing an image region by pulling content from another image. In: 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), IEEE, pp 1514–1523 https://doi.org/10.1109/wacv.2019.00166

Whyte O, Sivic J, Zisserman A (2009) Get out of my picture! internet-based inpainting. In: BMVC, vol 2, p 5 https://doi.org/10.5244/c.23.116

Hays J, Efros AA (2007) Scene completion using millions of photographs. ACM Trans Graph (ToG) 26(3):4-es. https://doi.org/10.1145/1276377.1276382

Huang JB, Kang SB, Ahuja N, Kopf J (2014) Image completion using planar structure guidance. ACM Trans Graph (ToG) 33(4):1–10. https://doi.org/10.1145/2601097.2601205

Yang Y, Guo X (2020) Generative landmark guided face inpainting. In: Chinese conference on pattern recognition and computer vision (PRCV), Springer, pp 14-26 https://doi.org/10.1007/978-3-030-60633-6_2

Li J, Li Z, Cao J, Song X, He R (2021) Faceinpainter: high fidelity face adaptation to heterogeneous domains. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 5089–5098

Wang, T. C., Liu, M. Y., Zhu, J. Y., Tao, A., Kautz, J., Catanzaro, B. (2018) High-resolution image synthesis and semantic manipulation with conditional GANs. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp 8798–8807). https://doi.org/10.1109/cvpr.2018.00917

Zhang R, Zhu JY, Isola P, Geng X, Lin AS, Yu T, Efros AA (2017) Real-time user-guided image colorization with learned deep priors. arXiv:170502999

Sangkloy P, Lu J, Fang C, Yu F, Hays J (2017) Scribbler: Controlling deep image synthesis with sketch and color. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 5400–5409 https://doi.org/10.1109/cvpr.2017.723

Li Y, Luo Y, Lu J (2021) Reference-guided deep deblurring via a selective attention network. Appl Intell. https://doi.org/10.1007/s10489-021-02585-y

Zhou Y, Barnes C, Shechtman E, Amirghodsi S (2021) Transfill: reference-guided image inpainting by merging multiple color and spatial transformations. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 2266–2276

Liu Z, Luo P, Wang X, Tang X (2015) Deep learning face attributes in the wild. In: Proceedings of the IEEE international conference on computer vision, pp 3730–3738 https://doi.org/10.1109/iccv.2015.425

Huang GB, Mattar M, Berg T, Learned-Miller E (2008, October) Labeled faces in the wild: a database forstudying face recognition in unconstrained environments. In: Workshop on faces in’Real-Life’Images: detection, alignment, and recognition

Acknowledgements

This work was supported by Hebei University High-level Scientific Research Foundation for the introduction of talent (No.521100221029).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Yu, J., Li, K. & Peng, J. Reference-guided face inpainting with reference attention network. Neural Comput & Applic 34, 9717–9731 (2022). https://doi.org/10.1007/s00521-022-06961-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00521-022-06961-8