Abstract

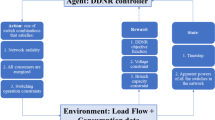

The relay protection system plays an important role in the safe and stable operation of the distribution network (DN). Traditional model-based relay protection algorithms struggle to cope with the growing uncertainty that distributed generation (DG) access introduces into DN security. To address this issue, first, the relay protection characteristics of the DN under DG access are analyzed; second, the DN relay protection problem is transformed into a multiagent reinforcement learning (RL) problem; third, a distributed DN protection method based on multiagent deep deterministic policy gradient (MADDPG) is proposed. The advantage of this method is that no DN security model needs to be built in advance, so it can effectively overcome the impact of DG-induced uncertainty on DN security. Extensive experiments show the effectiveness of the proposed algorithm.

Abbreviations

- DN: Distribution network
- DG: Distributed generation
- RL: Reinforcement learning
- DDPG: Deep deterministic policy gradient
- MADDPG: Multiagent deep deterministic policy gradient
- AC: Alternating current
- NLP: Nonlinear programming
- MPSO: Modified particle swarm optimization
- MG: Micro grid
- OC: Overcurrent
- AI: Artificial intelligence
- ANN: Artificial neural network
- TD: Temporal difference
- DQN: Deep Q-learning network
- GA: Genetic algorithm
- LLG: Line to Line to Ground
- SLG: Single Line to Ground
- LL: Line to Line


Acknowledgements

This work was supported in part by the National Key Research and Development Program of China under Grant 2018YFB1700103, in part by the Science and Technology Project of State Grid Zhejiang Electric Power Company Ltd under Grant B311SX210003, and in part by the Science and Technology Project of State Grid Liaoning Electric Power Company Ltd under Grant 2021YF-39.

Ethics declarations

Conflicts of Interest

There are no potential competing interests in this paper. All authors have seen the manuscript and approved its submission to the journal. We confirm that the content of the manuscript has not been published or submitted for publication elsewhere.

About this article

Cite this article

Zeng, P., Cui, S., Song, C. et al. A multiagent deep deterministic policy gradient-based distributed protection method for distribution network. Neural Comput & Applic 35, 2267–2278 (2023). https://doi.org/10.1007/s00521-022-06982-3

DOI: https://doi.org/10.1007/s00521-022-06982-3