Abstract

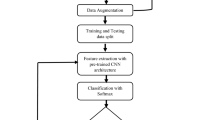

Behavior recognition in video sequences is an active research topic in computer vision. Human action recognition allows machines to analyze and understand human activities from input data sources such as sensors and multimedia content. It has been widely applied in public security, video surveillance, robotics, video game control, and industrial automation. In these applications, however, gestures are formed by complex temporal sequences of motions. This raises many problems: variations in the style, body shape, and size of the action or gesture performers, and even in the way the same signer performs a motion, as well as problems related to the scene, such as illumination changes and background complexity. Moreover, processing large-scale continuous gesture data makes these problems more challenging, because the boundaries of each gesture embedded in the continuous sequence are unknown. In this work, we present a novel deep architecture for isolated action recognition and large-scale continuous hand gesture recognition using RGB, depth, and grayscale video sequences. The proposed approach, called End-to-End Deep Gesture Recognition Process (E2E-DGRP), builds a new representation of the data by extracting gesture characteristics, then analyzes each sequence to detect and recognize the corresponding actions. First, a segmentation module based on a motion indicator method spots the start and end boundaries of each gesture and splits continuous gesture sequences into isolated gestures. Then, a proposed method named "Deep Signature" generates a set of discriminative features characterizing motion location, motion velocity, and motion orientation. These features are fed to an additional neural network layer, a Softmax classifier that serves as the output layer for gesture classification.
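
The abstract does not define the motion indicator or the Deep Signature network in detail, so the following Python is only a minimal, hand-crafted sketch of the three steps under stated assumptions, not the authors' implementation. It assumes the motion indicator is the mean absolute difference between consecutive frames, thresholded to find where a gesture starts and ends; it replaces the learned Deep Signature with a simple stand-in that tracks the centre of motion to derive location, velocity (speed in pixels per frame), and orientation (direction angle); and it ends with a standard softmax over class scores. All function names, the `threshold` and `min_len` parameters, and the centroid-based descriptor are hypothetical.

```python
import math
from typing import List, Optional, Tuple

Frame = List[List[float]]  # one grayscale or depth frame, as rows of pixel values


def abs_diff(prev: Frame, curr: Frame) -> Frame:
    """Per-pixel absolute difference between two consecutive frames."""
    return [[abs(c - p) for p, c in zip(rp, rc)] for rp, rc in zip(prev, curr)]


def motion_energy(diff: Frame) -> float:
    """Hypothetical motion indicator: mean absolute frame difference."""
    n = sum(len(row) for row in diff)
    return sum(sum(row) for row in diff) / n if n else 0.0


def segment_gestures(frames: List[Frame], threshold: float,
                     min_len: int = 2) -> List[Tuple[int, int]]:
    """Split a continuous sequence into inclusive (start, end) frame ranges
    wherever the motion indicator stays above `threshold` (assumed rule)."""
    segments: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for t in range(1, len(frames)):
        moving = motion_energy(abs_diff(frames[t - 1], frames[t])) > threshold
        if moving and start is None:
            start = t - 1                       # motion begins leaving frame t-1
        elif not moving and start is not None:
            if (t - 1) - start + 1 >= min_len:  # motion ended at frame t-1
                segments.append((start, t - 1))
            start = None
    if start is not None and (len(frames) - 1) - start + 1 >= min_len:
        segments.append((start, len(frames) - 1))  # gesture runs to the last frame
    return segments


def motion_centroid(diff: Frame) -> Optional[Tuple[float, float]]:
    """(x, y) centre of mass of a difference map, or None if nothing moved."""
    total = sum(sum(row) for row in diff)
    if total == 0:
        return None
    x = sum(v * j for row in diff for j, v in enumerate(row)) / total
    y = sum(v * i for i, row in enumerate(diff) for v in row) / total
    return x, y


def motion_descriptor(frames: List[Frame]) -> List[Tuple[float, float, float, float]]:
    """Hand-crafted stand-in for location/velocity/orientation features.
    Returns, per step, (x, y, speed in px/frame, direction in radians);
    steps where nothing moved are skipped."""
    centroids = [motion_centroid(abs_diff(a, b)) for a, b in zip(frames, frames[1:])]
    points = [c for c in centroids if c is not None]
    feats = []
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        dx, dy = x1 - x0, y1 - y0
        feats.append((x1, y1, math.hypot(dx, dy), math.atan2(dy, dx)))
    return feats


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax turning class scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]
```

On a synthetic sequence in which a small bright blob appears, slides to the right, and then stops, `segment_gestures(frames, 0.01)` returns a single `(start, end)` range covering the moving frames, and `motion_descriptor` on that range yields a constant direction of 0 radians (rightward). The threshold would likely need tuning per dataset and modality, since depth and grayscale frames differ in noise level.
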
The performance of our approach is demonstrated by experiments carried out on three well-known datasets: KTH and Weizmann for isolated actions in the RGB and grayscale modalities, and the Chalearn LAP ConGD dataset for continuous gestures in depth. A first experimental study shows that the recognition results obtained by our method outperform those of previously published approaches, with accuracies of 97.5% and 98.7% on the KTH and Weizmann datasets, respectively. A second experimental study, conducted on the Chalearn LAP ConGD dataset, shows that our strategy outperforms other methods, reaching a mean Jaccard Index of 0.6661.
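
The mean Jaccard Index reported for continuous gestures scores how well predicted gesture intervals overlap the ground-truth intervals, frame by frame. The sketch below assumes the usual per-label definition J = |A ∩ B| / |A ∪ B|, where A and B are the sets of frames assigned to a label in the ground truth and in the prediction, averaged over the labels that occur in either and then over all sequences. The segment format and function names are hypothetical, and the official ChaLearn scoring code should be treated as authoritative, in particular for labels that appear only in the prediction.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical segment format: (gesture label, first frame, last frame), inclusive.
Segment = Tuple[str, int, int]


def frames_by_label(segments: List[Segment]) -> Dict[str, Set[int]]:
    """Map each gesture label to the set of frames covered by any of its instances."""
    frames: Dict[str, Set[int]] = {}
    for label, start, end in segments:
        if end < start:
            raise ValueError(f"empty segment for {label!r}: {start}..{end}")
        frames.setdefault(label, set()).update(range(start, end + 1))
    return frames


def sequence_jaccard(truth: List[Segment], pred: List[Segment]) -> float:
    """Mean per-label Jaccard index |A & B| / |A | B| for one sequence.

    Assumption: a label present only in the prediction (or only in the
    ground truth) contributes a score of 0 and still counts in the mean.
    """
    gt, pr = frames_by_label(truth), frames_by_label(pred)
    labels = set(gt) | set(pr)
    if not labels:
        raise ValueError("no gestures in ground truth or prediction")
    total = 0.0
    for label in sorted(labels):  # sorted for a deterministic summation order
        a, b = gt.get(label, set()), pr.get(label, set())
        total += len(a & b) / len(a | b)
    return total / len(labels)


def mean_jaccard(pairs: List[Tuple[List[Segment], List[Segment]]]) -> float:
    """Average the per-sequence scores over (truth, prediction) pairs."""
    return sum(sequence_jaccard(t, p) for t, p in pairs) / len(pairs)


if __name__ == "__main__":
    truth = [("wave", 0, 9), ("point", 15, 24)]
    pred = [("wave", 2, 9), ("clap", 15, 24)]
    # wave: 8 shared frames out of 10 -> 0.8; point and clap -> 0 each
    print(sequence_jaccard(truth, pred))  # (0.8 + 0.0 + 0.0) / 3, about 0.267
```

Under this assumed definition, a prediction that finds the right interval but assigns it the wrong label is penalized twice, once for the missed true label and once for the spurious predicted one.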

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Mahmoud, R., Belgacem, S. & Omri, M. Towards an end-to-end isolated and continuous deep gesture recognition process. Neural Comput & Applic 34, 13713–13732 (2022). https://doi.org/10.1007/s00521-022-07165-w

DOI: https://doi.org/10.1007/s00521-022-07165-w