Abstract

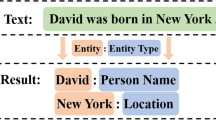

Medical concept normalization aims to construct a semantic mapping between mentions and concepts and to represent mentions that belong to the same concept uniformly. In large-scale biomedical literature databases, a fast concept normalization method is essential for processing large numbers of requests and documents. To this end, we propose FastMCN, a hierarchical concept normalization method with much lower computational cost, together with stack and index optimized self-attention (SISA), a variant of the transformer encoder that improves both efficiency and performance. In training, FastMCN uses SISA as a word encoder that builds word representations from character sequences, and a mention encoder that summarizes the word representations into a mention representation. In inference, FastMCN indexes and summarizes word representations to represent a query mention and outputs the concept with the maximum cosine similarity to the mention. To further improve performance, SISA was pre-trained with the continuous bag-of-words architecture on 18.6 million PubMed abstracts. All experiments were conducted on two publicly available datasets: NCBI disease and BC5CDR disease. The results showed that SISA encoded word representations three times faster than the transformer encoder while achieving better performance. Benefiting from SISA, FastMCN was efficient in both training and inference: it reached the peak performance of most baseline methods within 30 s and was 3000–5600 times faster than the state-of-the-art method in inference.
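The inference step described in the abstract can be illustrated with a minimal sketch. It assumes that word vectors have already been produced by a word encoder and stored in a lookup table (so no encoder runs at query time), that mentions are tokenized by lowercase whitespace splitting, that word vectors are summarized by averaging, and that each concept is represented by encoding one of its names in the same way. These are illustrative assumptions, not FastMCN's exact tokenizer or mention encoder; the vectors and concept keys are hypothetical toy values.

```python
import math
from typing import Dict, List, Sequence

Vector = List[float]


def mean_pool(vectors: Sequence[Vector]) -> Vector:
    """Summarize word vectors into one mention vector by averaging (assumed summarizer)."""
    dim = len(vectors[0])
    return [sum(v[k] for v in vectors) / len(vectors) for k in range(dim)]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def encode_mention(mention: str, word_index: Dict[str, Vector]) -> Vector:
    """Look up precomputed word vectors (no encoder call at query time) and pool them.

    Lowercase whitespace tokenization is an assumption made for this sketch.
    """
    known = [word_index[w] for w in mention.lower().split() if w in word_index]
    if not known:
        raise ValueError(f"no indexed words in mention: {mention!r}")
    return mean_pool(known)


def normalize(mention: str, word_index: Dict[str, Vector], concepts: Dict[str, Vector]) -> str:
    """Return the concept key whose vector has the maximum cosine similarity to the mention."""
    query = encode_mention(mention, word_index)
    return max(concepts, key=lambda cid: cosine(query, concepts[cid]))


# Hypothetical toy word index (in FastMCN these would come from the trained word encoder).
word_index = {
    "breast": [1.0, 0.0, 0.0],
    "cancer": [0.0, 1.0, 0.0],
    "carcinoma": [0.0, 0.9, 0.1],
    "colon": [0.0, 0.0, 1.0],
}
# Hypothetical concepts, each represented by encoding one of its names.
concepts = {
    "breast_neoplasm": encode_mention("breast carcinoma", word_index),
    "colon_neoplasm": encode_mention("colon carcinoma", word_index),
}
print(normalize("Breast cancer", word_index, concepts))  # breast_neoplasm
```

Because the per-word vectors are precomputed, the query-time cost in this sketch reduces to dictionary lookups, an average, and a linear scan of cosine similarities; a larger system would likely replace the scan with a vectorized or approximate nearest-neighbor search.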



Funding

Funding was provided by the National Natural Science Foundation of China (Grant No. 61871141), the Natural Science Foundation of Guangdong Province (Grant No. 2021A1515011339), and the Collaborative Innovation Team of Guangzhou University of Traditional Chinese Medicine (Grant No. 2021XK08).


Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest related to this work and no commercial or associative interest that represents a conflict of interest in connection with the work submitted.


Appendix A: Proof of Eq. 6


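Given \(E_{char} \in R^{C \times D_{emb}}\) and \(W_{Q} \in R^{D_{model} \times D_{emb}}\), let i be an integer with \(i \in [0, C - 1]\), and write \(E_{char}[i]\) for the ith row of \(E_{char}\). Let

\[ a = E_{char}[i]\, W_{Q}^{T}, \]

that is, a denotes the ith row of \(E_{char}\) multiplied by \(W_{Q}^{T}\). Similarly, let

\[ b = \left(E_{char} W_{Q}^{T}\right)[i], \]

that is, b denotes the ith row of \(E_{char} W_{Q}^{T}\). The (i, j) entry of \(E_{char} W_{Q}^{T}\) is the dot product of the ith row of \(E_{char}\) and the jth column of \(W_{Q}^{T}\), so for every \(j \in [0, D_{model} - 1]\) the jth entry of b equals the jth entry of a. Thus,

\[ E_{char}[i]\, W_{Q}^{T} = \left(E_{char} W_{Q}^{T}\right)[i], \quad \text{i.e.}\ a = b. \]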
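A minimal pure-Python sketch of why this identity matters for efficiency: because taking the ith row commutes with right-multiplication by \(W_{Q}^{T}\), the projection of every character can be computed once into a \(C \times D_{model}\) table, and projecting a character sequence then reduces to gathering rows from that table. The sizes, random weights, and character ids below are hypothetical, and this is an illustration of the identity rather than the authors' SISA code; the identity itself holds for any projection matrix of matching shape.

```python
import random
from typing import List

Matrix = List[List[float]]


def matmul_transposed(a: Matrix, w: Matrix) -> Matrix:
    """Compute a @ w.T, where a is (n x d_emb) and w is (d_model x d_emb)."""
    return [[sum(x * y for x, y in zip(row, w_row)) for w_row in w] for row in a]


def project_each(char_ids: List[int], e_char: Matrix, w_q: Matrix) -> Matrix:
    """Naive path: take the embedding row first, then project it (one product per character)."""
    return [matmul_transposed([e_char[i]], w_q)[0] for i in char_ids]


def project_by_index(char_ids: List[int], q_table: Matrix) -> Matrix:
    """Indexed path: gather rows of the precomputed table E_char W_Q^T."""
    return [q_table[i] for i in char_ids]


random.seed(0)
C, D_EMB, D_MODEL = 30, 8, 4  # hypothetical alphabet size, embedding dim, model dim
e_char = [[random.uniform(-1, 1) for _ in range(D_EMB)] for _ in range(C)]
w_q = [[random.uniform(-1, 1) for _ in range(D_EMB)] for _ in range(D_MODEL)]

q_table = matmul_transposed(e_char, w_q)  # C x D_MODEL, computed once
char_ids = [7, 2, 19, 2, 0]               # hypothetical character-id sequence

naive = project_each(char_ids, e_char, w_q)
indexed = project_by_index(char_ids, q_table)
# Exact equality holds here: both paths compute each entry with the same float operations.
assert naive == indexed
```

In this sketch the per-sequence work drops from one \(D_{emb} \times D_{model}\) product per character to one row lookup per character, at the cost of storing the \(C \times D_{model}\) table.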


About this article

Cite this article

Liang, L., Hao, T., Zhan, C. et al. Fast medical concept normalization for biomedical literature based on stack and index optimized self-attention. Neural Comput & Applic 34, 16311–16324 (2022). https://doi.org/10.1007/s00521-022-07228-y


DOI: https://doi.org/10.1007/s00521-022-07228-y