Abstract

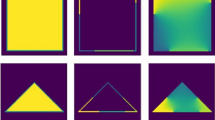

Deep neural networks (DNNs) are promising alternatives for simulating physical problems. These networks can eliminate the need for numerical iterations. DNNs can learn the governing physics of engineering problems through a training process. The structure of the deep network and the parameters of the training process are two basic factors that influence the simulation accuracy of DNNs. The loss function is the main part of the training process and determines the goal of training. During training, the loss function is regularly used to adapt the parameters of the deep network. Using DNNs to learn physical images is a novel topic and demands novel loss functions that capture the physical meaning. Thus, for the first time, the present study aims to develop new loss functions to enhance the training process of DNNs. Here, three novel loss functions were introduced and examined for estimating the temperature distributions in thermal conduction problems. The images of temperature distribution obtained in the present research were systematically compared with the literature data. The results showed that one of the introduced loss functions could significantly outperform the loss functions available in the literature. Using a new loss function improved the mean error by 67.1%. Moreover, using the new loss functions eliminated 96% of the pixel predictions with large errors.
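The two kinds of figures quoted above (a mean error and a count of pixels with large errors) can be illustrated with a minimal sketch. It is not the study's evaluation code: the function names, the error threshold, and the toy arrays are all assumptions chosen for illustration, and the exact error definitions used in the paper may differ.

```python
import numpy as np

def mean_abs_error(pred, ref):
    """Mean absolute per-pixel error between two temperature images."""
    return float(np.mean(np.abs(pred - ref)))

def large_error_fraction(pred, ref, threshold):
    """Fraction of pixels whose absolute error exceeds `threshold`.

    The threshold is a hypothetical parameter; the source does not
    state how a "large" error is defined.
    """
    return float(np.mean(np.abs(pred - ref) > threshold))

def relative_reduction(baseline, improved):
    """Percentage reduction of `improved` relative to `baseline`."""
    return 100.0 * (baseline - improved) / baseline

# Toy 2x2 "temperature images" (illustrative values, not data from the study).
ref = np.array([[0.0, 1.0], [2.0, 3.0]])
pred = np.array([[0.0, 1.5], [2.0, 5.0]])

mae = mean_abs_error(pred, ref)               # (0 + 0.5 + 0 + 2) / 4
frac = large_error_fraction(pred, ref, 1.0)   # one of four pixels exceeds 1.0
gain = relative_reduction(2.0, 0.5)           # hypothetical baseline vs. new error
```

A statement such as "improved the mean error by X%" would then correspond to `relative_reduction` applied to the mean errors obtained with a baseline loss and with a new loss, under the assumption that the improvement is reported relative to the baseline.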


Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest to report.

About this article

Cite this article

Edalatifar, M., Ghalambaz, M., Tavakoli, M.B. et al. New loss functions to improve deep learning estimation of heat transfer. Neural Comput & Applic 34, 15889–15906 (2022). https://doi.org/10.1007/s00521-022-07233-1

DOI: https://doi.org/10.1007/s00521-022-07233-1