Abstract

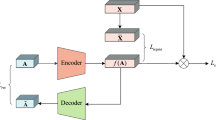

Generalized zero-shot domain adaptation (GZSDA) aims to classify target-domain samples from both seen and unseen classes, using labeled source-domain data for all classes and labeled target-domain data for the seen classes only. GZSDA is more challenging than either zero-shot learning or domain adaptation alone, because it must handle the domain shift and the absence of target data for the unseen classes at the same time. We propose a GZSDA method based on target unseen class prototype learning (TUPL). It learns prototypes for the unseen classes in the target domain, so that a test sample can be assigned to a seen or unseen class according to its distance to the target-domain class prototypes. To cope with the domain difference, we project the source and target samples into a common subspace in which samples of the same class lie close together. To strengthen intra-class compactness, we pull samples toward their class prototypes while maintaining data variance, which yields discriminative representations in the subspace. We then learn the target unseen class prototypes from the relationships between the source and target domains and between the seen and unseen classes, which makes the learned prototypes more accurate. Evaluations on GZSDA datasets show that TUPL outperforms existing methods.
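The distance-based decision rule described above can be illustrated with a minimal sketch. This is not the authors' TUPL implementation: the function names `class_prototypes` and `classify`, the class labels, the two-dimensional features, and the hard-coded unseen-class prototype are all hypothetical. In particular, seen-class prototypes are taken here as plain class means, and the unseen-class prototype is simply assumed to have been learned already.

```python
import math
from typing import Dict, List, Sequence


def class_prototypes(
    features: Sequence[Sequence[float]], labels: Sequence[str]
) -> Dict[str, List[float]]:
    """Return the mean feature vector of each class (an assumed prototype definition)."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for x, y in zip(features, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
            counts[y] = 0
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def classify(sample: Sequence[float], prototypes: Dict[str, List[float]]) -> str:
    """Assign the sample to the class whose prototype is nearest in Euclidean distance."""
    return min(prototypes, key=lambda c: math.dist(sample, prototypes[c]))


if __name__ == "__main__":
    # Toy labeled target-domain features for two seen classes (hypothetical data).
    seen_features = [[0.0, 0.0], [0.2, 0.0], [1.0, 1.0], [1.0, 1.2]]
    seen_labels = ["cat", "cat", "dog", "dog"]
    prototypes = class_prototypes(seen_features, seen_labels)

    # Placeholder for an unseen-class prototype; in TUPL this would be learned,
    # here it is simply assumed for illustration.
    prototypes["zebra"] = [3.0, 3.0]

    print(classify([0.1, 0.1], prototypes))  # nearest to the "cat" prototype
    print(classify([2.8, 3.1], prototypes))  # nearest to the "zebra" prototype
```

Because seen and unseen prototypes live in the same dictionary, a test sample competes against both groups at once, which is what distinguishes the generalized setting from a classifier restricted to unseen classes.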

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Grant Nos. 61806155, 62176197), the China Postdoctoral Science Foundation funded project (Grant No. 2018M631125), the National Natural Science Foundation of Shaanxi Province (Grant Nos. 2020JQ-323, 2020GY-062), the Natural Science Foundation of Anhui Province (Grant No. 1908085MF186), and the Fundamental Research Funds for the Central Universities (Grant No. XJS200303).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Li, X., Fang, M. & Chen, B. Generalized zero-shot domain adaptation with target unseen class prototype learning. Neural Comput & Applic 34, 17793–17807 (2022). https://doi.org/10.1007/s00521-022-07413-z

DOI: https://doi.org/10.1007/s00521-022-07413-z