Abstract

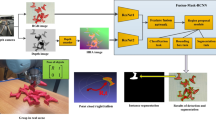

Object affordance detection aims to identify, locate and segment the functional regions of objects so that robots can understand and manipulate objects as humans do. The task poses two main challenges: (1) because the robot needs accurate positioning information to manipulate objects, the affordance segmentation results must have high boundary quality; (2) objects of different kinds can differ significantly in appearance yet share the same affordance, and conversely, parts with the same appearance may have different affordances. Existing methods treat affordance detection as an image segmentation problem and do not focus on the boundary quality of the detection results. In addition, most existing methods do not consider the potential relationship between object categories and object affordances. To address these problems, we propose a boundary-preserving network (BPN) that provides affordance masks with better boundary quality for robotic manipulation. Our framework contains three new components: the IoU (Intersection-over-Union) branch, the affordance boundary branch and the relationship attention module. The IoU branch predicts the IoU score of each object bounding box. The affordance boundary branch guides the network to learn the boundary features of objects. The relationship attention module enhances the feature representation capability of the network by exploring the potential relationship between object categories and object affordances. Experiments show that our method improves the boundary quality of the predicted affordance masks. On the IIT-AFF dataset, the proposed BPN outperforms the strong baseline by 2.32% (F-score) on affordance masks and by 2.89% (F-score) on the boundaries of affordance masks. Furthermore, real-world robot manipulation experiments show that the proposed BPN can provide accurate affordance information for robots to manipulate objects.
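For readers unfamiliar with the two quantities named in the abstract, the following is a minimal sketch of how a bounding-box IoU and a mask F-score are commonly computed. It is not the authors' implementation: the function names `box_iou` and `mask_f_score`, the `(x1, y1, x2, y2)` box layout, and the plain precision/recall form of the F-score are assumptions made for illustration, and the evaluation protocol used in the paper may differ.

```python
def box_iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2) (assumed layout)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region, clamped at zero for disjoint boxes.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def mask_f_score(pred, target, beta=1.0):
    """F-beta score between two binary masks given as nested lists of 0/1."""
    tp = fp = fn = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                tp += 1  # pixel predicted and labelled as the affordance
            elif p:
                fp += 1  # predicted but not labelled
            elif t:
                fn += 1  # labelled but missed
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


if __name__ == "__main__":
    print(box_iou((0, 0, 2, 2), (1, 1, 3, 3)))
    print(mask_f_score([[1, 1], [0, 0]], [[1, 0], [0, 0]]))
```

An IoU branch of the kind described would regress a value like the one returned by `box_iou` for each predicted box, while the reported F-score gains would be measured with a metric in the spirit of `mask_f_score`, applied to full masks and to their boundary pixels.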

Data availability

The public IIT-AFF dataset is used in this project.

Code availability

The project is still in progress; part of the source code will be released once it is complete.

Funding

No funding was received for conducting this study.

Author information

Contributions

CY is responsible for the main research work and paper writing. QZ is responsible for the guidance of the project.

Ethics declarations

Conflicts of interest

The authors declare that they have no conflicts of interest related to this work.

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

All authors have read this manuscript and would like to have it considered exclusively for publication in Neural Computing and Applications.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Yin, C., Zhang, Q. Object affordance detection with boundary-preserving network for robotic manipulation tasks. Neural Comput & Applic 34, 17963–17980 (2022). https://doi.org/10.1007/s00521-022-07446-4

DOI: https://doi.org/10.1007/s00521-022-07446-4