Abstract

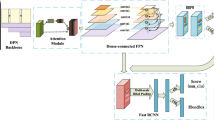

Multi-scale object detection is a central problem in object detection and is particularly important for ship detection. To handle objects of different scales, most advanced Convolutional Neural Network-based detectors enumerate multi-resolution feature maps and make inferences over them. However, existing methods suffer from two critical problems: (1) anchor settings and supervision that are over-fitted to object scales restrict the generalization performance of the algorithm; (2) similar multi-resolution prediction branches isolate the feature space and prevent branches at different levels from learning from one another. Drawing on the human cognitive process, this paper proposes a novel structure for multi-scale rotated ship detection called the Feature Attention Transfer module, which generates and transfers attention across multi-level feature maps to direct each prediction branch to focus on the features that are not well extracted in other branches. Accordingly, a customized supervision method called “Inclusion–Exclusion Learning” is proposed for associative learning based on the prediction results of the multi-scale branches. We employ an anchor-free rotated ship detection framework to verify the proposed module. Extensive experiments on three optical remote sensing image datasets demonstrate the effectiveness of the proposed algorithm, called SKFat. Experimental results show that the proposed modules improve the multi-resolution detection framework while introducing negligible inference overhead. The best result of the proposed algorithm achieves state-of-the-art average precision at a high inference speed.

Funding

This work was supported in part by the Natural Science Foundation of China under Grant 71671178 and in part by the Equipment Advance Research Fund 6142502180101. It was also supported by the Fundamental Research Funds for the Central Universities.

Ethics declarations

Conflict of interest

We declare that we have no financial or personal relationships with other people or organizations that could inappropriately influence our work. There is no professional or other personal interest of any nature or kind in any product, service and/or company that could be construed as influencing the position presented in, or the review of, this manuscript.

About this article

Cite this article

Cui, Z., Liu, Y., Zhao, W. et al. Learning to transfer attention in multi-level features for rotated ship detection. Neural Comput & Applic 34, 19831–19844 (2022). https://doi.org/10.1007/s00521-022-07491-z

DOI: https://doi.org/10.1007/s00521-022-07491-z