Abstract

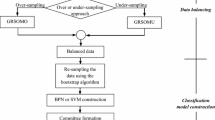

The application of clustering to generate random subspaces has improved the accuracy and diversity of ensemble classification methods. If clusters are not balanced (clusters of unequal size) and not strong (an unequal number of samples from each class in each cluster), the results are biased toward the classes with more samples in each cluster. This paper presents a novel strong balance-constrained clustering method, also called hard-strong clustering. The method creates diverse, strong, and balanced data clusters that are used to train different base classifiers and an artificial neural network with more than one hidden layer; the final class of each sample is decided by majority voting. The proposed method is applied to 16 datasets with two objectives: improving the performance of the ensemble classifier and the deep learning-based method (the data mining objective), and helping decision-makers adopt appropriate policies for allocating budget, time, and energy across business domains (the business objective). The evaluation and comparison of results show that the proposed method is faster than other balancing methods. Furthermore, the proposed ensemble method achieves an acceptable improvement in accuracy over other ensemble classification methods.
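The abstract defines two cluster properties: a balanced cluster has the same size as the others, and a strong cluster holds the same number of samples from each class. The Python sketch below illustrates both properties together with per-cluster training and majority voting. It is not the authors' strong balance-constrained clustering: the cluster centers are assumed to be supplied by the caller (for example, from a prior K-means run), and the greedy capacity-constrained assignment (`strong_balanced_assign`) and the nearest-centroid base learner (`train_nearest_centroid`) are hypothetical stand-ins; the paper's multi-layer neural network is omitted.

```python
import math
from collections import Counter


def strong_balanced_assign(points, labels, centers):
    """Assign each point to one of len(centers) clusters so that every cluster
    gets (almost) the same number of points from every class.

    Hypothetical sketch: greedy, capacity-constrained nearest-center assignment
    run separately per class; not the paper's algorithm.
    """
    k = len(centers)
    assignment = [None] * len(points)
    by_class = {}
    for i, c in enumerate(labels):
        by_class.setdefault(c, []).append(i)

    offset = 0  # rotates the "+1" remainder slots so total cluster sizes stay within 1
    for idx in by_class.values():
        base, rem = divmod(len(idx), k)
        caps = [base] * k
        for t in range(rem):
            caps[(offset + t) % k] += 1
        offset = (offset + rem) % k

        load = [0] * k
        # Visit (distance, point, cluster) pairs from closest to farthest and
        # take each one while the point is unassigned and the cluster has room.
        pairs = sorted(
            (math.dist(points[i], centers[j]), i, j) for i in idx for j in range(k)
        )
        for _, i, j in pairs:
            if assignment[i] is None and load[j] < caps[j]:
                assignment[i] = j
                load[j] += 1
    return assignment


def train_nearest_centroid(points, labels):
    """Toy base classifier (hypothetical stand-in): one mean vector per class."""
    counts = Counter(labels)
    sums = {}
    for p, c in zip(points, labels):
        acc = sums.setdefault(c, [0.0] * len(p))
        for d, v in enumerate(p):
            acc[d] += v
    centroids = {c: [s / counts[c] for s in acc] for c, acc in sums.items()}
    return lambda x: min(centroids, key=lambda c: math.dist(x, centroids[c]))


def majority_vote(predictions):
    """Return the most frequent label among the base classifiers' predictions."""
    return Counter(predictions).most_common(1)[0][0]


def build_ensemble(points, labels, centers):
    """Train one base classifier per strong, balanced cluster; combine by voting."""
    assignment = strong_balanced_assign(points, labels, centers)
    models = []
    for j in range(len(centers)):
        idx = [i for i, a in enumerate(assignment) if a == j]
        models.append(
            train_nearest_centroid([points[i] for i in idx], [labels[i] for i in idx])
        )
    return lambda x: majority_vote([m(x) for m in models])


if __name__ == "__main__":
    pts = [(0, 0), (0, 1), (1, 0), (1, 1), (5, 5), (5, 6), (6, 5), (6, 6)]
    labs = ["a"] * 4 + ["b"] * 4
    centers = [(0, 0), (6, 6)]  # assumed given, e.g. by a prior K-means run
    print(strong_balanced_assign(pts, labs, centers))  # [0, 0, 1, 1, 0, 1, 0, 1]
    model = build_ensemble(pts, labs, centers)
    print(model((0.2, 0.3)), model((5.8, 5.4)))  # a b
```

In the toy run, each of the two clusters receives two samples of class `a` and two of class `b`, so every base classifier sees both classes in equal proportion instead of being dominated by whichever class happens to lie near its center. The per-class capacities are what enforce the "strong" property; rotating the remainder slots across classes keeps the overall cluster sizes within one sample of each other.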

Availability of data and material

This study incorporated 15 datasets from the UCI Machine Learning Repository and a dataset from www.kaggle.com.

Funding

Not applicable.

Author information

Contributions

AH and SAMA conceived of the presented idea. SAMA developed the theory and performed the computations. FM verified the analytical methods. All authors discussed the results and contributed to the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Mousavian Anaraki, S.A., Haeri, A. & Moslehi, F. Generating balanced and strong clusters based on balance-constrained clustering approach (strong balance-constrained clustering) for improving ensemble classifier performance. Neural Comput & Applic 34, 21139–21155 (2022). https://doi.org/10.1007/s00521-022-07595-6

DOI: https://doi.org/10.1007/s00521-022-07595-6