Abstract

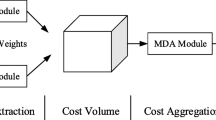

Transformers have achieved impressive performance in natural language processing and computer vision, in tasks such as text translation and semantic segmentation. Stereo matching has not shared in this success, because self-attention over a cost volume demands excessive computation and memory. To make transformers practical for stereo matching, especially on limited hardware, we propose a sliding space-disparity transformer named SSD-former. Guided by the structure of the matching problem, we simplify the transformer to obtain higher speed, lower memory use, and competitive accuracy. First, we employ a sliding window scheme that restricts self-attention to local windows of the cost volume, which lets the model adapt to different resolutions and brings both efficiency and flexibility. Second, our space-disparity transformer substantially reduces memory occupation and computation by computing the current patch's self-attention with only two sets of patches: (1) all patches at the current disparity level across every spatial location and (2) the patches at the other disparity levels at the same spatial location. The experiments demonstrate that: (1) compared with the standard transformer, SSD-former is faster and uses less memory; (2) compared with 3D convolution methods, SSD-former has a larger receptive field and runs at an impressive speed, showing great potential in stereo matching; and (3) our model obtains state-of-the-art performance at a faster speed on multiple popular datasets, achieving the best speed–accuracy trade-off.
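
To make the two-part attention pattern concrete, the following minimal sketch counts how many keys a single query patch attends to inside one window of the cost volume, under full self-attention and under the space-disparity pattern described above. It is an illustration only, not the authors' implementation: the function names, the `(disparity, row, col)` token layout, and the toy window size are all assumptions, and the sketch assumes the query patch itself belongs to both sets and is counted once.

```python
def full_attention_keys(d, h, w):
    """Keys per query when every patch attends to every patch in the window."""
    return d * h * w


def space_disparity_keys(d, h, w):
    """Keys per query under the two-part pattern (hypothetical helper)."""
    same_level = h * w    # part (1): all spatial positions at the query's disparity level
    same_position = d     # part (2): all disparity levels at the query's spatial position
    return same_level + same_position - 1  # the query lies in both sets; count it once


def attends(query, key):
    """True if `key` is visible to `query`; both are (disparity, row, col) tuples."""
    qd, qy, qx = query
    kd, ky, kx = key
    return kd == qd or (ky, kx) == (qy, qx)


if __name__ == "__main__":
    d, h, w = 4, 3, 5  # toy window size, chosen only for illustration
    tokens = [(i, y, x) for i in range(d) for y in range(h) for x in range(w)]
    visible = sum(attends((1, 2, 3), k) for k in tokens)
    print(visible, space_disparity_keys(d, h, w), full_attention_keys(d, h, w))
```

In this toy setting the number of keys per query grows as `h * w + d` rather than `d * h * w`, which is the source of the memory and computation savings the abstract describes.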

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China (Grants 61671387, 61420106007, 61871325, and 62001396).

Ethics declarations

Conflicts of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Rao, Z., He, M., Dai, Y. et al. Sliding space-disparity transformer for stereo matching. Neural Comput & Applic 34, 21863–21876 (2022). https://doi.org/10.1007/s00521-022-07621-7

DOI: https://doi.org/10.1007/s00521-022-07621-7